Chapter 6 Multiple Regression

## Linking to ImageMagick 6.9.12.3

## Enabled features: cairo, fontconfig, freetype, heic, lcms, pango, raw, rsvg, webp

## Disabled features: fftw, ghostscript, x11This chapter deals with the regression problem where the goal is to understand the relationship between a specific variable called the response or dependent variable (\(y\)) and several other related variables called explanatory or independent variables or more generally covariates. This is an extension of our previous discussion of simple regression, where we only had a single covariate (\(x\)).

6.1 Examples

We will go through some specific examples to demonstrate the range of the types of problems we might consider in this chapter.

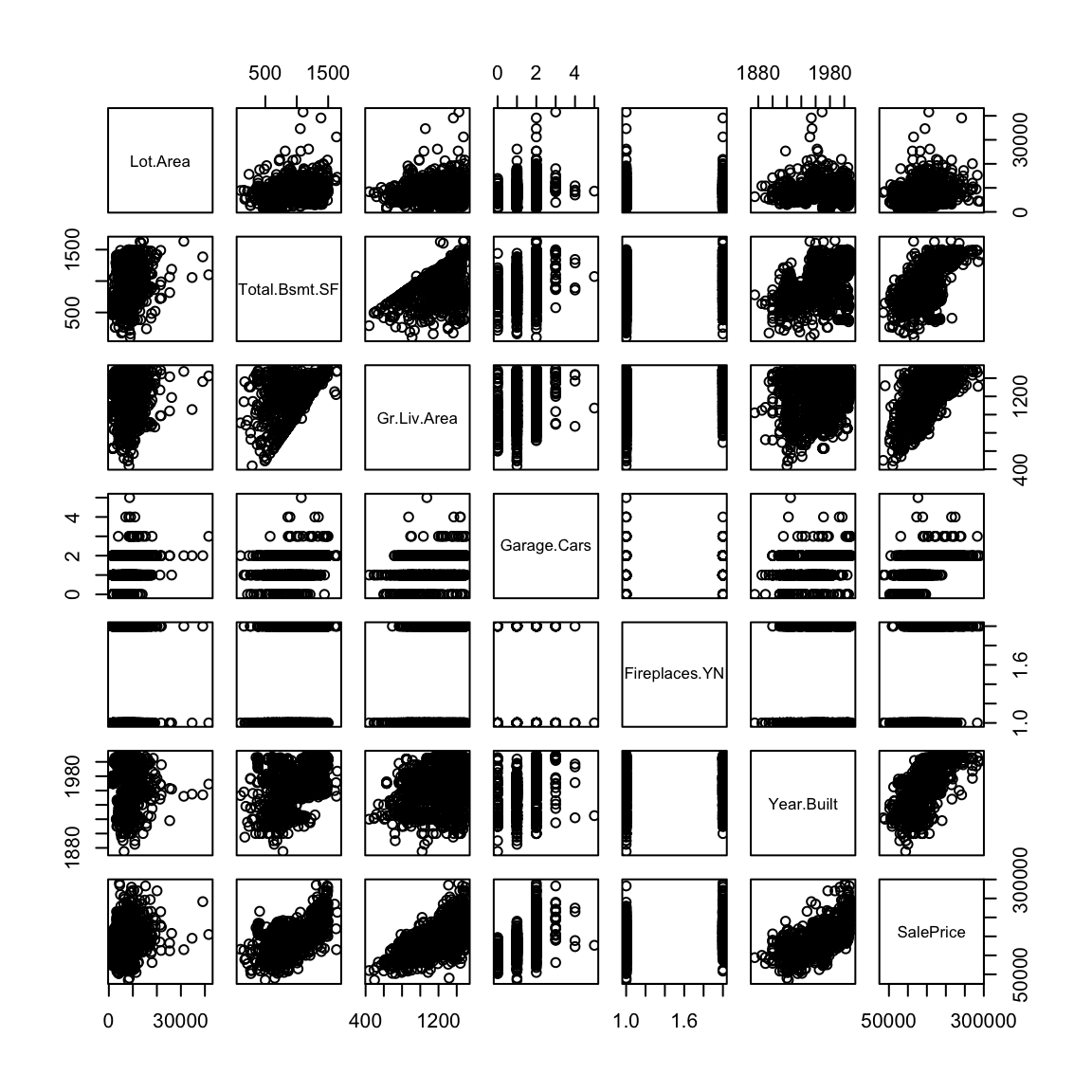

- Prospective buyers and sellers might want to understand how the price of a house depends on various characteristics of the house such as the total above ground living space, total basement square footage, lot area, number of cars that can be parked in the garage, construction year and presence or absence of a fireplace. This is an instance of a regression problem where the response variable is the house price and the other characteristics of the house listed above are the explanatory variables.

This dataset contains information on sales of houses in Ames, Iowa from 2006 to 2010. The full dataset can be obtained by following links given in the paper: (https://ww2.amstat.org/publications/jse/v19n3/decock.pdf)). I have shortened the dataset slightly to make life easier for us.

dataDir <- "../finalDataSets"

dd = read.csv(file.path(dataDir, "Ames_Short.csv"),

header = T, stringsAsFactors = TRUE)

pairs(dd)

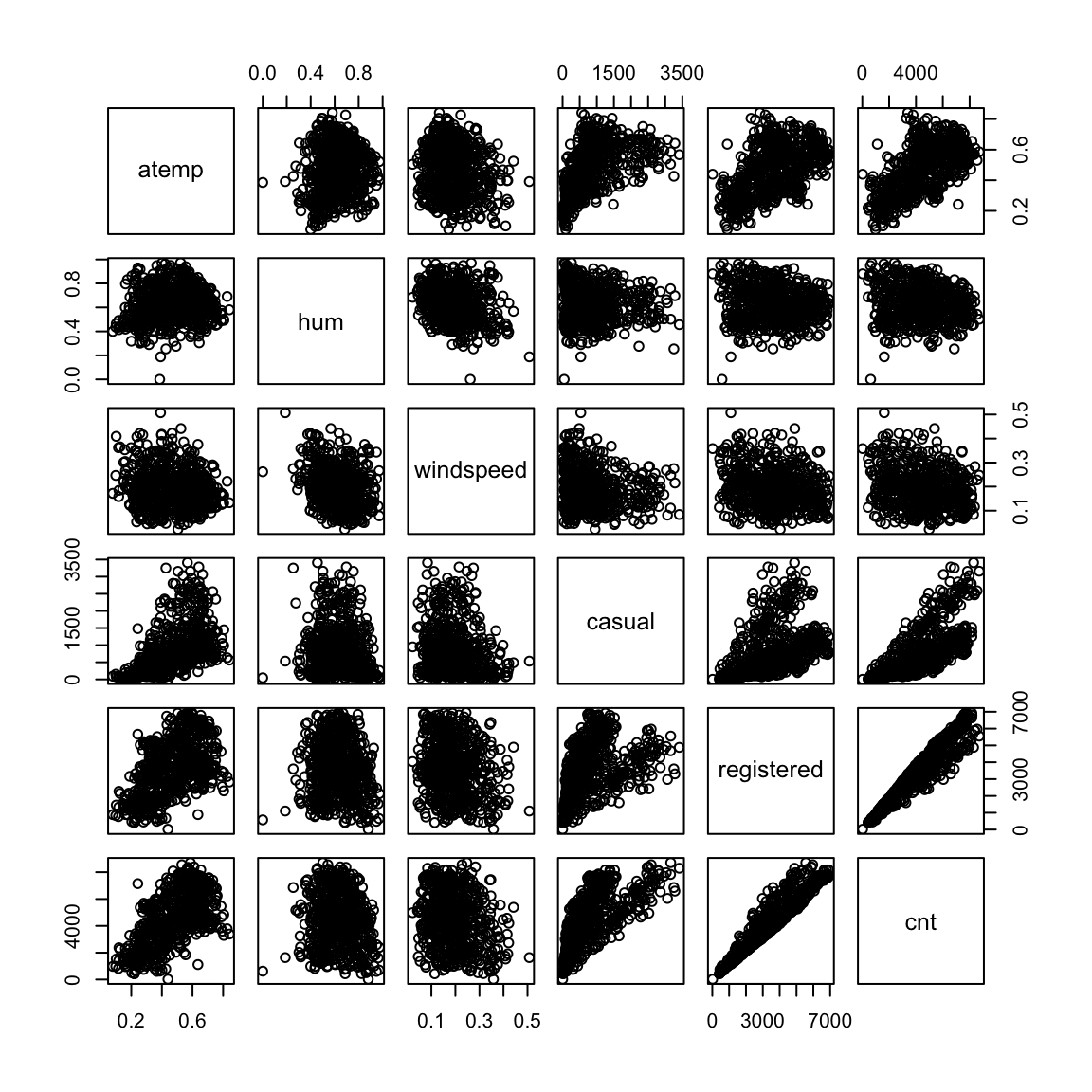

- A bike rental company wants to understand how the number of bike rentals in a given hour depends on environmental and seasonal variables (such as temperature, humidity, presence of rain etc.) and various other factors such as weekend or weekday, holiday etc. This is also an instance of a regression problems where the response variable is the number of bike rentals and all other variables mentioned are explanatory variables.

bike <- read.csv(file.path(dataDir, "DailyBikeSharingDataset.csv"),

stringsAsFactors = TRUE)

bike$yr <- factor(bike$yr)

bike$mnth <- factor(bike$mnth)

pairs(bike[, 11:16])

We might want to understand how the retention rates of colleges depend on various aspects such as tuition fees, faculty salaries, number of faculty members that are full time, number of undergraduates enrolled, number of students on federal loans etc. using our college data from before. This is again a regression problem with the response variable being the retention rate and other variables being the explanatory variables.

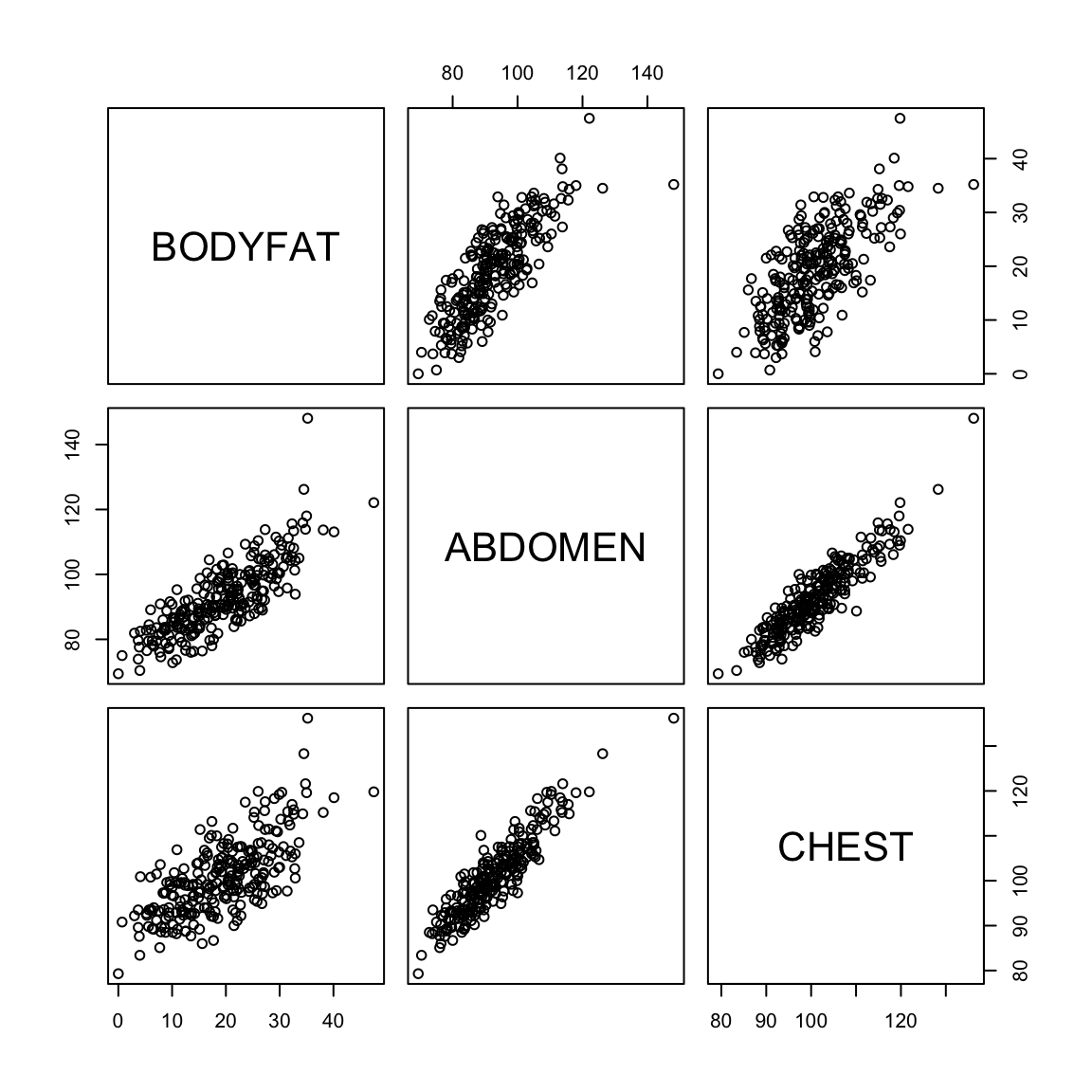

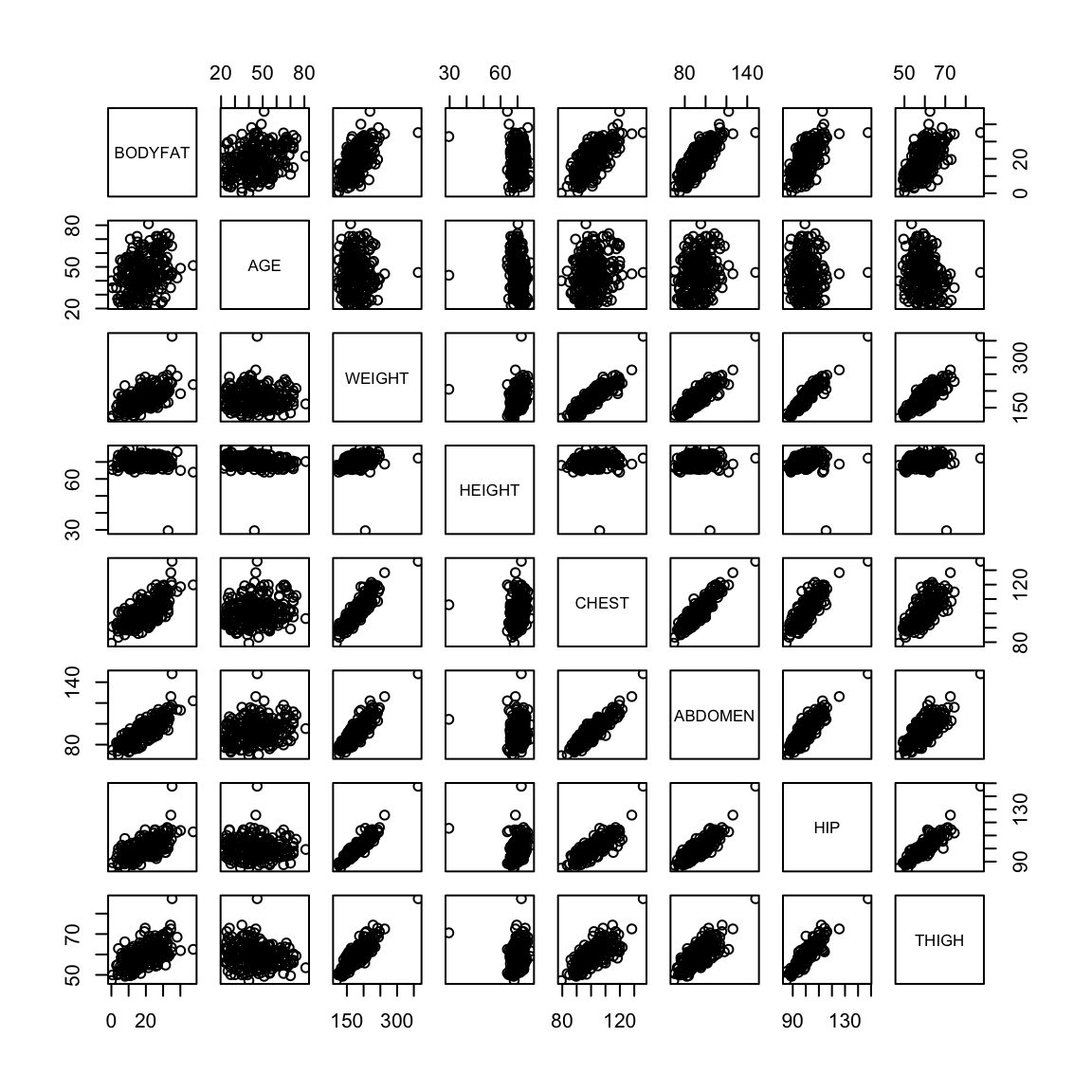

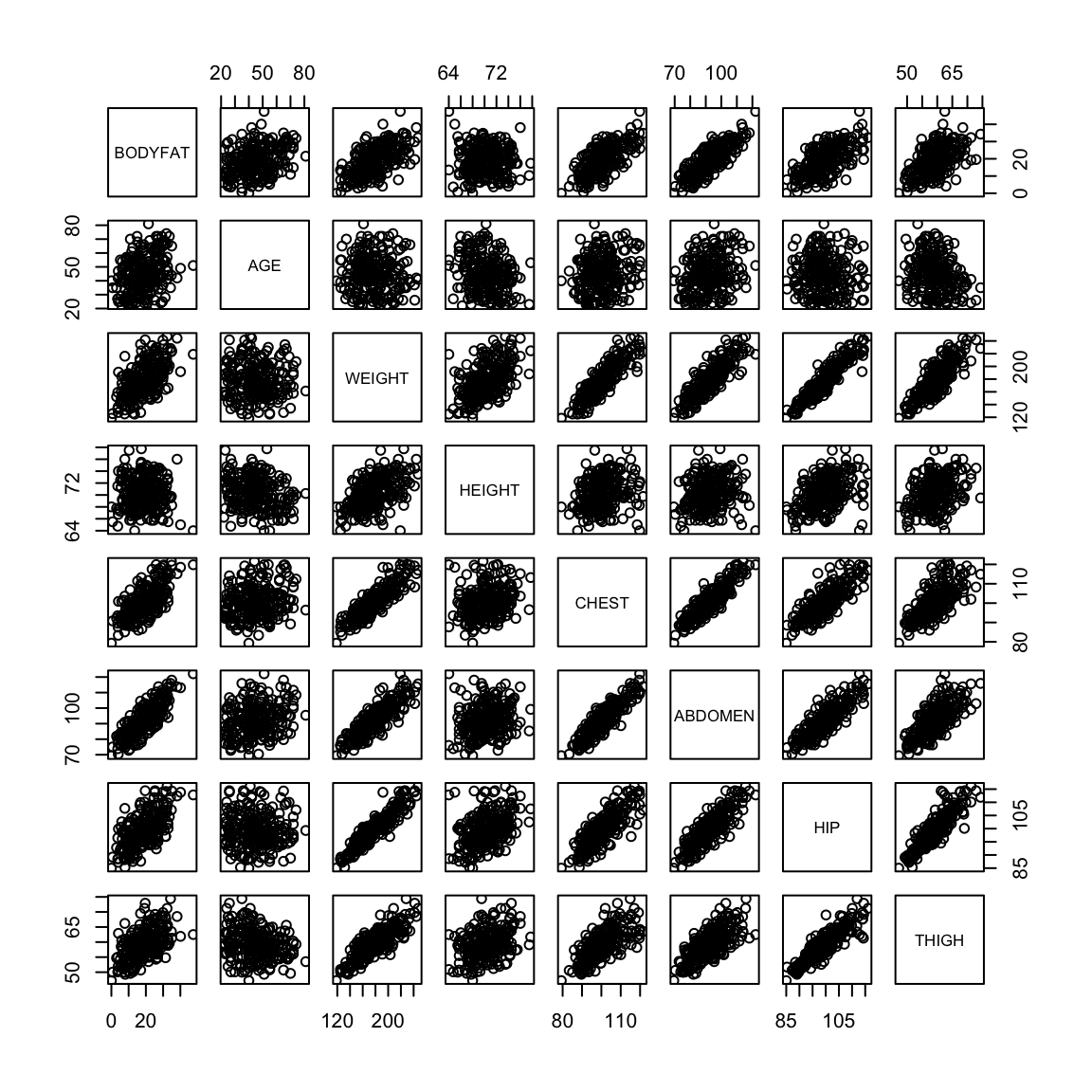

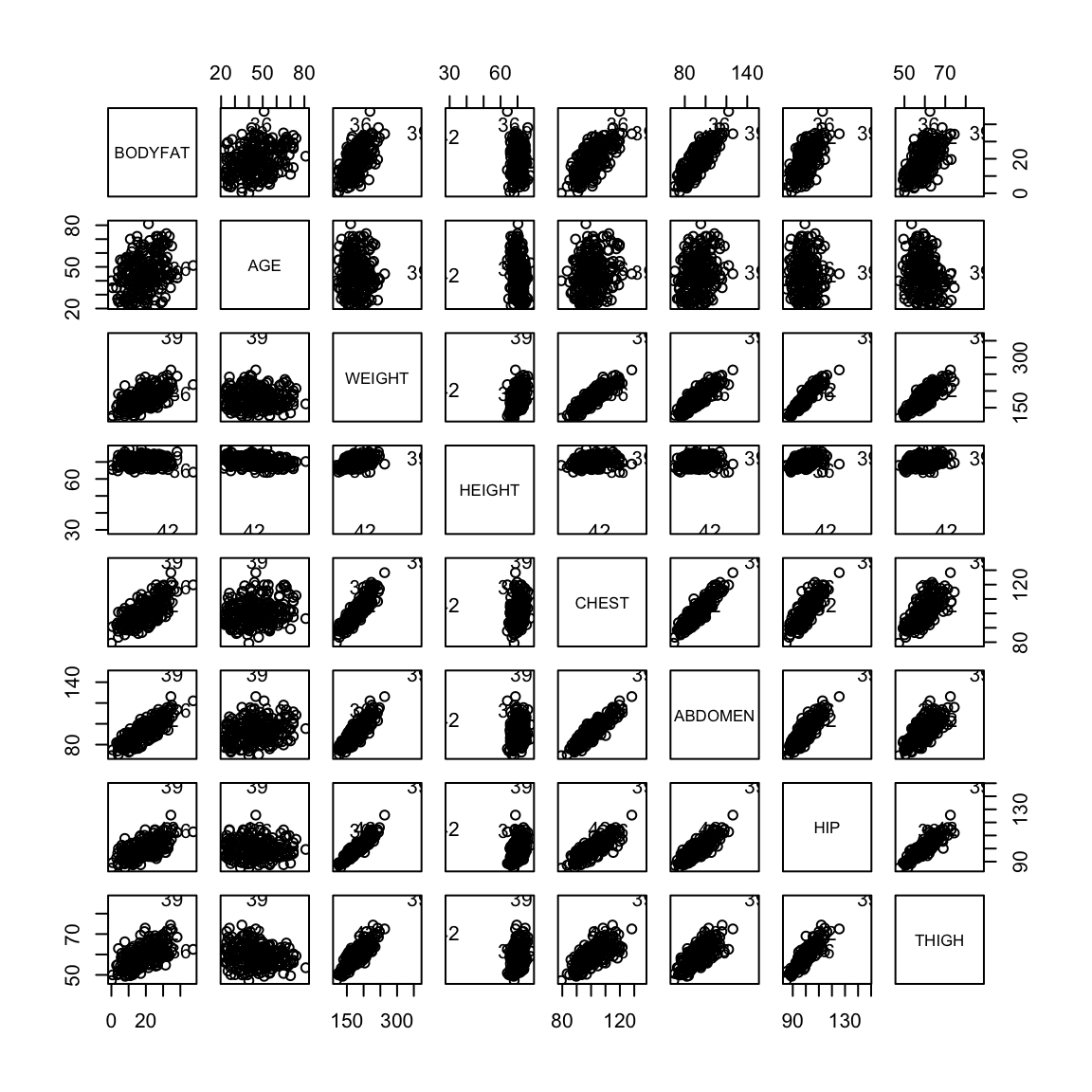

We might be interested in understanding the proportion of my body weight that is fat (body fat percentage). Directly measuring this quantity is probably hard but I can easily obtain various body measurements such as height, weight, age, chest circumeference, abdomen circumference, hip circumference and thigh circumference. Can we predict my body fat percentage based on these measurements? This is again a regression problem with the response variable being body fat percentage and all the measurements are explanatory variables.

Body fat percentage (computed by a complicated underwater weighing technique) along with various body measurements are given for 252 adult men.

body = read.csv(file.path(dataDir, "bodyfat_short.csv"),

header = T, stringsAsFactors = TRUE)

pairs(body)

There are outliers in the data and they make it hard to look at the relationships between the variables. We can try to look at the pairs plots after deleting some outlying observations.

ou1 = which(body$HEIGHT < 30)

ou2 = which(body$WEIGHT > 300)

ou3 = which(body$HIP > 120)

ou = c(ou1, ou2, ou3)

pairs(body[-ou, ])

6.2 The nature of the `relationship’

Notice that in these examples, the goals of the analysis shift depending on the example from truly wanting to just be able to predict future observations (e.g. body-fat), wanting to have insight into how to the variables are related to the response (e.g. college data), and a combination of the two (e.g. housing prices and bike sharing).

What do we mean by the relationship of a explanatory variable to a response? There are multiple valid interpretations that are used in regression that are important to distinguish.

- The explanatory variable is a good predictor of the response.

The explanatory variable is necessary for good prediction of the response (among the set of variables we are considering).

Changes in the explanatory variable cause the response to change (causality).

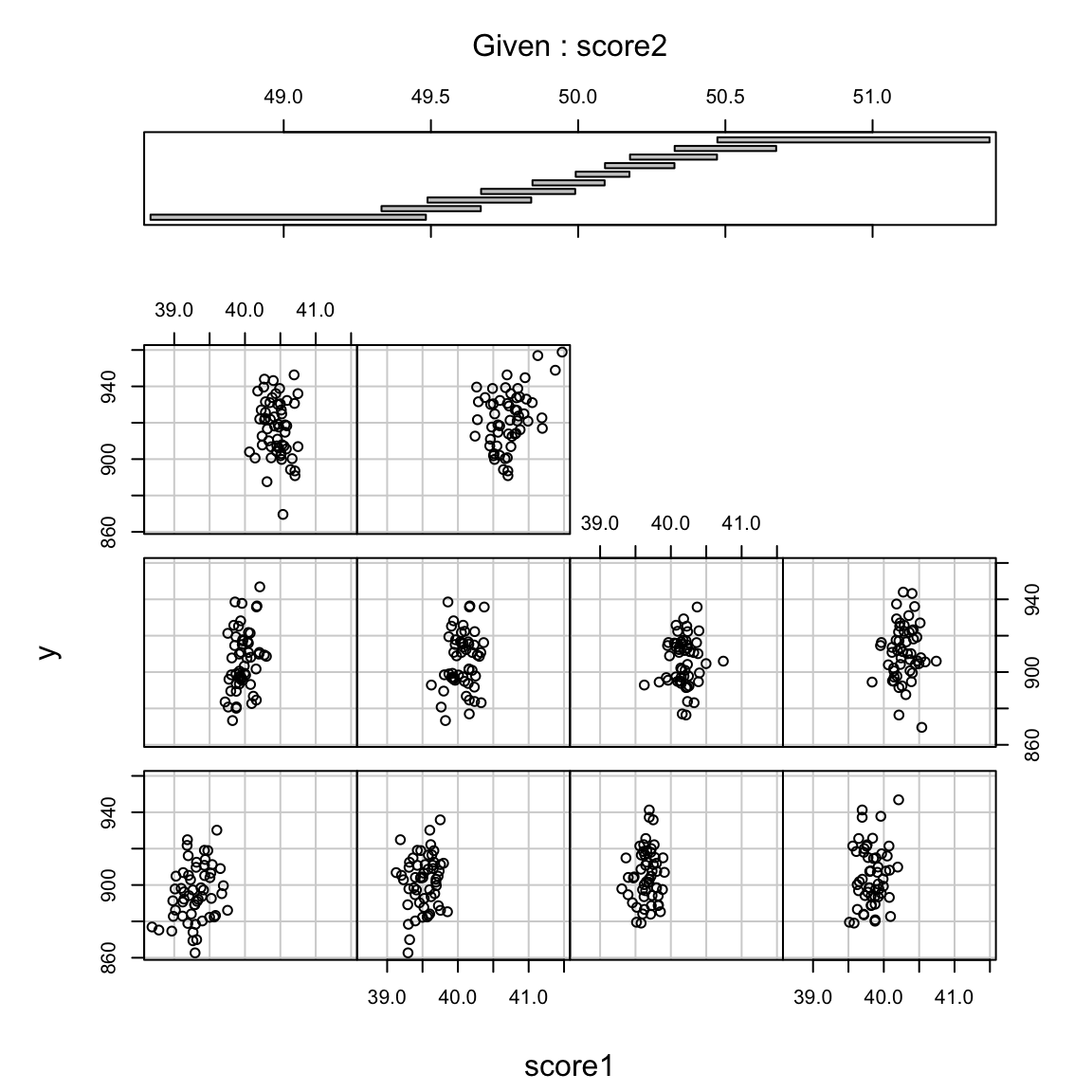

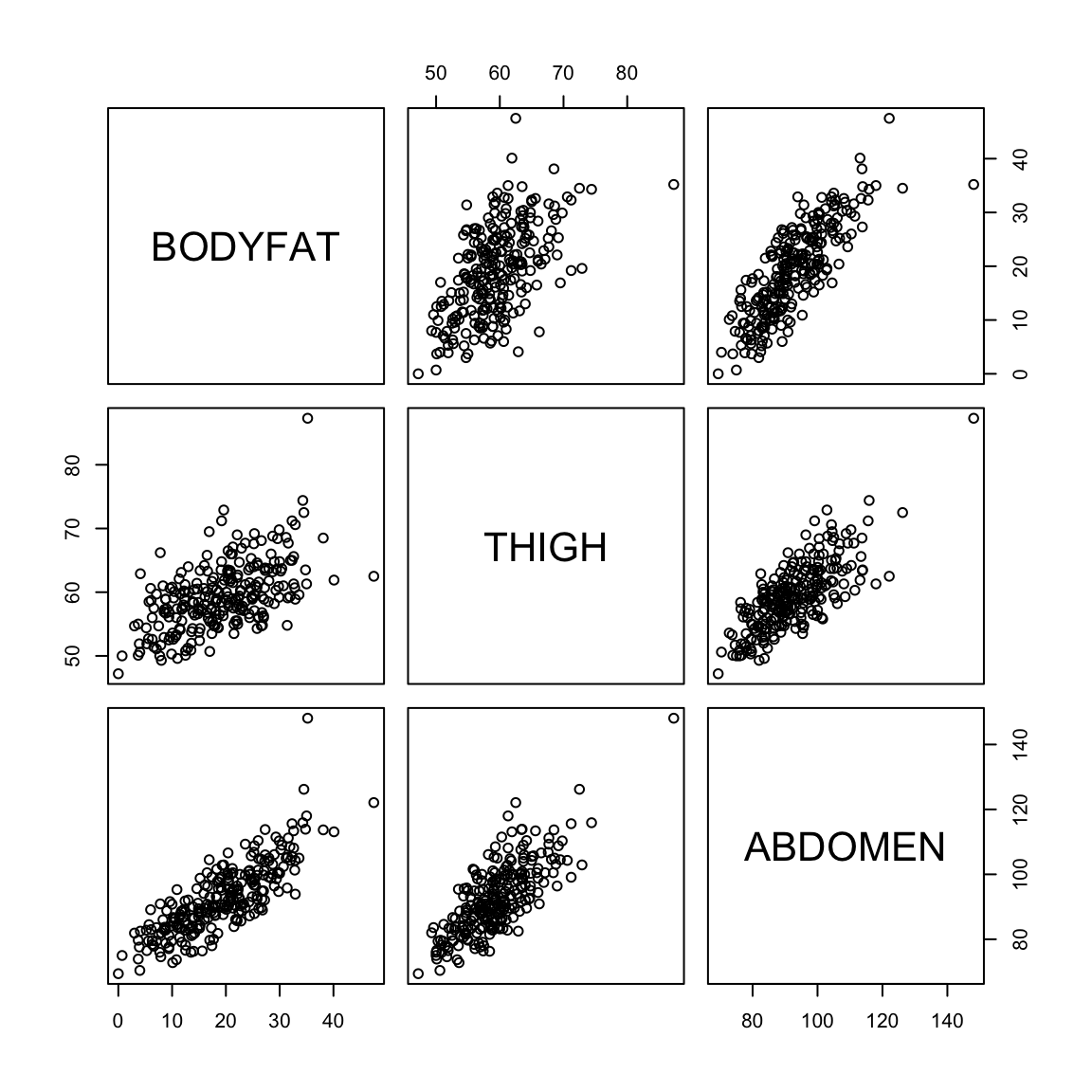

We can visualize the difference in the first and second with plots. Being a good predictor is like the pairwise scatter plots from before, in which case both thigh and abdominal circumference are good predictors of percentage of body fat.

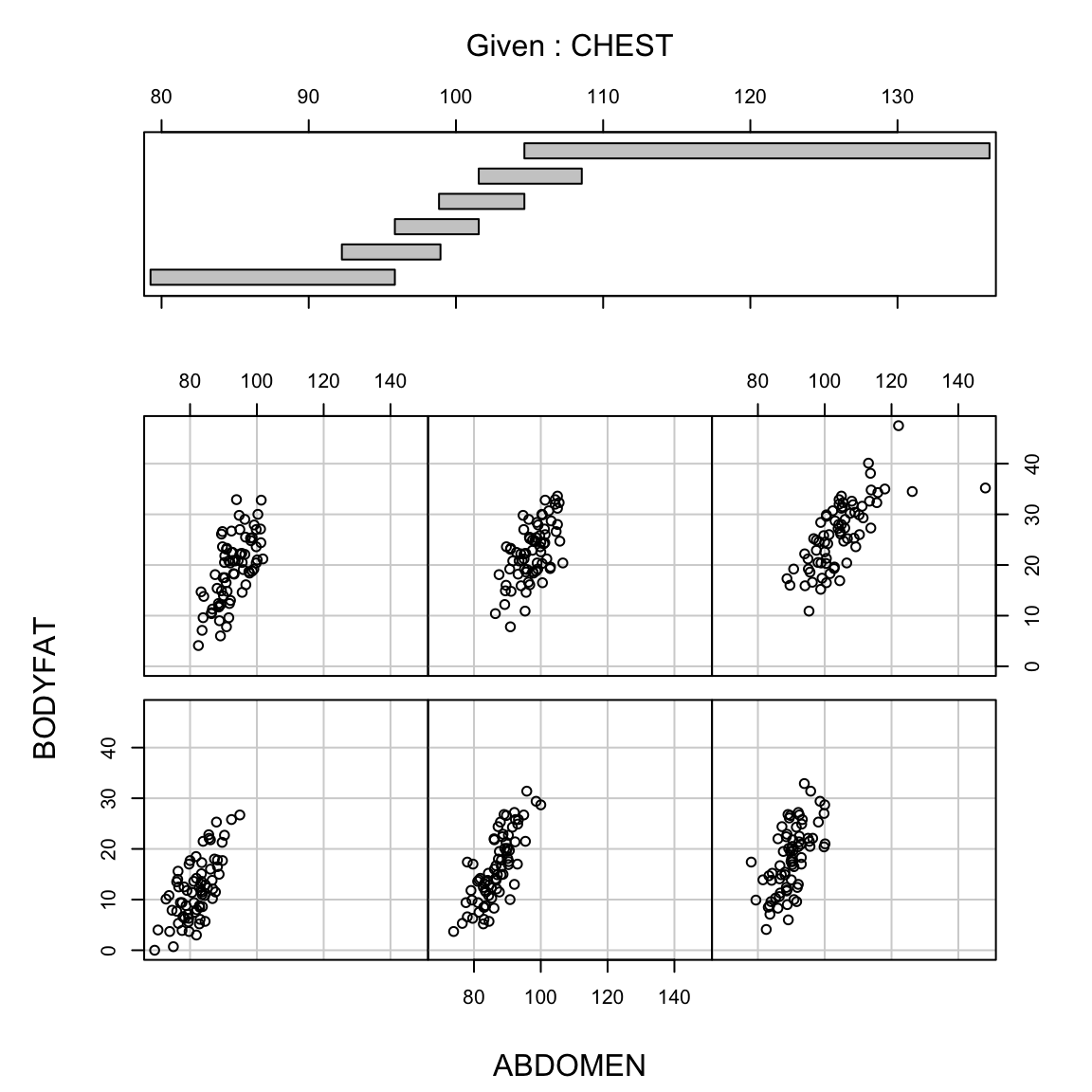

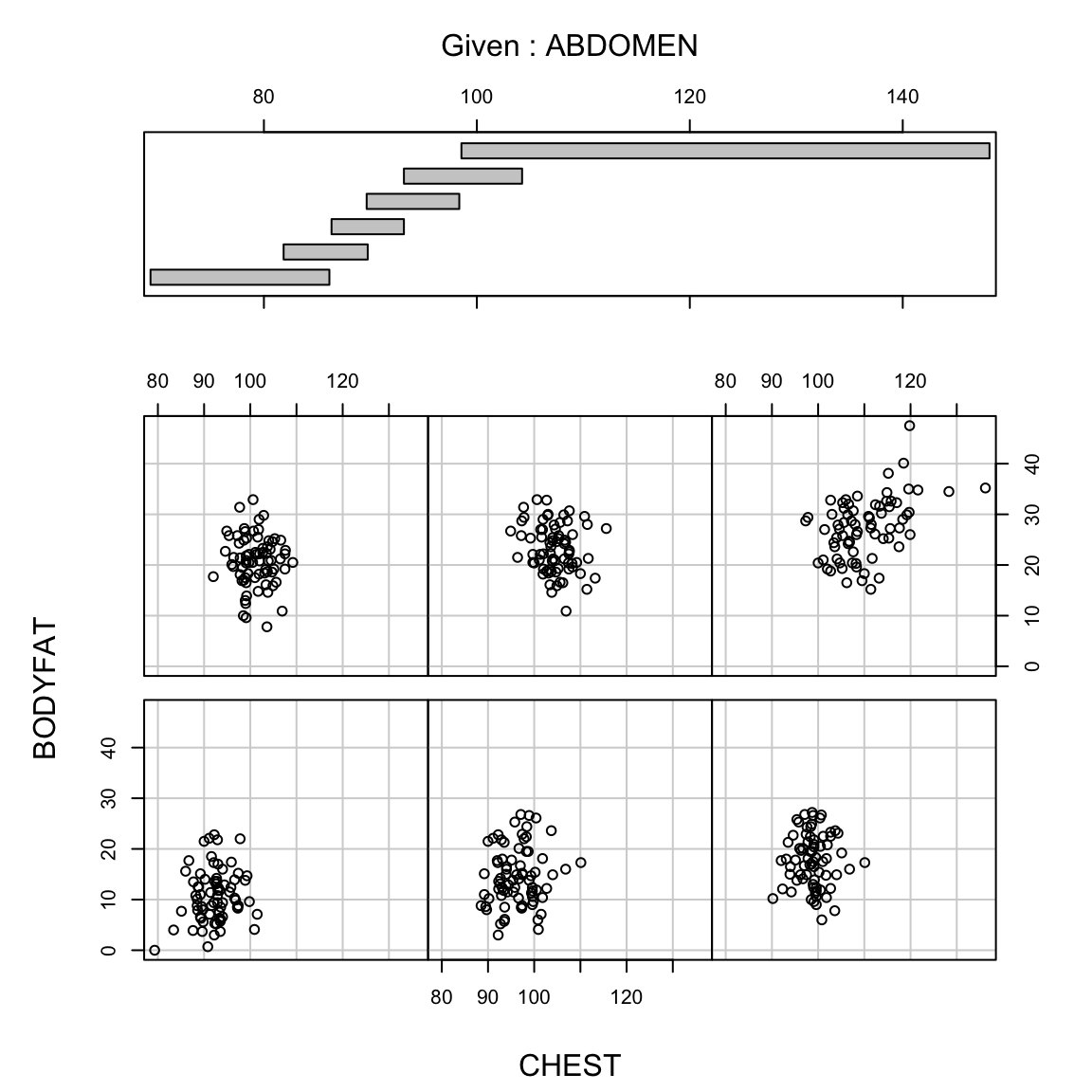

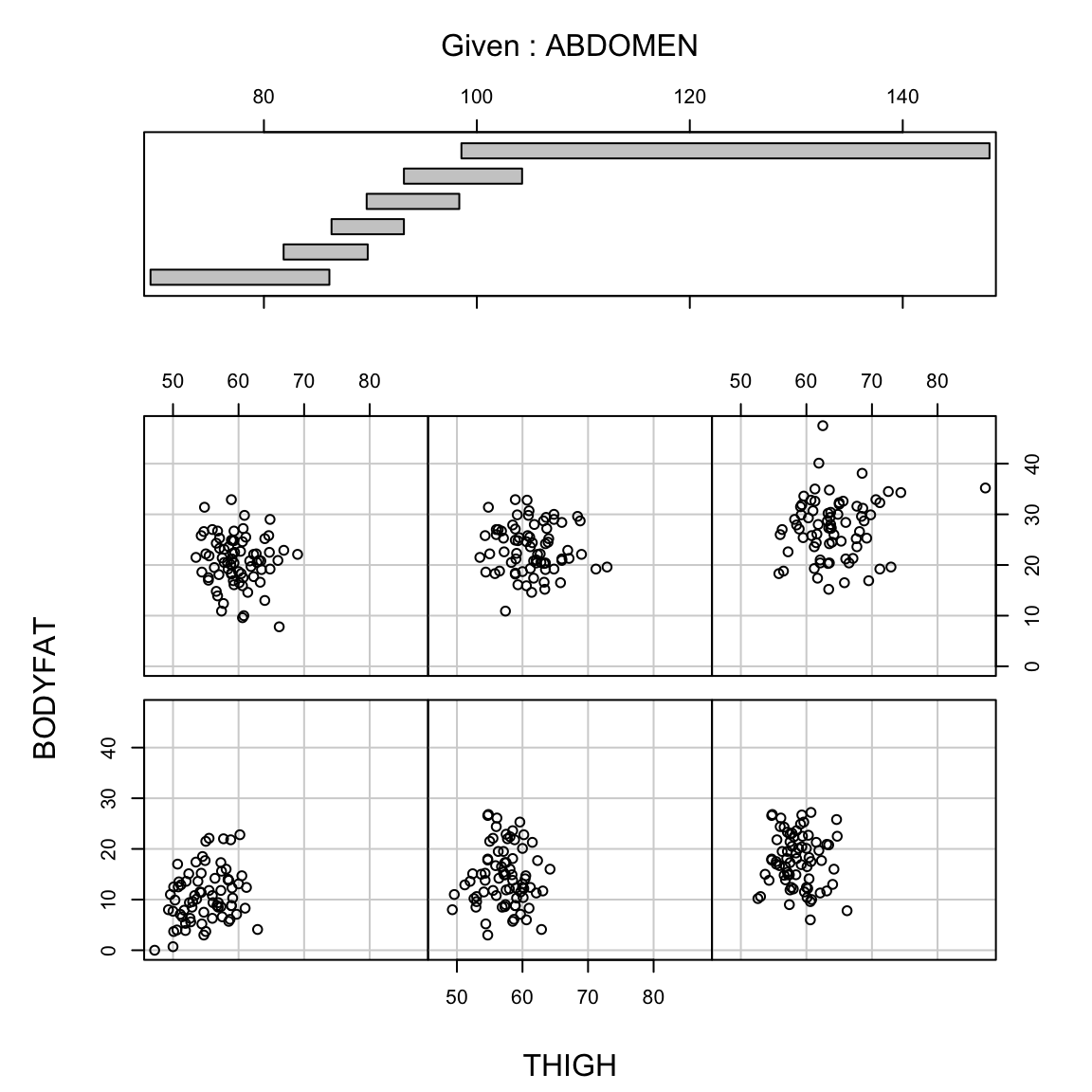

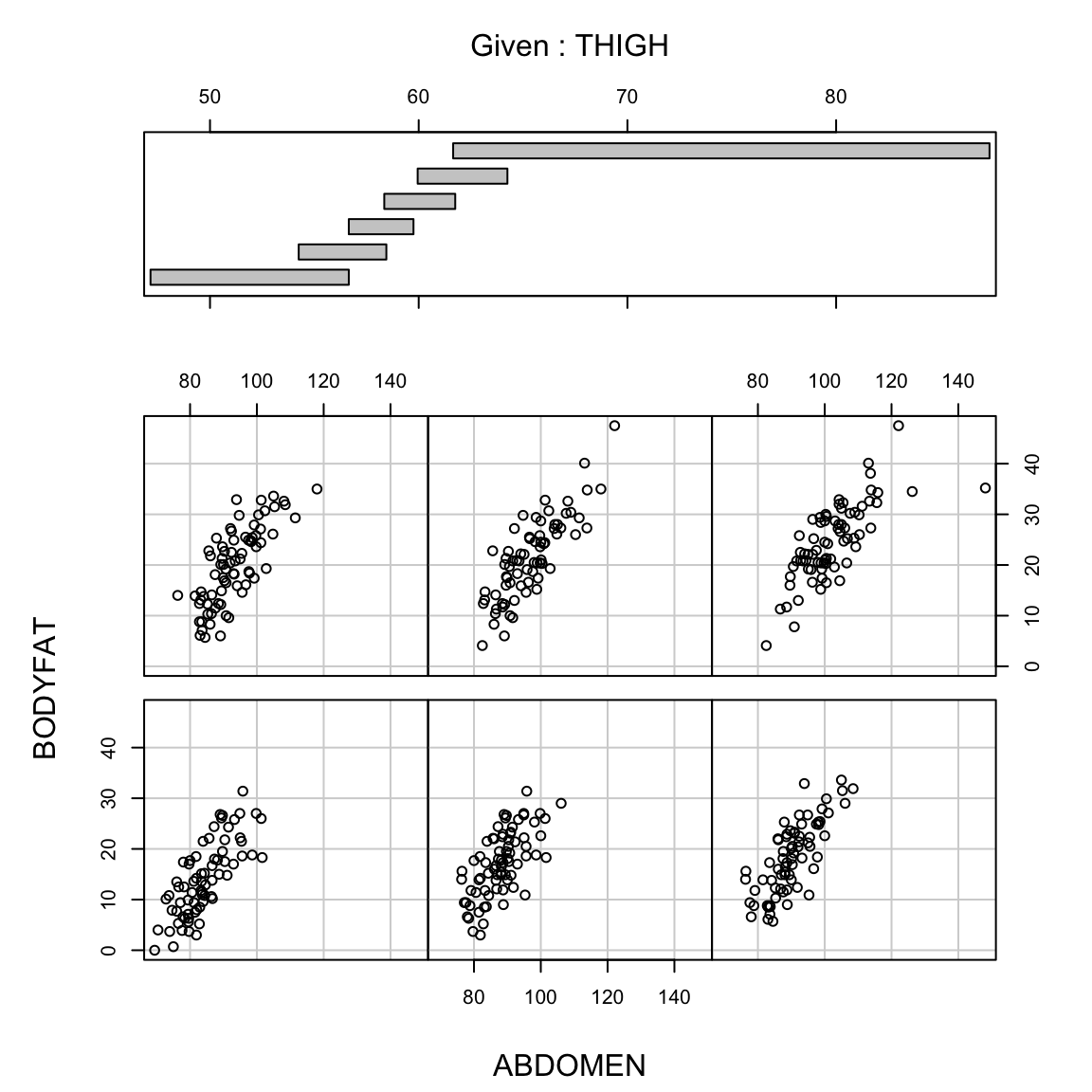

But in fact if we know the abdominal circumference, the thigh circumference does not tell us much more. A coplot visualizes this relationship, by plotting the relationship between two variables, conditional on the value of another. In otherwords, it plots the scatter plot of percent body fat against thigh, but only for those points for abdomen in a certain range (with the ranges indicated at the top).

We see there is no longer a strong relationship between percentage body fat and thigh circumference for specific values of abdomen circumference

The same is not true, however, for the reverse,

We will see later in the course when we have many variables the answers to these three questions are not always the same (and that we can’t always answer all of them). We will almost always be able to say something about the first two, but the last is often not possible.

6.2.1 Causality

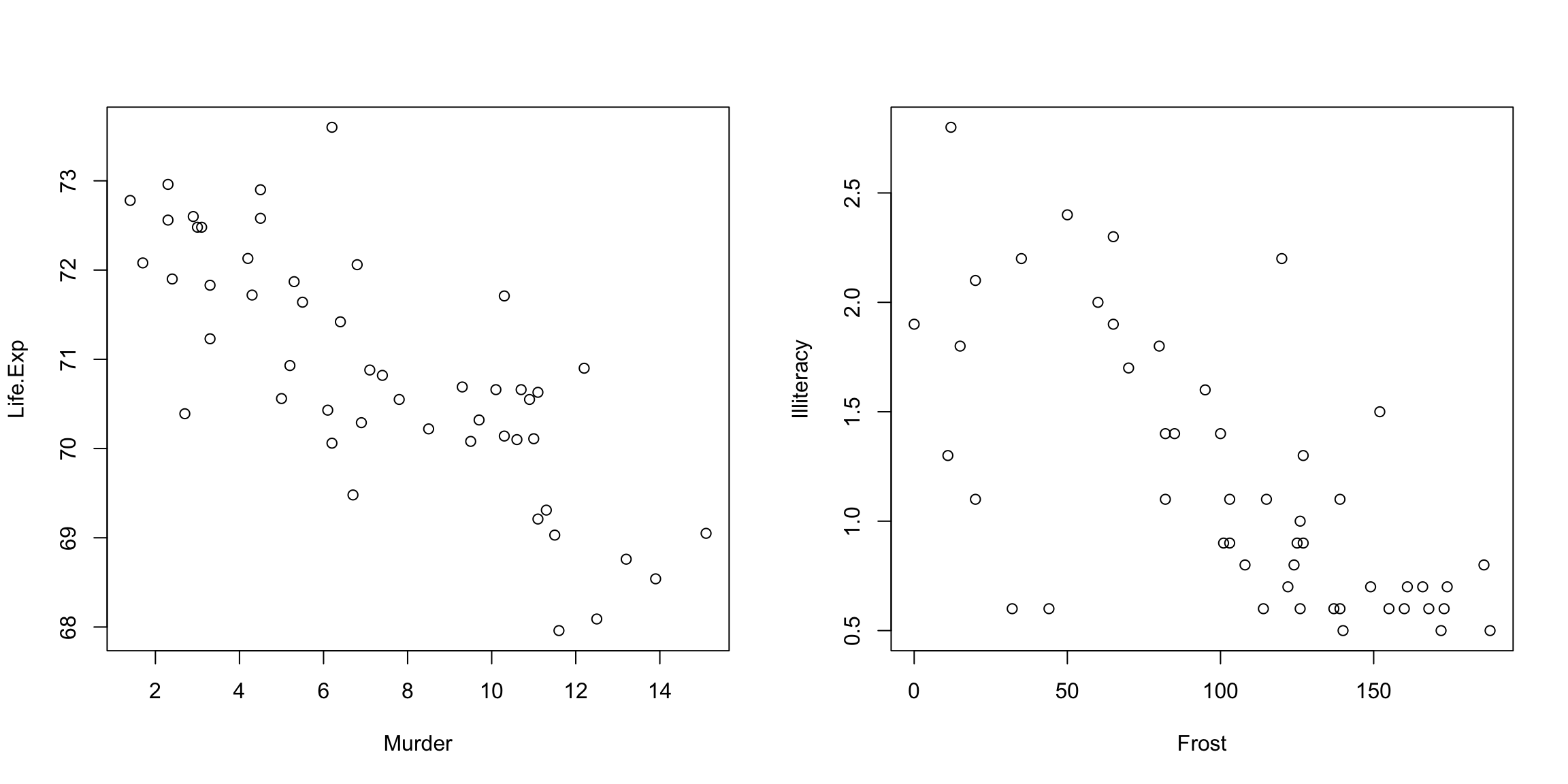

Often a (unspoken) goal of linear regression can be to determine whether something `caused’ something else. It is critical to remember that whether you can attribute causality to a variable depends on how your data was collected. Specifically, most people often have observational data, i.e. they sample subjects or units from the population and then measure the variables that naturally occur on the units they happen to sample. In general, you cannot determine causality by just collecting observations on existing subjects. You can only observe what is likely to naturally occur jointly in your population, often due to other causes. Consider the following data on the relationship between the murder rate and the life expectancy of different states or that of Illiteracy and Frost:

st <- as.data.frame(state.x77)

colnames(st)[4] = "Life.Exp"

colnames(st)[6] = "HS.Grad"

par(mfrow = c(1, 2))

with(st, plot(Murder, Life.Exp))

with(st, plot(Frost, Illiteracy))

What do you observe in the plotted relationship between the murder rate and the life expectancy ? What about between frost levels and illiteracy? What would it mean to (wrongly) assume causality here?

It is a common mistake in regression to to jump to the conclusion that one variable causes the other, but all you can really say is that there is a strong relationship in the population, i.e. when you observe one value of the variable you are highly likely to observed a particular value of the other.

Can you ever claim causality? Yes, if you run an experiment; this is where you assign what the value of the predictors are for every observation independently from any other variable. An example is a clinical trial, where patients are randomly assigned a treatment.

It’s often not possible to run an experiment, especially in the social sciences or working with humans (you can’t assign a murder rate in a state and sit back and see what the effect is on life expectancy!). In the absence of an experiment, it is common to collect a lot of other variables that might also explain the response, and ask our second question – “how necessary is it (in addition to these other variables)?” with the idea that this is a proxy for causality. This is sometime called “controlling” for the effect of the other variables, but it is important to remember that this is not the same as causality.

Regardless, the analysis of observational and experimental data often both use linear regression.53 It’s what conclusions you can draw that differ.

6.3 Multiple Linear Regression

The body fat dataset is a useful one to use to explain linear regression because all of the variables are continuous and the relationships are reasonably linear.

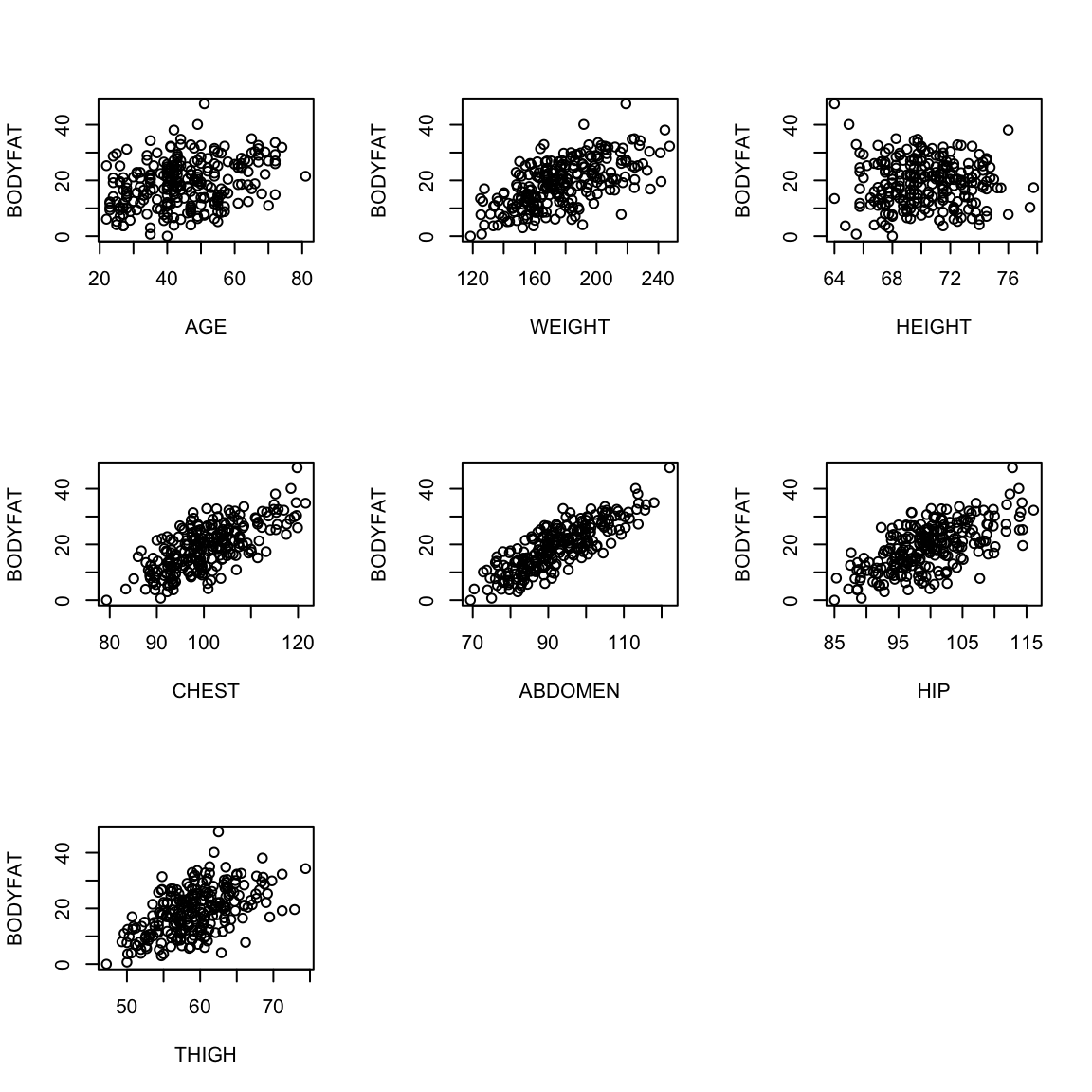

Let us look at the plots between the response variable (bodyfat) and all the explanatory variables (we’ll remove the outliers for this plot).

par(mfrow = c(3, 3))

for (i in 2:8) {

plot(body[-ou, i], body[-ou, 1], xlab = names(body)[i],

ylab = "BODYFAT")

}

par(mfrow = c(1, 1))

Most pairwise relationships seem to be linear. The clearest relationship is between bodyfat and abdomen. The next clearest is between bodyfat and chest.

We can expand the simple regression we used earlier to include more variables. \[y=\beta_0+\beta_1 x^{(1)}+\beta_2 x^{(2)}+\ldots \]

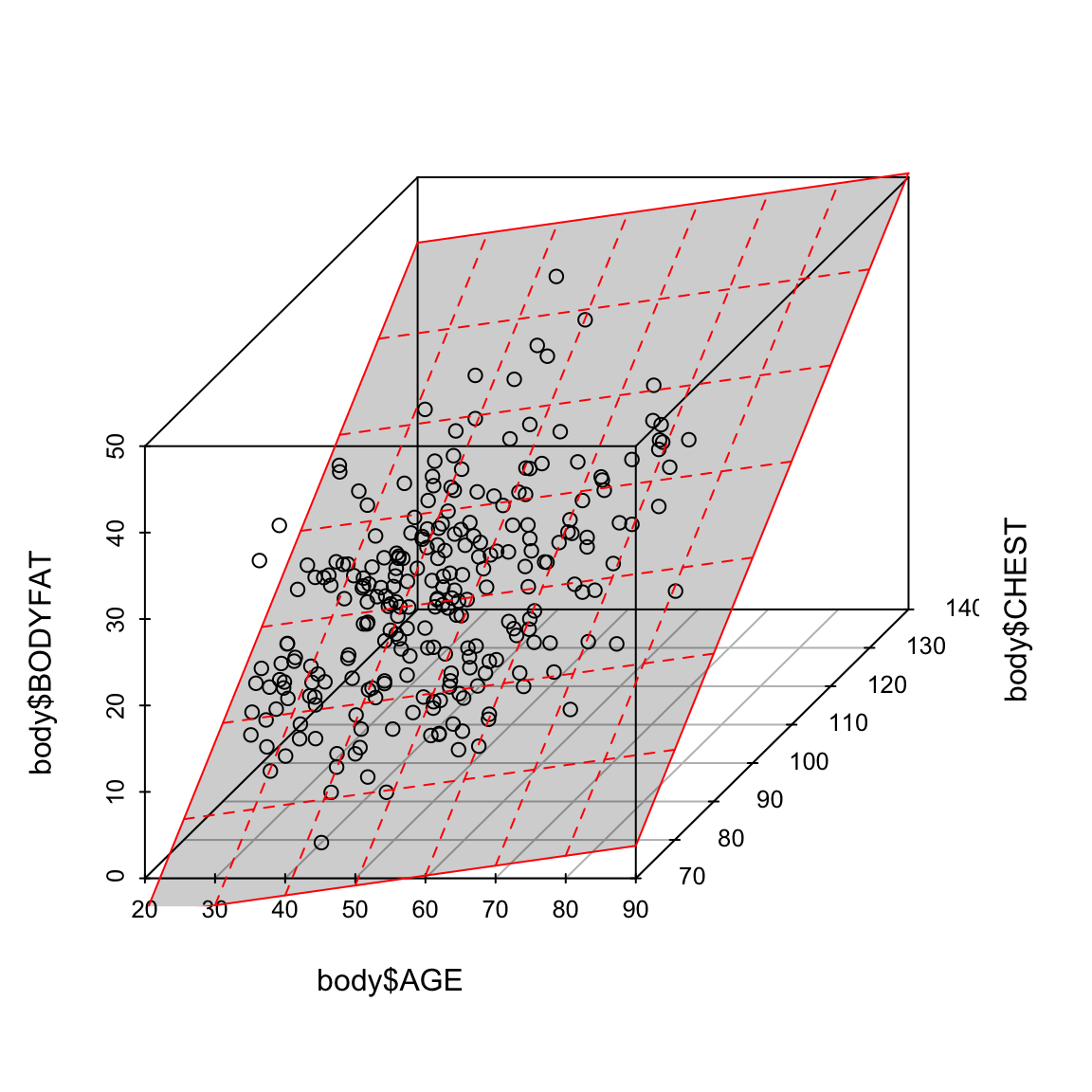

6.3.1 Regression Line vs Regression Plane

In simple linear regression (when there is only one explanatory variable), the fitted regression equation describes a line. If we have two variables, it defines a plane. This plane can be plotted in a 3D plot when there are two explanatory variables. When the number of explanatory variables is 3 or more, we have a general linear combination54 and we cannot plot this relationship.

To illustrate this, let us fit a regression equation to bodyfat percentage in terms of age and chest circumference:

We can visualize this 3D plot:

library(scatterplot3d)

sp = scatterplot3d(body$AGE, body$CHEST, body$BODYFAT)

sp$plane3d(ft2, lty.box = "solid", draw_lines = TRUE,

draw_polygon = TRUE, col = "red")

6.3.2 How to estimate the coefficients?

We can use the same principle as before. Specifically, for any selection of our \(\beta_j\) coefficients, we get a predicted or fitted value \(\hat{y}\). Then we can look for the \(\beta_j\) which minimize our loss \[\sum_{i=1}^n \ell(y_i,\hat{y}_i)\] Again, standard regression uses squared-error loss, \[\sum_{i=1}^n (y_i-\hat{y}_i)^2.\]

We again can fit this by using lm in R, with similar syntax as before:

##

## Call:

## lm(formula = BODYFAT ~ AGE + WEIGHT + HEIGHT + CHEST + ABDOMEN +

## HIP + THIGH, data = body)

##

## Residuals:

## Min 1Q Median 3Q Max

## -11.0729 -3.2387 -0.0782 3.0623 10.3611

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -3.748e+01 1.449e+01 -2.585 0.01031 *

## AGE 1.202e-02 2.934e-02 0.410 0.68246

## WEIGHT -1.392e-01 4.509e-02 -3.087 0.00225 **

## HEIGHT -1.028e-01 9.787e-02 -1.051 0.29438

## CHEST -8.312e-04 9.989e-02 -0.008 0.99337

## ABDOMEN 9.685e-01 8.531e-02 11.352 < 2e-16 ***

## HIP -1.834e-01 1.448e-01 -1.267 0.20648

## THIGH 2.857e-01 1.362e-01 2.098 0.03693 *

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 4.438 on 244 degrees of freedom

## Multiple R-squared: 0.7266, Adjusted R-squared: 0.7187

## F-statistic: 92.62 on 7 and 244 DF, p-value: < 2.2e-16In fact, if we want to use all the variables in a data.frame we can use a simpler notation:

Notice how similar the output to the function above is to the case of simple linear regression. R has fit a linear equation for the variable BODYFAT in terms of the variables AGE, WEIGHT, HEIGHT, CHEST, ABDOMEN, HIP and THIGH. Again, the summary of the output gives each variable and its estimated coefficient,

\[\begin{align*} BODYFAT &= -37.48 + 0.012*AGE - 0.139*WEIGHT - 0.102*HEIGHT \\ & - 0.0008*CHEST + 0.968*ABDOMEN - 0.183*HIP + 0.286*THIGH \nonumber \end{align*}\]

We can also write down explicit equations for the estimates of the \(\hat{\beta}_j\) when we use squared-error loss, though we won’t give them here (they are usually given in matrix notation).

6.3.3 Interpretation of the regression equation

Here the coefficient \(\hat{\beta}_1\) is interpreted as the average increase in \(y\) for unit increase in \(x^{(1)}\), provided all other explanatory variables \(x^{(2)}, \dots, x^{(p)}\) are kept constant. More generally for \(j \geq 1\), the coefficient \(\hat{\beta}_j\) is interpreted as the average increase in \(y\) for unit increase in \(x^{(j)}\) provided all other explanatory variables \(x^{(k)}\) for \(k \neq j\) are kept constant. The intercept \(\hat{\beta}_0\) is interpreted as the average value of \(y\) when all the explanatory variables are equal to zero.

In the body fat example, the fitted regression equation as we have seen is: \[\begin{align*} BODYFAT &= -37.48 + 0.012*AGE - 0.139*WEIGHT - 0.102*HEIGHT \\ & - 0.0008*CHEST + 0.968*ABDOMEN - 0.183*HIP + 0.286*THIGH \end{align*}\] The coefficient of \(0.968\) can be interpreted as the average percentage increase in bodyfat percentage per unit (i.e., \(1\) cm) increase in Abdomen circumference provided all the other explanatory variables age, weight, height, chest circumference, hip circumference and thigh circumference are kept unchanged.

Do the signs of the fitted regression coefficients make sense?

6.3.3.1 Scaling and the size of the coefficient

It’s often tempting to look at the size of the \(\beta_j\) as a measure of how “important” the variable \(j\) is in predicting the response \(y\). However, it’s important to remember that \(\beta_j\) is relative to the scale of the input \(x^{(j)}\) – it is the change in \(y\) for one unit change in \(x^{(j)}\). So, for example, if we change from measurements in cm to mm (i.e. multiply \(x^{(j)}\) by 10) then we will get a \(\hat{\beta}_j\) that is divided by 10:

tempBody <- body

tempBody$ABDOMEN <- tempBody$ABDOMEN * 10

ftScale = lm(BODYFAT ~ ., data = tempBody)

cat("Coefficients with Abdomen in mm:\n")## Coefficients with Abdomen in mm:## (Intercept) AGE WEIGHT HEIGHT CHEST

## -3.747573e+01 1.201695e-02 -1.392006e-01 -1.028485e-01 -8.311678e-04

## ABDOMEN HIP THIGH

## 9.684620e-02 -1.833599e-01 2.857227e-01## Coefficients with Abdomen in cm:## (Intercept) AGE WEIGHT HEIGHT CHEST

## -3.747573e+01 1.201695e-02 -1.392006e-01 -1.028485e-01 -8.311678e-04

## ABDOMEN HIP THIGH

## 9.684620e-01 -1.833599e-01 2.857227e-01For this reason, it is not uncommon to scale the explanatory variables – i.e. divide each variable by its standard deviation – before running the regression:

tempBody <- body

tempBody[, -1] <- scale(tempBody[, -1])

ftScale = lm(BODYFAT ~ ., data = tempBody)

cat("Coefficients with variables scaled:\n")## Coefficients with variables scaled:## (Intercept) AGE WEIGHT HEIGHT CHEST ABDOMEN

## 19.15079365 0.15143812 -4.09098792 -0.37671913 -0.00700714 10.44300051

## HIP THIGH

## -1.31360120 1.50003073## Coefficients on original scale:## (Intercept) AGE WEIGHT HEIGHT CHEST

## -3.747573e+01 1.201695e-02 -1.392006e-01 -1.028485e-01 -8.311678e-04

## ABDOMEN HIP THIGH

## 9.684620e-01 -1.833599e-01 2.857227e-01## Sd per variable:## AGE WEIGHT HEIGHT CHEST ABDOMEN HIP THIGH

## 12.602040 29.389160 3.662856 8.430476 10.783077 7.164058 5.249952## Ratio of scaled lm coefficient to original lm coefficient## AGE WEIGHT HEIGHT CHEST ABDOMEN HIP THIGH

## 12.602040 29.389160 3.662856 8.430476 10.783077 7.164058 5.249952Now the interpretation of the \(\beta_j\) is that per standard deviation change in the variable \(x^{j}\), what is the change in \(y\), again all other variables remaining constant.

6.4 Important measurements of the regression estimate

6.4.1 Fitted Values and Multiple \(R^2\)

Any regression equation can be used to predict the value of the

response variable given values of the explanatory variables, which we call \(\hat{y}(x)\). We can get a fitted value for any value \(x\). For

example, consider our original fitted regression equation obtained by applying

lm with bodyfat percentage against all of the variables as explanatory variables:

\[\begin{align*}

BODYFAT &= -37.48 + 0.01202*AGE - 0.1392*WEIGHT - 0.1028*HEIGHT \\

& - 0.0008312*CHEST + 0.9685*ABDOMEN - 0.1834*HIP + 0.2857*THIGH

\end{align*}\]

Suppose a person X (who is of 30 years of age, weighs 180 pounds and

is 70 inches tall) wants to find out his bodyfat percentage. Let us

say that he is able to measure his chest circumference as 90 cm,

abdomen circumference as 86 cm, hip circumference as 97 cm and thigh

circumference as 60 cm. Then he can simply use the regression equation

to predict his bodyfat percentage as:

bf.pred = -37.48 + 0.01202 * 30 - 0.1392 * 180 - 0.1028 *

70 - 0.0008312 * 90 + 0.9685 * 86 - 0.1834 * 97 +

0.2857 * 60

bf.pred## [1] 13.19699The predictions given by the fitted regression equation *for each of the observations} are known as fitted values, \(\hat{y}_i=\hat{y}(x_i)\). For example, in the bodyfat dataset, the first observation (first row) is given by:

## BODYFAT AGE WEIGHT HEIGHT CHEST ABDOMEN HIP THIGH

## 1 12.3 23 154.25 67.75 93.1 85.2 94.5 59The observed value of the response (bodyfat percentage) for this individual is 12.3 %. The prediction for this person’s response given by the regression equation is

-37.48 + 0.01202 * body[1, "AGE"] - 0.1392 * body[1,

"WEIGHT"] - 0.1028 * body[1, "HEIGHT"] - 0.0008312 *

body[1, "CHEST"] + 0.9685 * body[1, "ABDOMEN"] -

0.1834 * body[1, "HIP"] + 0.2857 * body[1, "THIGH"]## [1] 16.32398Therefore the fitted value for the first observation is 16.424%. R directly calculates all fitted values and they are stored in the \(lm()\) object. You can obtain these via:

## 1 2 3 4 5 6

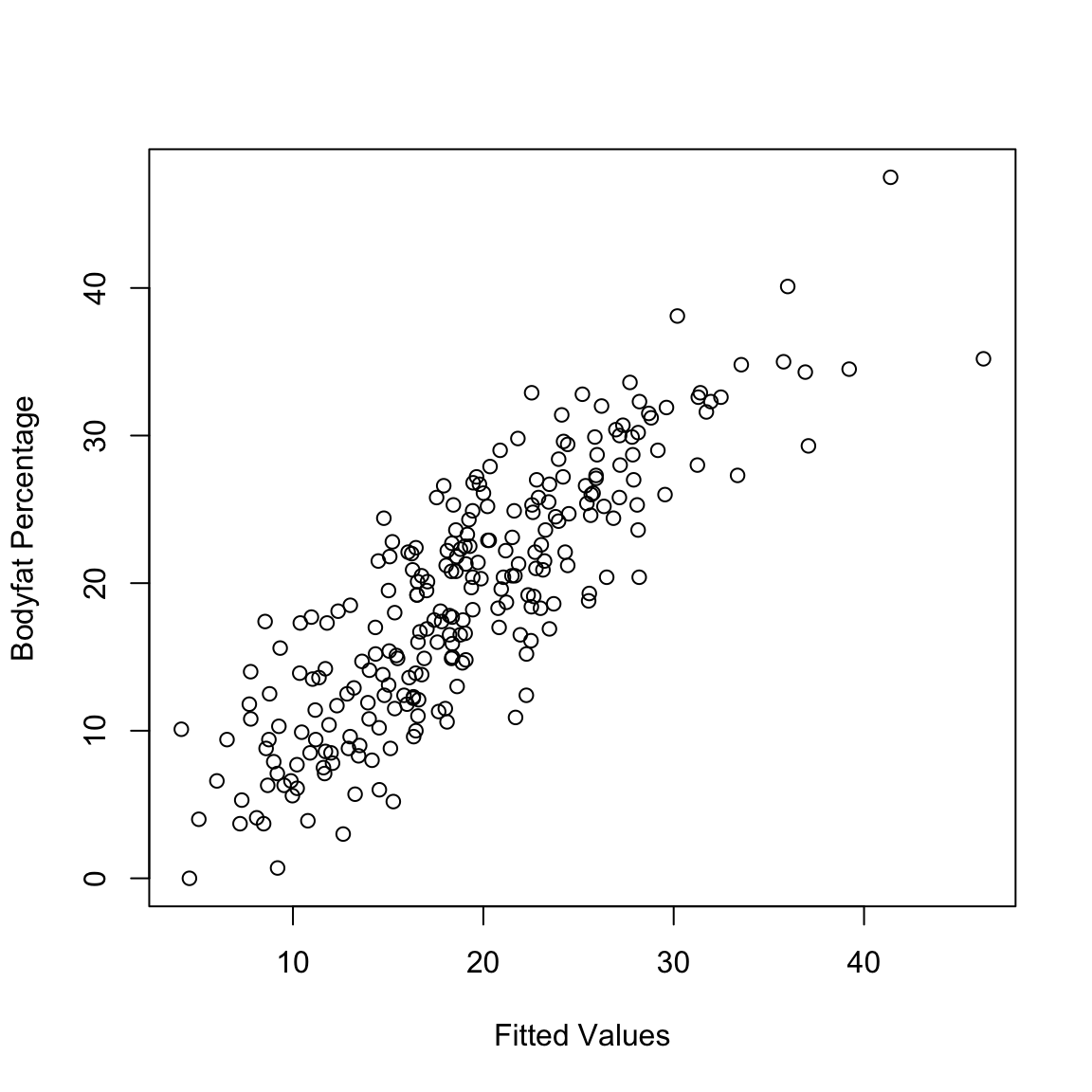

## 16.32670 10.22019 18.42600 11.89502 25.97564 16.28529If the regression equation fits the data well, we would expect the fitted values to be close to the observed responses. We can check this by just plotting the fitted values against the observed response values.

We can quantify how good of a fit our model is by taking the correlation between these two values. Specifically, the square of the correlation of \(y\) and \(\hat{y}\) is known as the Coefficient of

Determination or Multiple \(R^2\) or simply \(R^2\):

\[\begin{equation*}

R^2 = \left(cor(y_i,\hat{y}_i ) \right)^2.

\end{equation*}\]

This is an important

and widely used measure of the effectiveness of the regression

equation and given in our summary the lm fit.

## [1] 0.7265596##

## Call:

## lm(formula = BODYFAT ~ ., data = body)

##

## Residuals:

## Min 1Q Median 3Q Max

## -11.0729 -3.2387 -0.0782 3.0623 10.3611

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -3.748e+01 1.449e+01 -2.585 0.01031 *

## AGE 1.202e-02 2.934e-02 0.410 0.68246

## WEIGHT -1.392e-01 4.509e-02 -3.087 0.00225 **

## HEIGHT -1.028e-01 9.787e-02 -1.051 0.29438

## CHEST -8.312e-04 9.989e-02 -0.008 0.99337

## ABDOMEN 9.685e-01 8.531e-02 11.352 < 2e-16 ***

## HIP -1.834e-01 1.448e-01 -1.267 0.20648

## THIGH 2.857e-01 1.362e-01 2.098 0.03693 *

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 4.438 on 244 degrees of freedom

## Multiple R-squared: 0.7266, Adjusted R-squared: 0.7187

## F-statistic: 92.62 on 7 and 244 DF, p-value: < 2.2e-16A high value of \(R^2\) means that the fitted values (given by the fitted regression equation) are close to the observed values and hence indicates that the regression equation fits the data well. A low value, on the other hand, means that the fitted values are far from the observed values and hence the regression line does not fit the data well.

Note that \(R^2\) has no units (because its a correlation). In other words, it is scale-free.

6.4.2 Residuals and Residual Sum of Squares (RSS)

For every point in the scatter the error we make in our prediction on a specific observation is the residual and is

defined as

\[\begin{equation*}

r_i = y_i-\hat{y}_i

\end{equation*}\]

Residuals are again so important that \(lm()\) automatically calculates them for us and they are contained in the lm object created.

## 1 2 3 4 5 6

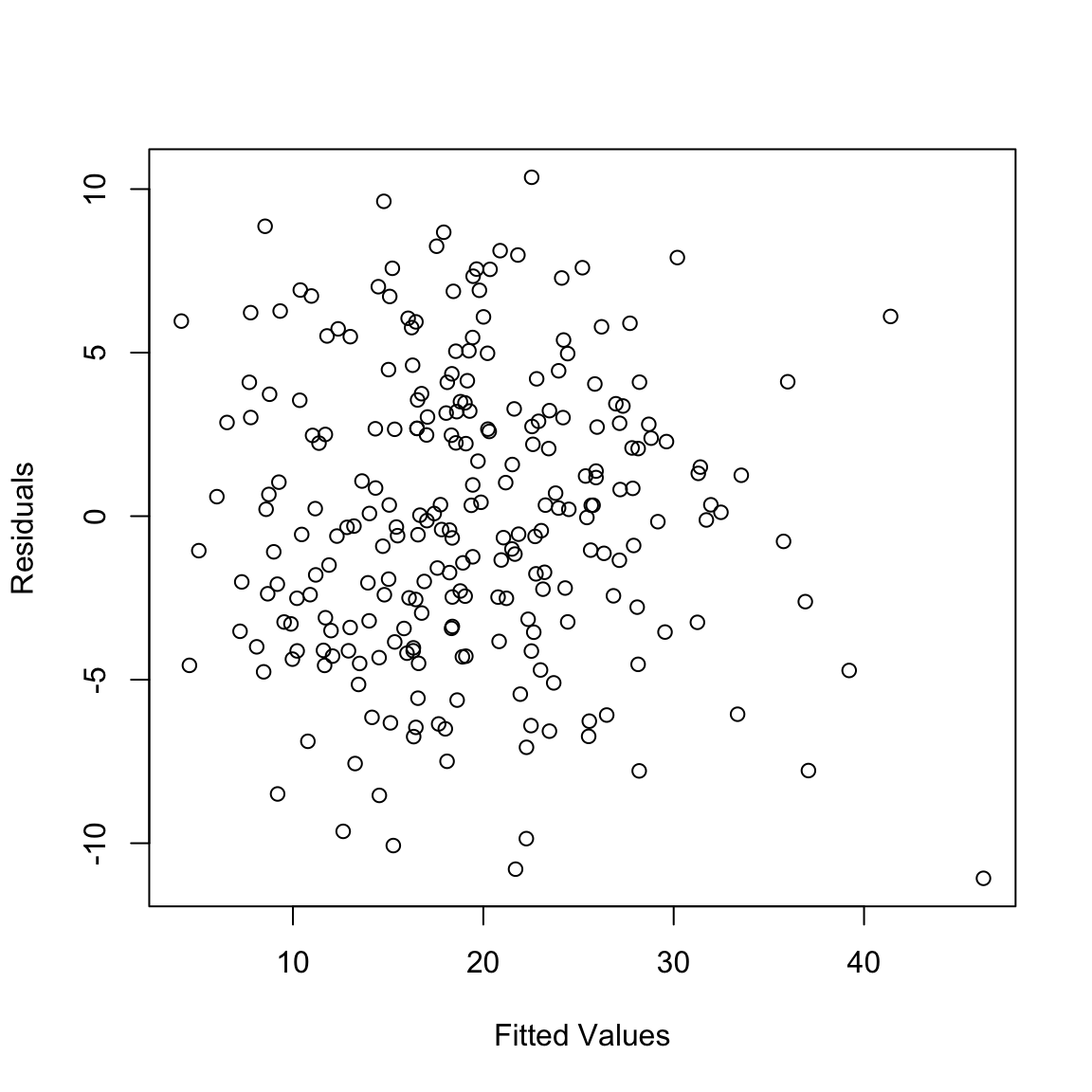

## -4.026695 -4.120189 6.874004 -1.495017 2.724355 4.614712A common way of looking at the residuals is to plot them against the fitted values.

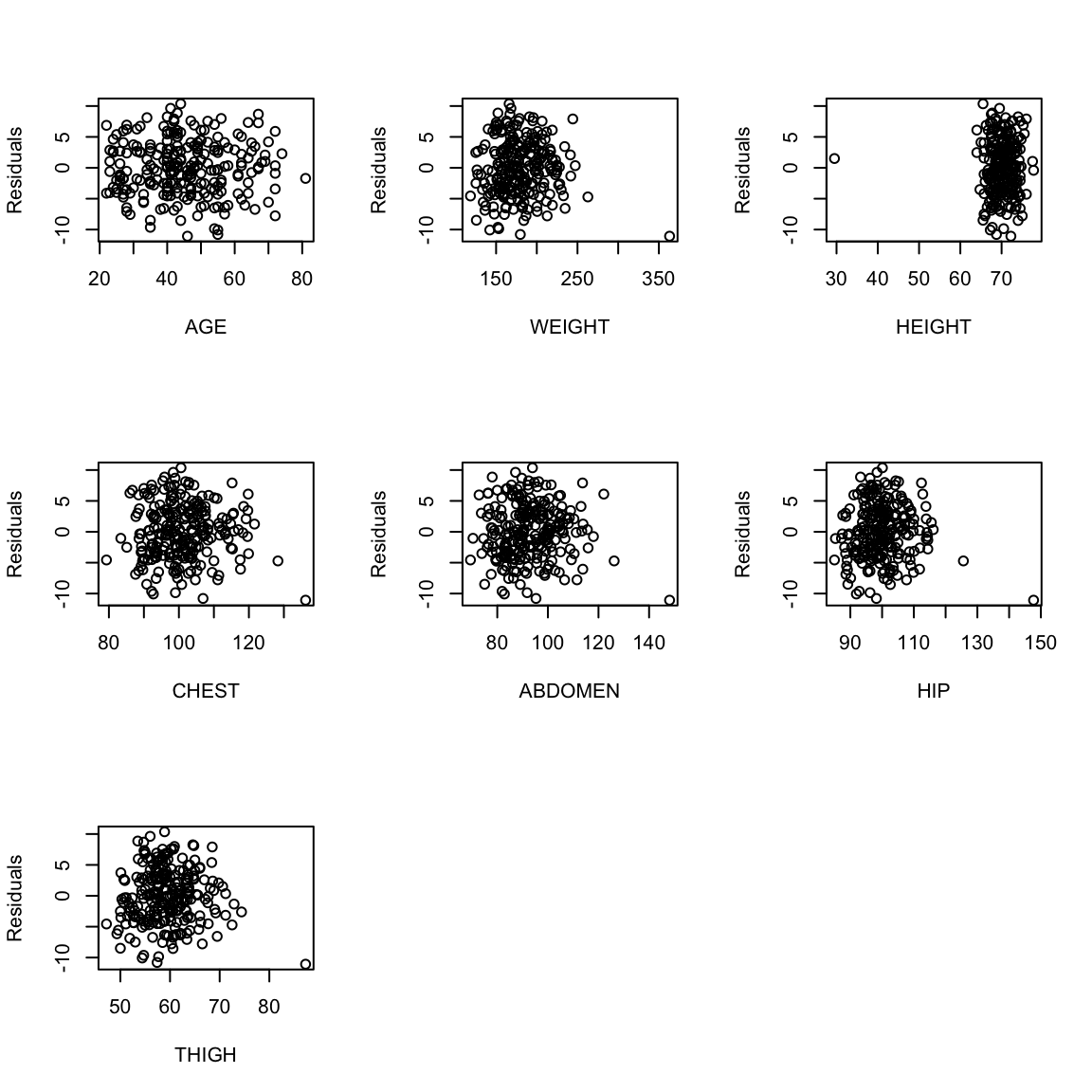

One can also plot the residuals against each of the explanatory

variables (note we didn’t remove the outliers in our regression so we include them in our plots).

One can also plot the residuals against each of the explanatory

variables (note we didn’t remove the outliers in our regression so we include them in our plots).

par(mfrow = c(3, 3))

for (i in 2:8) {

plot(body[, i], ft$residuals, xlab = names(body)[i],

ylab = "Residuals")

}

par(mfrow = c(1, 1))

The residuals represent what is left in the response (\(y\)) after all the linear effects of the explanatory variables are taken out.

One consequence of this is that the residuals are uncorrelated with every explanatory variable. We can check this in easily in the body fat example.

for (i in 2:8) {

cat("Correlation with", names(body)[i], ":\t")

cat(cor(body[, i], residuals(ft)), "\n")

}## Correlation with AGE : -1.754044e-17

## Correlation with WEIGHT : 4.71057e-17

## Correlation with HEIGHT : -1.720483e-15

## Correlation with CHEST : -4.672628e-16

## Correlation with ABDOMEN : -7.012368e-16

## Correlation with HIP : -8.493675e-16

## Correlation with THIGH : -5.509094e-16Moreover, as we discussed in simple regression, the residuals always have mean zero:

## [1] 2.467747e-16Again, these are automatic properties of any least-squares regression. This is not evidence that you have a good fit or that model makes sense!

Also, if one were to fit a regression equation to the residuals in terms of the same explanatory variables, then the fitted regression equation will have all coefficients exactly equal to zero:

m.res = lm(ft$residuals ~ body$AGE + body$WEIGHT +

body$HEIGHT + body$CHEST + body$ABDOMEN + body$HIP +

body$THIGH)

summary(m.res)##

## Call:

## lm(formula = ft$residuals ~ body$AGE + body$WEIGHT + body$HEIGHT +

## body$CHEST + body$ABDOMEN + body$HIP + body$THIGH)

##

## Residuals:

## Min 1Q Median 3Q Max

## -11.0729 -3.2387 -0.0782 3.0623 10.3611

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 2.154e-14 1.449e+01 0 1

## body$AGE 1.282e-17 2.934e-02 0 1

## body$WEIGHT 1.057e-16 4.509e-02 0 1

## body$HEIGHT -1.509e-16 9.787e-02 0 1

## body$CHEST 1.180e-16 9.989e-02 0 1

## body$ABDOMEN -2.452e-16 8.531e-02 0 1

## body$HIP -1.284e-16 1.448e-01 0 1

## body$THIGH -1.090e-16 1.362e-01 0 1

##

## Residual standard error: 4.438 on 244 degrees of freedom

## Multiple R-squared: 6.384e-32, Adjusted R-squared: -0.02869

## F-statistic: 2.225e-30 on 7 and 244 DF, p-value: 1If the regression equation fits the data well, the residuals are supposed to be small. One popular way of assessing the size of the residuals is to compute their sum of squares. This quantity is called the Residual Sum of Squares (RSS).

## [1] 4806.806Note that RSS depends on the units in which the response variable is measured.

Relationship to \(R^2\)

There is a very simple relationship between RSS and \(R^2\) (recall that \(R^2\) is the square of the correlation between the response values and the fitted values): \[\begin{equation*} R^2 = 1 - \frac{RSS}{TSS} \end{equation*}\] where TSS stands for Total Sum of Squares and is defined as \[\begin{equation*} TSS = \sum_{i=1}^n \left(y_i - \bar{y} \right)^2. \end{equation*}\] TSS is just the variance of y without the \(1/(n-1)\) term.

It is easy to verify this formula in R.

## [1] 4806.806## [1] 0.7265596##

## Call:

## lm(formula = BODYFAT ~ ., data = body)

##

## Residuals:

## Min 1Q Median 3Q Max

## -11.0729 -3.2387 -0.0782 3.0623 10.3611

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -3.748e+01 1.449e+01 -2.585 0.01031 *

## AGE 1.202e-02 2.934e-02 0.410 0.68246

## WEIGHT -1.392e-01 4.509e-02 -3.087 0.00225 **

## HEIGHT -1.028e-01 9.787e-02 -1.051 0.29438

## CHEST -8.312e-04 9.989e-02 -0.008 0.99337

## ABDOMEN 9.685e-01 8.531e-02 11.352 < 2e-16 ***

## HIP -1.834e-01 1.448e-01 -1.267 0.20648

## THIGH 2.857e-01 1.362e-01 2.098 0.03693 *

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 4.438 on 244 degrees of freedom

## Multiple R-squared: 0.7266, Adjusted R-squared: 0.7187

## F-statistic: 92.62 on 7 and 244 DF, p-value: < 2.2e-16If we did not have any explanatory variables, then we would predict the value of bodyfat percentage for any individual by simply the mean of the bodyfat values in our sample. The total squared error for this prediction is given by TSS. On the other hand, the total squared error for the prediction using linear regression based on the explanatory variables is given by RSS. Therefore \(1 - R^2\) represents the reduction in the squared error because of the explanatory variables.

6.4.3 Behaviour of RSS (and \(R^2\)) when variables are added or removed from the regression equation

The value of RSS always increases when one or more explanatory variables are removed from the regression equation. For example, suppose that we remove the variable abdomen circumference from the regression equation. The new RSS will then be:

ft.1 = lm(BODYFAT ~ AGE + WEIGHT + HEIGHT + CHEST +

HIP + THIGH, data = body)

rss.ft1 = summary(ft.1)$r.squared

rss.ft1## [1] 0.5821305## Length Class Mode

## 0 NULL NULLNotice that there is a quite a lot of increase in the RSS. What if we had kept ABDOMEN in the model but dropped the variable CHEST?

ft.2 = lm(BODYFAT ~ AGE + WEIGHT + HEIGHT + ABDOMEN +

HIP + THIGH, data = body)

rss.ft2 = summary(ft.2)$r.squared

rss.ft2## [1] 0.7265595## [1] 4806.806The RSS again increases but by a very very small amount. This therefore suggests that Abdomen circumference is a more important variable in this regression compared to Chest circumference.

The moral of this exercise is the following. The RSS always increases when variables are dropped from the regression equation. However the amount of increase varies for different variables. We can understand the importance of variables in a multiple regression equation by noting the amount by which the RSS increases when the individual variables are dropped. We will come back to this point while studying inference in the multiple regression model.

Because RSS has a direct relation to \(R^2\) via \(R^2 = 1 - (RSS/TSS)\), one can see \(R^2\) decreases when variables are removed from the model. However the amount of decrease will be different for different variables. For example, in the body fat dataset, after removing the abdomen circumference variable, \(R^2\) changes to:

ft.1 = lm(BODYFAT ~ AGE + WEIGHT + HEIGHT + CHEST +

HIP + THIGH, data = body)

R2.ft1 = summary(ft.1)$r.squared

R2.ft1## [1] 0.5821305## [1] 0.7265596Notice that there is a lot of decrease in \(R^2\). What happens if the variable Chest circumference is dropped.

ft.2 = lm(BODYFAT ~ AGE + WEIGHT + HEIGHT + ABDOMEN +

HIP + THIGH, data = body)

R2.ft2 = summary(ft.2)$r.squared

R2.ft2## [1] 0.7265595## [1] 0.7265596There is now a very very small decrease.

6.4.4 Residual Degrees of Freedom and Residual Standard Error

In a regression with \(p\) explanatory variables, the residual degrees of freedom is given by \(n - p - 1\) (recall that \(n\) is the number of observations). This can be thought of as the effective number of residuals. Even though there are \(n\) residuals, they are supposed to satisfy \(p+1\) exact equations (they sum to zero and they have zero correlation with each of the \(p\) explanatory variables).

The Residual Standard Error is defined as: \[\begin{equation*} \sqrt{\frac{\text{Residual Sum of Squares}}{\text{Residual Degrees of Freedom}}} \end{equation*}\] This can be interpreted as the average magnitude of an individual residual and can be used to assess the sizes of residuals (in particular, to find and identify large residual values).

For illustration,

ft = lm(BODYFAT ~ AGE + WEIGHT + HEIGHT + CHEST + ABDOMEN +

HIP + THIGH, data = body)

n = nrow(body)

p = 7

rs.df = n - p - 1

rs.df## [1] 244ft = lm(BODYFAT ~ AGE + WEIGHT + HEIGHT + CHEST + ABDOMEN +

HIP + THIGH, data = body)

rss = sum((ft$residuals)^2)

rse = sqrt(rss/rs.df)

rse## [1] 4.438471Both of these are printed in the summary function in R:

##

## Call:

## lm(formula = BODYFAT ~ AGE + WEIGHT + HEIGHT + CHEST + ABDOMEN +

## HIP + THIGH, data = body)

##

## Residuals:

## Min 1Q Median 3Q Max

## -11.0729 -3.2387 -0.0782 3.0623 10.3611

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -3.748e+01 1.449e+01 -2.585 0.01031 *

## AGE 1.202e-02 2.934e-02 0.410 0.68246

## WEIGHT -1.392e-01 4.509e-02 -3.087 0.00225 **

## HEIGHT -1.028e-01 9.787e-02 -1.051 0.29438

## CHEST -8.312e-04 9.989e-02 -0.008 0.99337

## ABDOMEN 9.685e-01 8.531e-02 11.352 < 2e-16 ***

## HIP -1.834e-01 1.448e-01 -1.267 0.20648

## THIGH 2.857e-01 1.362e-01 2.098 0.03693 *

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 4.438 on 244 degrees of freedom

## Multiple R-squared: 0.7266, Adjusted R-squared: 0.7187

## F-statistic: 92.62 on 7 and 244 DF, p-value: < 2.2e-166.5 Multiple Regression With Categorical Explanatory Variables

In many instances of regression, some of the explanatory variables are categorical (note that the response variable is always continuous). For example, consider the (short version of the) college dataset that you have already encountered.

We can do a regression here with the retention rate (variable name

RET-FT4) as the response and all other variables as the

explanatory variables. Note that one of the explanatory variables

(variable name CONTROL) is categorical. This variable represents whether

the college is public (1), private non-profit (2) or private for

profit (3). Dealing with such categorical variables is a little

tricky. To illustrate the ideas here, let us focus on a regression for

the retention rate based on just two explanatory variables: the

out-of-state tuition and the categorical variable CONTROL.

The important thing to note about the variable CONTROL is that

its levels 1, 2 and 3 are completely arbitrary and have no

particular meaning. For example, we could have called its levels \(A\),

\(B\), \(C\) or \(Pu\), \(Pr-np\), \(Pr-fp\) as well. If we use the \(lm()\)

function in the usual way with TUITIONFEE and CONTROL

as the explanatory variables, then R will treat CONTROL as a

continuous variable which does not make sense:

##

## Call:

## lm(formula = RET_FT4 ~ TUITIONFEE_OUT + CONTROL, data = scorecard)

##

## Residuals:

## Min 1Q Median 3Q Max

## -0.69041 -0.04915 0.00516 0.05554 0.33165

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 6.661e-01 9.265e-03 71.90 <2e-16 ***

## TUITIONFEE_OUT 9.405e-06 3.022e-07 31.12 <2e-16 ***

## CONTROL -8.898e-02 5.741e-03 -15.50 <2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.08741 on 1238 degrees of freedom

## Multiple R-squared: 0.4391, Adjusted R-squared: 0.4382

## F-statistic: 484.5 on 2 and 1238 DF, p-value: < 2.2e-16The regression coefficient for CONTROL has the usual

interpretation (if CONTROL increases by one unit, \(\dots\)) which

does not make much sense because CONTROL is categorical and so

increasing it by one unit is nonsensical. So everything about this regression is wrong (and we shouldn’t interpret anything from the inference here).

You can check that R is

treating CONTROL as a numeric variable by:

## [1] TRUEThe correct way to deal with categorical variables in R is to treat them as factors:

##

## Call:

## lm(formula = RET_FT4 ~ TUITIONFEE_OUT + as.factor(CONTROL), data = scorecard)

##

## Residuals:

## Min 1Q Median 3Q Max

## -0.68856 -0.04910 0.00505 0.05568 0.33150

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 5.765e-01 7.257e-03 79.434 < 2e-16 ***

## TUITIONFEE_OUT 9.494e-06 3.054e-07 31.090 < 2e-16 ***

## as.factor(CONTROL)2 -9.204e-02 5.948e-03 -15.474 < 2e-16 ***

## as.factor(CONTROL)3 -1.218e-01 3.116e-02 -3.909 9.75e-05 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.08732 on 1237 degrees of freedom

## Multiple R-squared: 0.4408, Adjusted R-squared: 0.4394

## F-statistic: 325 on 3 and 1237 DF, p-value: < 2.2e-16We can make this output a little better by fixing up the factor, rather than having R make it a factor on the fly:

scorecard$CONTROL <- factor(scorecard$CONTROL, levels = c(1,

2, 3), labels = c("public", "private", "private for-profit"))

req = lm(RET_FT4 ~ TUITIONFEE_OUT + CONTROL, data = scorecard)

summary(req)##

## Call:

## lm(formula = RET_FT4 ~ TUITIONFEE_OUT + CONTROL, data = scorecard)

##

## Residuals:

## Min 1Q Median 3Q Max

## -0.68856 -0.04910 0.00505 0.05568 0.33150

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 5.765e-01 7.257e-03 79.434 < 2e-16 ***

## TUITIONFEE_OUT 9.494e-06 3.054e-07 31.090 < 2e-16 ***

## CONTROLprivate -9.204e-02 5.948e-03 -15.474 < 2e-16 ***

## CONTROLprivate for-profit -1.218e-01 3.116e-02 -3.909 9.75e-05 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.08732 on 1237 degrees of freedom

## Multiple R-squared: 0.4408, Adjusted R-squared: 0.4394

## F-statistic: 325 on 3 and 1237 DF, p-value: < 2.2e-16What do you notice that is different than our wrong output when the CONTROL variable was treated as numeric?

Why is the coefficient of TUITIONFEE so small?

6.5.1 Separate Intercepts: The coefficients of Categorical/Factor variables

What do the multiple coefficients mean for the variable CONTROL?

This equation can be written in full as:

\[\begin{equation*}

RET = 0.5765 + 9.4\times 10^{-6}*TUITIONFEE

-0.0092*I\left(CONTROL = 2 \right) -

0.1218*I\left(CONTROL = 3 \right).

\end{equation*}\]

The variable \(I\left(CONTROL = 2 \right)\) is the indicator function, which takes the value 1 if the

college has CONTROL equal to 2 (i.e., if the college is private

non-profit) and 0 otherwise. Similarly the variable \(I\left(CONTROL = 3 \right)\) takes the value 1 if the college has CONTROL equal to 3

(i.e., if the college is private for profit) and 0

otherwise. Variables which take only the two values 0 and 1 are called

indicator variables.

Note that the variable \(I\left(CONTROL = 1 \right)\) does not appear in the regression equation . This means that the level 1 (i.e., the college is public) is the baseline level here and the effects of \(-0.0092\) and \(0.1218\) for private for-profit and private non-profit colleges respectively should be interpreted relative to public colleges.

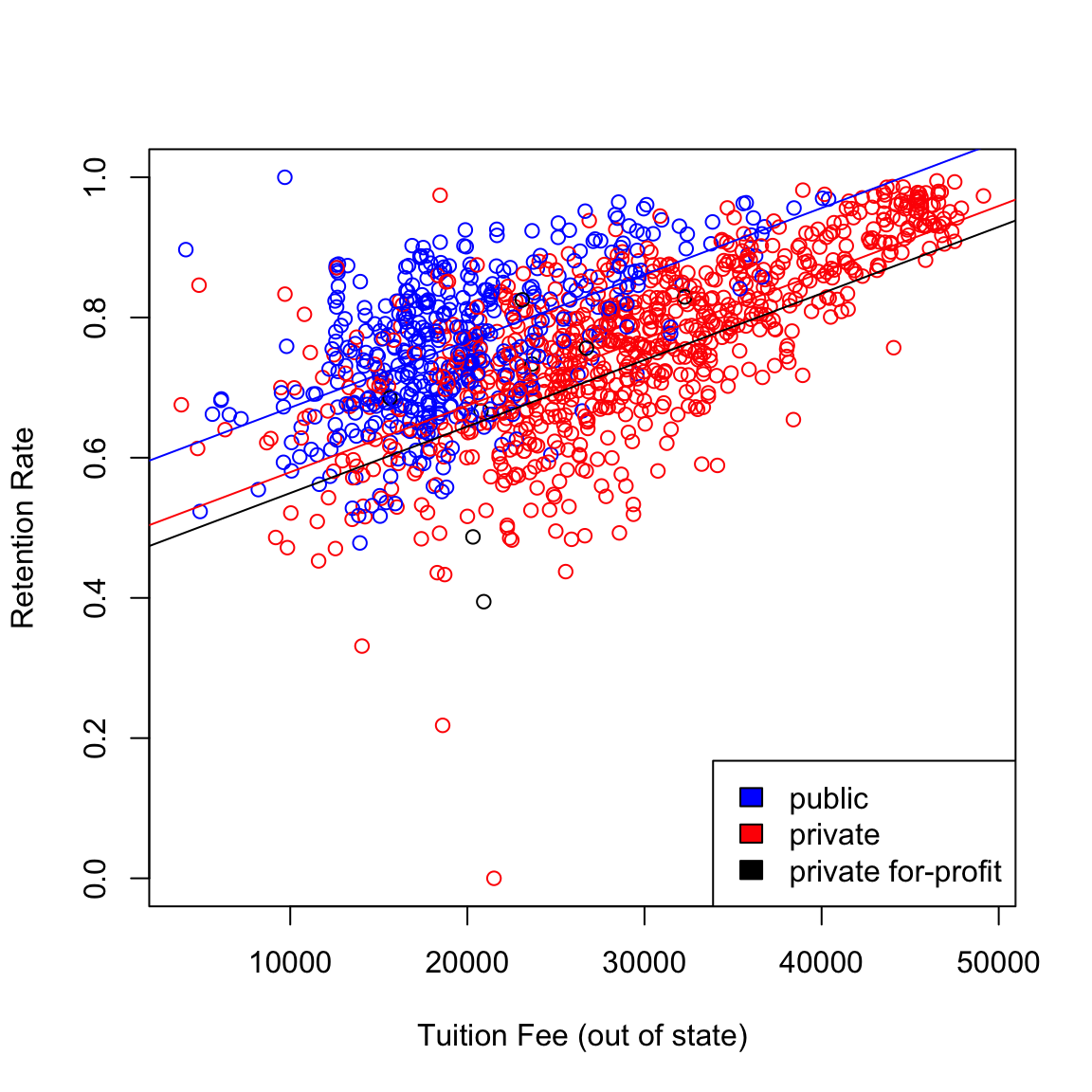

The regression equation can effectively be broken down into three equations. For public colleges, the two indicator variables in are zero and the equation becomes: \[\begin{equation*} RET = 0.5765 + 9.4\times 10^{-6}*TUITIONFEE. \end{equation*}\] For private non-profit colleges, the equation becomes \[\begin{equation*} RET = 0.5673 + 9.4\times 10^{-6}*TUITIONFEE. \end{equation*}\] and for private for-profit colleges, \[\begin{equation*} RET = 0.4547 + 9.4\times 10^{-6}*TUITIONFEE. \end{equation*}\]

Note that the coefficient of TUITIONFEE is the same in each of these

equations (only the intercept changes). We can plot a scatterplot

together with all these lines.

cols <- c("blue", "red", "black")

plot(RET_FT4 ~ TUITIONFEE_OUT, data = scorecard, xlab = "Tuition Fee (out of state)",

ylab = "Retention Rate", col = cols[scorecard$CONTROL])

baseline <- coef(req)[["(Intercept)"]]

slope <- coef(req)[["TUITIONFEE_OUT"]]

for (ii in 1:nlevels(scorecard$CONTROL)) {

lev <- levels(scorecard$CONTROL)[[ii]]

if (ii == 1) {

abline(a = baseline, b = slope, col = cols[[ii]])

}

else {

abline(a = baseline + coef(req)[[ii + 1]],

b = slope, col = cols[[ii]])

}

}

legend("bottomright", levels(scorecard$CONTROL), fill = cols)

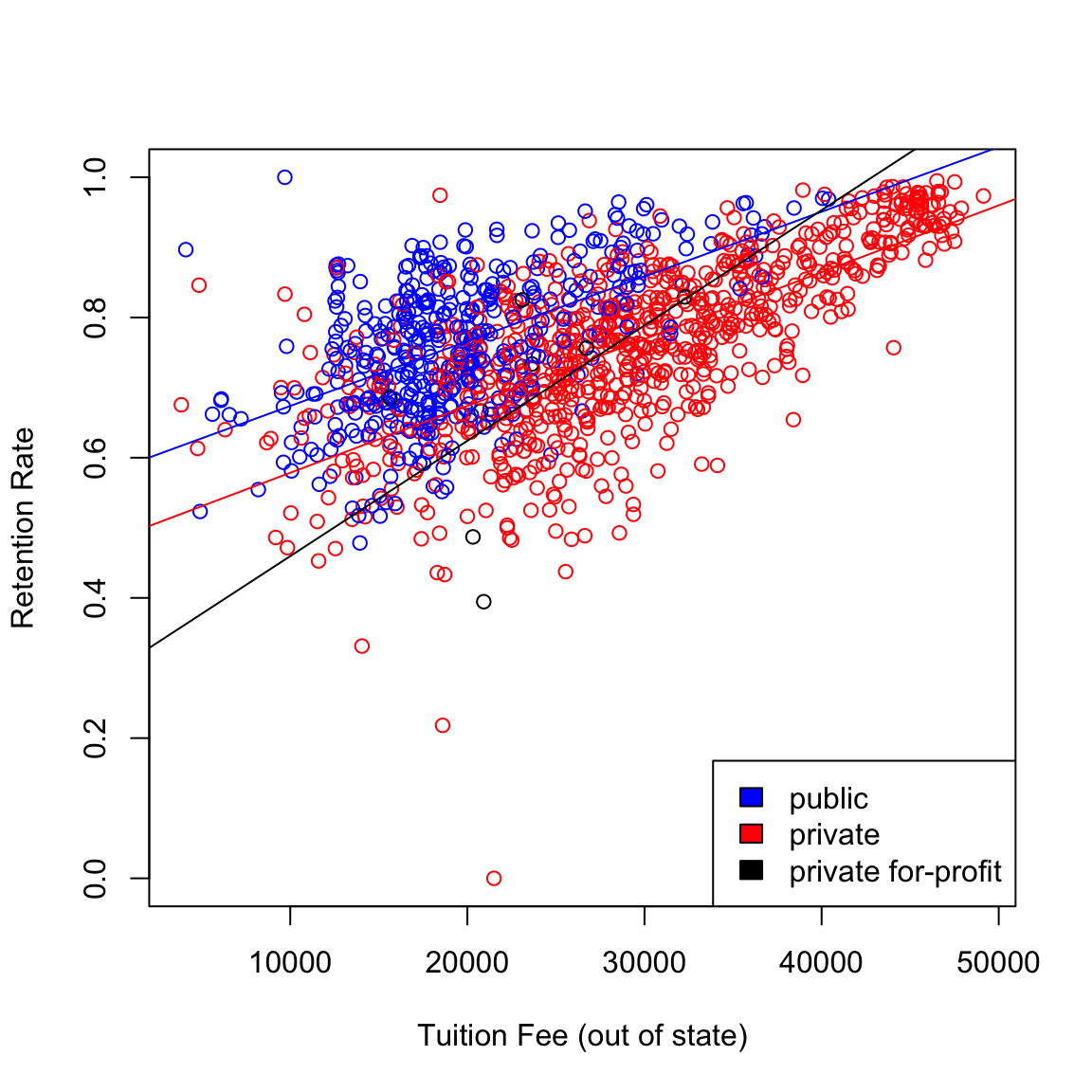

6.5.2 Separate Slopes: Interactions

What if we want these regression equations to have different slopes as well as different intercepts for each of the types of colleges?

Intuitively, we can do separate regressions for each of the three groups given by

the CONTROL variable.

Alternatively, we can do this in multiple

regression by adding an interaction variable between CONTROL and

TUITIONFEE as follows:

req.1 = lm(RET_FT4 ~ TUITIONFEE_OUT + CONTROL + TUITIONFEE_OUT:CONTROL,

data = scorecard)

summary(req.1)##

## Call:

## lm(formula = RET_FT4 ~ TUITIONFEE_OUT + CONTROL + TUITIONFEE_OUT:CONTROL,

## data = scorecard)

##

## Residuals:

## Min 1Q Median 3Q Max

## -0.68822 -0.04982 0.00491 0.05555 0.32900

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 5.814e-01 1.405e-02 41.372 < 2e-16

## TUITIONFEE_OUT 9.240e-06 6.874e-07 13.441 < 2e-16

## CONTROLprivate -9.830e-02 1.750e-02 -5.617 2.4e-08

## CONTROLprivate for-profit -2.863e-01 1.568e-01 -1.826 0.0681

## TUITIONFEE_OUT:CONTROLprivate 2.988e-07 7.676e-07 0.389 0.6971

## TUITIONFEE_OUT:CONTROLprivate for-profit 7.215e-06 6.716e-06 1.074 0.2829

##

## (Intercept) ***

## TUITIONFEE_OUT ***

## CONTROLprivate ***

## CONTROLprivate for-profit .

## TUITIONFEE_OUT:CONTROLprivate

## TUITIONFEE_OUT:CONTROLprivate for-profit

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.08734 on 1235 degrees of freedom

## Multiple R-squared: 0.4413, Adjusted R-squared: 0.4391

## F-statistic: 195.1 on 5 and 1235 DF, p-value: < 2.2e-16Note that this regression equation has two more coefficients compared to the previous regression (which did not have the interaction term). The two additional variables are the product of the terms of each of the previous terms: \(TUITIONFEE * I(CONTROL = 2)\) and \(TUITIONFEE * I(CONTROL = 3)\).

The presence of these product terms means that three separate slopes per each level of the factor are being fit here, why?

Alternatively, this regression with interaction can also be done in R via:

##

## Call:

## lm(formula = RET_FT4 ~ TUITIONFEE_OUT * CONTROL, data = scorecard)

##

## Residuals:

## Min 1Q Median 3Q Max

## -0.68822 -0.04982 0.00491 0.05555 0.32900

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 5.814e-01 1.405e-02 41.372 < 2e-16

## TUITIONFEE_OUT 9.240e-06 6.874e-07 13.441 < 2e-16

## CONTROLprivate -9.830e-02 1.750e-02 -5.617 2.4e-08

## CONTROLprivate for-profit -2.863e-01 1.568e-01 -1.826 0.0681

## TUITIONFEE_OUT:CONTROLprivate 2.988e-07 7.676e-07 0.389 0.6971

## TUITIONFEE_OUT:CONTROLprivate for-profit 7.215e-06 6.716e-06 1.074 0.2829

##

## (Intercept) ***

## TUITIONFEE_OUT ***

## CONTROLprivate ***

## CONTROLprivate for-profit .

## TUITIONFEE_OUT:CONTROLprivate

## TUITIONFEE_OUT:CONTROLprivate for-profit

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.08734 on 1235 degrees of freedom

## Multiple R-squared: 0.4413, Adjusted R-squared: 0.4391

## F-statistic: 195.1 on 5 and 1235 DF, p-value: < 2.2e-16The three separate regressions can be plotted in one plot as before.

cols <- c("blue", "red", "black")

plot(RET_FT4 ~ TUITIONFEE_OUT, data = scorecard, xlab = "Tuition Fee (out of state)",

ylab = "Retention Rate", col = cols[scorecard$CONTROL])

baseline <- coef(req.1)[["(Intercept)"]]

slope <- coef(req.1)[["TUITIONFEE_OUT"]]

for (ii in 1:nlevels(scorecard$CONTROL)) {

lev <- levels(scorecard$CONTROL)[[ii]]

if (ii == 1) {

abline(a = baseline, b = slope, col = cols[[ii]])

}

else {

abline(a = baseline + coef(req.1)[[ii + 1]],

b = slope + coef(req.1)[[ii + 3]], col = cols[[ii]])

}

}

legend("bottomright", levels(scorecard$CONTROL), fill = cols)

Interaction terms make regression equations complicated (have more variables) and also slightly harder to interpret although, in some situations, they really improve predictive power. In this particular example, note that the multiple \(R^2\) only increased from 0.4408 to 0.4413 after adding the interaction terms. This small increase means that the interaction terms are not really adding much to the regression equation so we are better off using the previous model with no interaction terms.

To get more practice with regressions having categorical variables, let us consider the bike sharing dataset discussed above.

Let us fit a basic regression equation with casual (number of

bikes rented by casual users hourly) as the response variable and the

explanatory variables being atemp (normalized feeling

temperature), workingday. For this dataset, I’ve already encoded the categorical variables as factors.

## Min. 1st Qu. Median Mean 3rd Qu. Max.

## 0.07907 0.33784 0.48673 0.47435 0.60860 0.84090## No Yes

## 231 500## Clear/Partly Cloudy Light Rain/Snow Misty

## 463 21 247We fit the regression equation with a different shift in the mean for each level:

##

## Call:

## lm(formula = casual ~ atemp + workingday + weathersit, data = bike)

##

## Residuals:

## Min 1Q Median 3Q Max

## -1456.76 -243.97 -22.93 166.81 1907.20

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 350.31 55.11 6.357 3.63e-10 ***

## atemp 2333.77 97.48 23.942 < 2e-16 ***

## workingdayYes -794.11 33.95 -23.388 < 2e-16 ***

## weathersitLight Rain/Snow -523.79 95.23 -5.500 5.26e-08 ***

## weathersitMisty -150.79 33.75 -4.468 9.14e-06 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 425.2 on 726 degrees of freedom

## Multiple R-squared: 0.6186, Adjusted R-squared: 0.6165

## F-statistic: 294.3 on 4 and 726 DF, p-value: < 2.2e-16How are the coefficients in the above regression interpreted?

There are interactons that one

can add here too. For example, I can add an interaction between

workingday and atemp:

##

## Call:

## lm(formula = casual ~ atemp + workingday + weathersit + workingday:atemp,

## data = bike)

##

## Residuals:

## Min 1Q Median 3Q Max

## -1709.76 -198.09 -55.12 152.88 1953.07

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -276.22 77.48 -3.565 0.000388 ***

## atemp 3696.41 155.56 23.762 < 2e-16 ***

## workingdayYes 166.71 94.60 1.762 0.078450 .

## weathersitLight Rain/Snow -520.78 88.48 -5.886 6.05e-09 ***

## weathersitMisty -160.28 31.36 -5.110 4.12e-07 ***

## atemp:workingdayYes -2052.09 190.48 -10.773 < 2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 395.1 on 725 degrees of freedom

## Multiple R-squared: 0.6712, Adjusted R-squared: 0.6689

## F-statistic: 296 on 5 and 725 DF, p-value: < 2.2e-16What is the interpretation of the coefficients now?

6.6 Inference in Multiple Regression

So far, we have learned how to fit multiple regression equations to observed data and interpret the coefficient. Inference is necessary for answering questions such as: “Is the observed relationship between the response and the explanatory variables real or is it merely caused by sampling variability?”

We will again consider both parametric models and resampling techniques for inference.

6.6.1 Parametric Models for Inference

There is a response variable \(y\) and \(p\) explanatory variables \(x^{(1)}, \dots, x^{(p)}\). The data generation model is similar to that of simple regression: \[\begin{equation*} y = \beta_0 + \beta_1 x^{(1)} + \dots + \beta_p x^{(p)} + e. \end{equation*}\] The numbers \(\beta_0, \dots, \beta_p\) are the parameters of the model and unknown.

The error \(e\) is the only random part of the model, and we make the same assumptions as in simple regression:

- \(e_i\) are independent for each observation \(i\)

- \(e_i\) all have the same distribution with mean \(0\) and variance \(\sigma^2\)

- \(e_i\) follow a normal distribution

We could write this more succinctly as \[e_i \text{ are i.i.d } N(0,\sigma^2)\] but it’s helpful to remember that these are separate assumptions, so we can talk about which are the most important.

This means that under this model, \[y \sim N(\beta_0 + \beta_1 x^{(1)} + \dots + \beta_p x^{(p)}, \sigma^2)\] i.e. the observed \(y_i\) are normal and independent from each other, but each with a different mean, which depends on \(x_i\) (so the \(y_i\) are NOT i.i.d. because not identically distributed).

Estimates

The numbers \(\beta_0, \dots, \beta_p\) capture the true relationship between \(y\) and \(x_1, \dots, x_p\). Also unknown is the quantity \(\sigma^2\) which is the variance of the unknown \(e_i\). When we fit a regression equation to a dataset via \(lm()\) in R, we obtain estimates \(\hat{\beta}_j\) of the unknown \(\beta_j\).

The residual \(r_i\) serve as natural proxies for the unknown random errors \(e_i\). Therefore a natural estimate for the error standard deviation \(\sigma\) is the Residual Standard Error, \[\hat{\sigma}^2=\frac{1}{n-p-1}\sum r_i^2=\frac{1}{n-p-1} RSS\] Notice this is the same as our previous equation from simple regression, only now we are using \(n-p-1\) as our correction to make the estimate unbiased.

6.6.2 Global Fit

The most obvious question is the global question: are these variables cummulatively any good in predicting \(y\)? This can be restated as, whether you could predict \(y\) just as well if didn’t use any of the \(x^{(j)}\) variables.

If we didn’t use any of the variables, what is our best “prediction” of \(y\)?

So our question can be phrased as whether our prediction that we estimated, \(\hat{y}(x)\), is better than just \(\bar{y}\) in predicting \(y\).

Equivalently, we can think that our null hypothesis is \[H_0: \beta_j=0, \text{for all }j\]

6.6.2.1 Parametric Test of Global Fit

The parametric test that is commonly used for assessing the global fit is a F-test. A common way to assess the fit, we have just said is either large \(R^2\) or small \(RSS=\sum_{i=1}^{n}r_i^2\).

We can also think our global test is an implicit test for comparing two possible prediction models

Model 0: No variables, just predict \(\bar{y}\) for all observations

Model 1: Our linear model with all the variables

Then we could also say that we could test the global fit by comparing the RSS from model 0 (the null model), versus model 1 (the one with the variables), e.g. \[RSS_0-RSS_1\]

This will always be positive, why?

We will actually instead change this to be a proportional increase, i.e. relative to the full model, how much increase in RSS do I get when I take out the variables: \[\frac{RSS_0-RSS_1}{RSS_1}\]

To make this quantity more comparable across many datasets, we are going to normalize this quantity by the number of variables in the data, \[F=\frac{(RSS_0-RSS_1)/p}{RSS_1/(n-p-1)}\] Notice that the \(RSS_0\) of our 0 model is actually the TSS. This is because \[\hat{y}^{\text{Model 0}}=\bar{y}\] so \[RSS_0=\sum_{i=1}^n (y_i-\hat{y}^{\text{Model 0}})^2=\sum_{i=1}^n (y_i-\bar{y})^2\] Further, \[RSS_1/(n-p-1)=\hat{\sigma}^2\] So we have \[F=\frac{(TSS-RSS)/p}{\hat{\sigma}^2}\]

All of this we can verify on our data:

n <- nrow(body)

p <- ncol(body) - 1

tss <- (n - 1) * var(body$BODYFAT)

rss <- sum(residuals(ft)^2)

sigma <- summary(ft)$sigma

(tss - rss)/p/sigma^2## [1] 92.61904## value numdf dendf

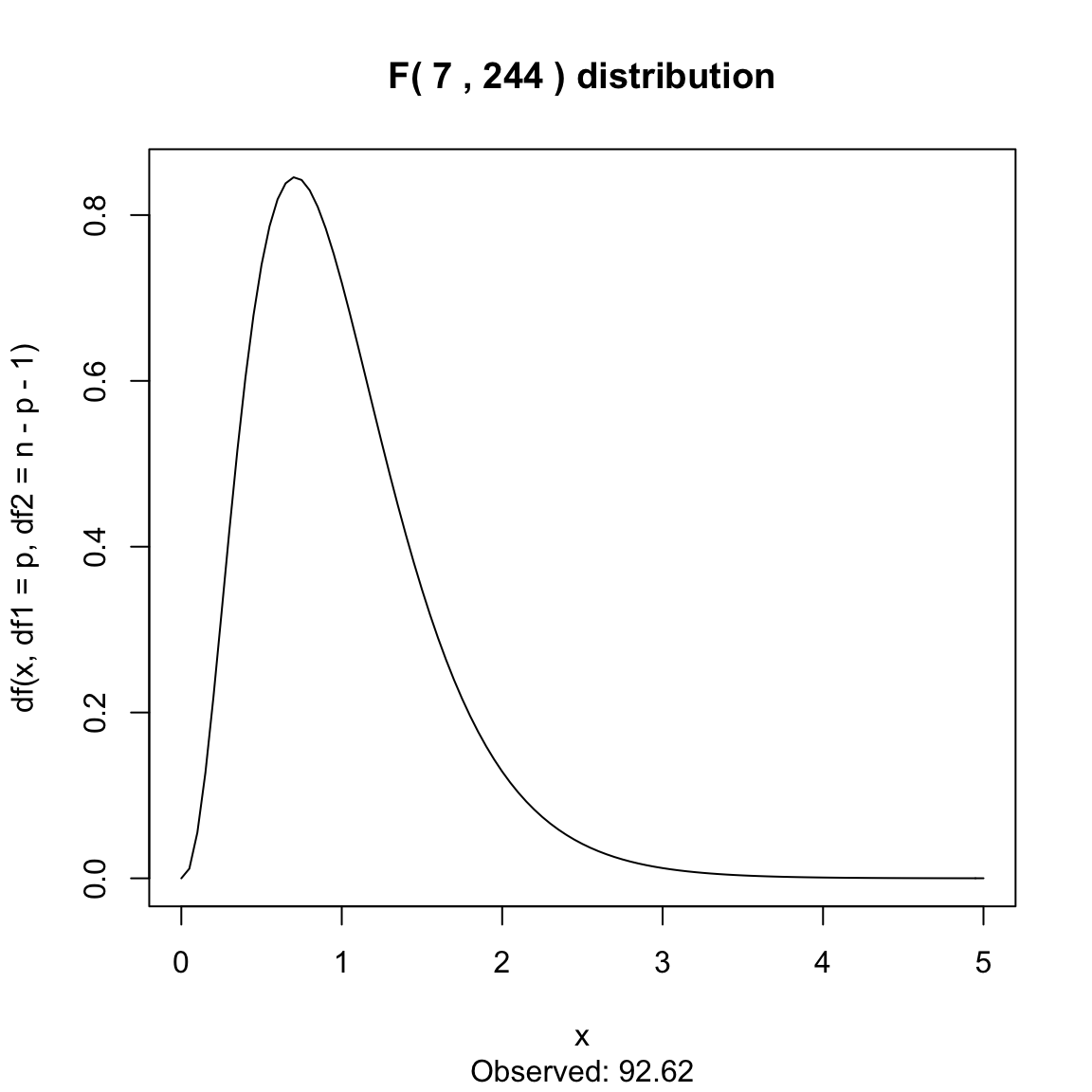

## 92.61904 7.00000 244.00000We do all this normalization, because under our assumptions of the parametric model, the \(F\) statistic above follows a \(F\)-distribution. The \(F\) distribution you have seen in a HW when you were simulating data, and has two parameters, the degrees of freedom of the numerator (\(df1\)) and the degrees of freedom of the denominator (\(df2\)); they are those constants we divide the numerator and denominator by in the definition of the \(F\) statistic. Then the \(F\) statistic we described above follows a \(F(p, n-p-1)\) distribution under our parametric model.

Here is the null distribution for our \(F\) statistic for the bodyfat:

curve(df(x, df1 = p, df2 = n - p - 1), xlim = c(0,

5), main = paste("F(", p, ",", n - p - 1, ") distribution"),

sub = paste("Observed:", round(summary(ft)$fstatistic["value"],

2)))

This is a highly significant result, and indeed most tests of general fit are highly significant. It is rare that the entire set of variables collected have zero predictive value to the response!

6.6.2.2 Permutation test for global fit

Our null hypothesis to assess the global fit is that the \(x_i\) do not give us any information regarding the \(y\). We had a similar situation previously when we considered comparing two groups. There, we measured a response \(y\) on two groups, and wanted to know whether the group assignment of the observation made a difference in the \(y\) response. To answer that question with permutation tests, we permuted the assignment of the \(y_i\) variables into the two groups.

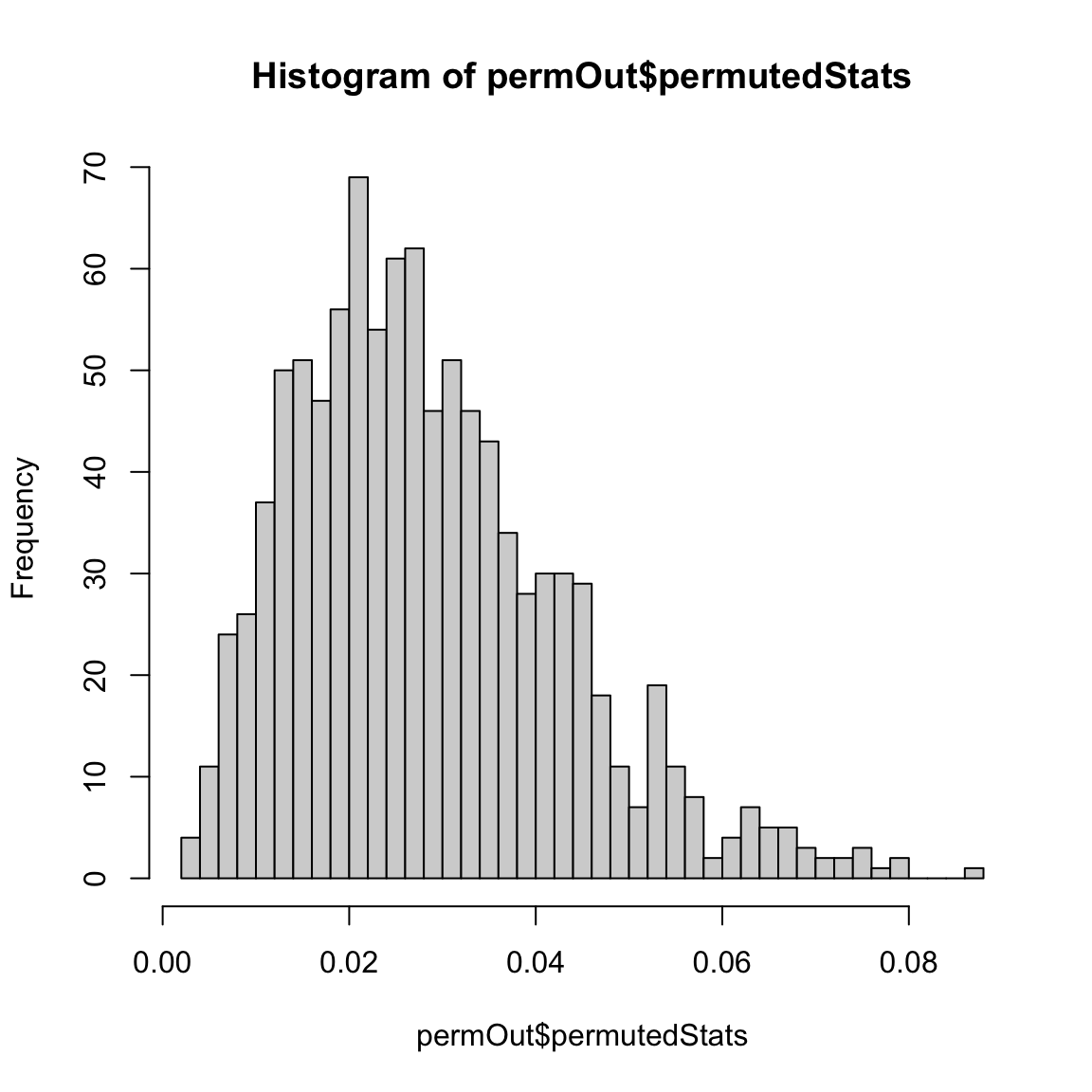

Then we can think of the global fit of the regression similarly, since under the null knowing \(x_i\) doesn’t give us any information about \(y_i\), so I can permute the assignment of the \(y_i\) to \(x_i\) and it shouldn’t change the fit of our data.

Specifically, we have a statistic, \(R^2\), for how well our predictions fit the data. We observe pairs \((y_i, x_i)\) (\(x_i\) here is a vector of all the variables for the observation \(i\)). Then

- Permute the order of the \(y_i\) values, so that the \(y_i\) are paired up with different \(x_i\).

- Fit the regression model on the permuted data

- Calculate \(R^2_b\)

- Repeat \(B\) times to get \(R^2_1,\ldots,R^2_B\).

- Determine the p-value of the observed \(R^2\) as compared to the compute null distribution

We can do this with the body fat dataset:

set.seed(147980)

permutationLM <- function(y, data, n.repetitions, STAT = function(lmFit) {

summary(lmFit)$r.squared

}) {

stat.obs <- STAT(lm(y ~ ., data = data))

makePermutedStats <- function() {

sampled <- sample(y)

fit <- lm(sampled ~ ., data = data)

return(STAT(fit))

}

stat.permute <- replicate(n.repetitions, makePermutedStats())

p.value <- sum(stat.permute >= stat.obs)/n.repetitions

return(list(p.value = p.value, observedStat = stat.obs,

permutedStats = stat.permute))

}

permOut <- permutationLM(body$BODYFAT, data = body[,

-1], n.repetitions = 1000)

hist(permOut$permutedStats, breaks = 50)

## $p.value

## [1] 0

##

## $observedStat

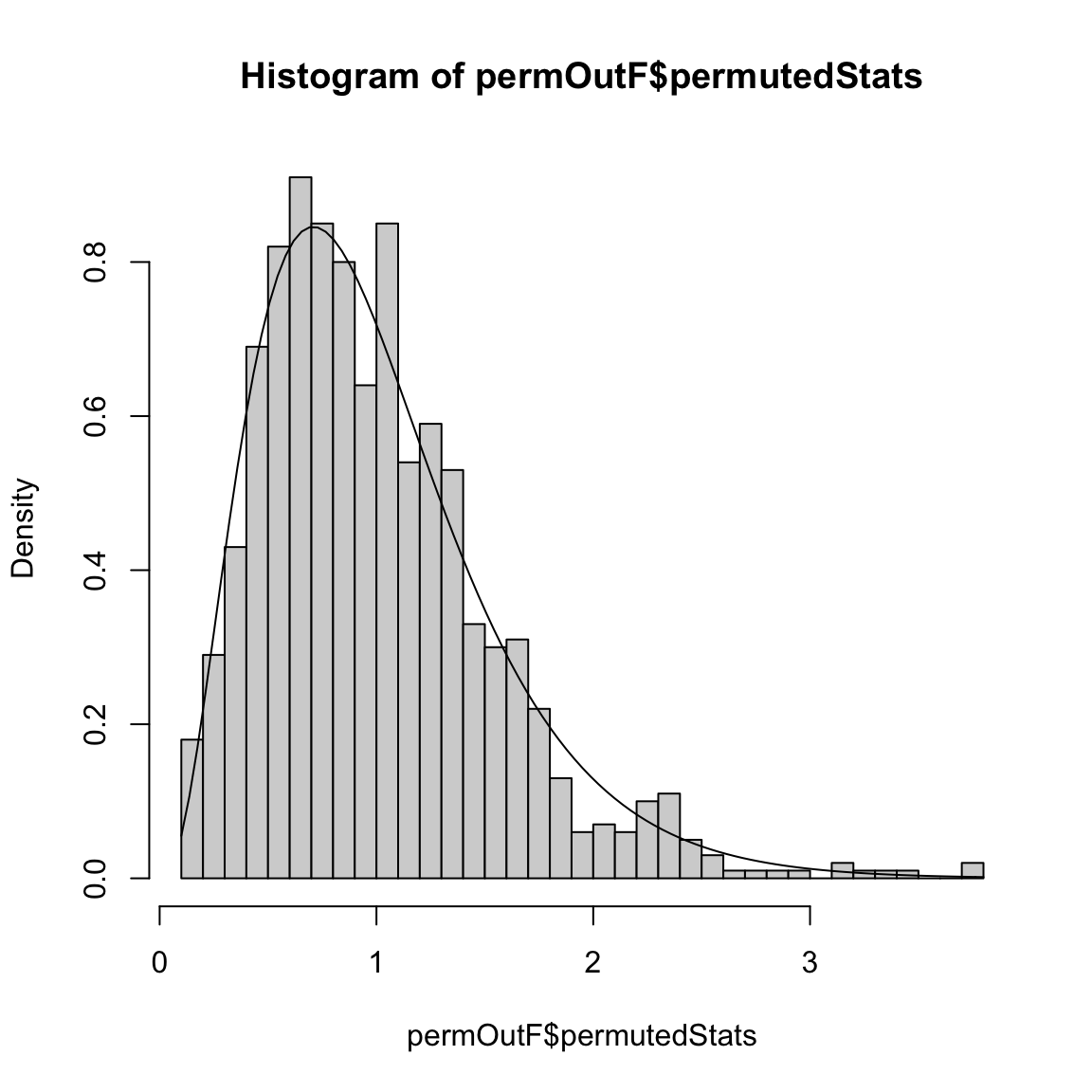

## [1] 0.7265596Notice that we could also use the \(F\) statistic from before too (here we overlay the null distribution of the \(F\) statistic from the parametric model for comparison),

n <- nrow(body)

p <- ncol(body) - 1

permOutF <- permutationLM(body$BODYFAT, data = body[,

-1], n.repetitions = 1000, STAT = function(lmFit) {

summary(lmFit)$fstatistic["value"]

})

hist(permOutF$permutedStats, freq = FALSE, breaks = 50)

curve(df(x, df1 = p, df2 = n - p - 1), add = TRUE,

main = paste("F(", p, ",", n - p - 1, ") distribution"))

## $p.value

## [1] 0

##

## $observedStat

## value

## 92.619046.6.3 Individual Variable Importance

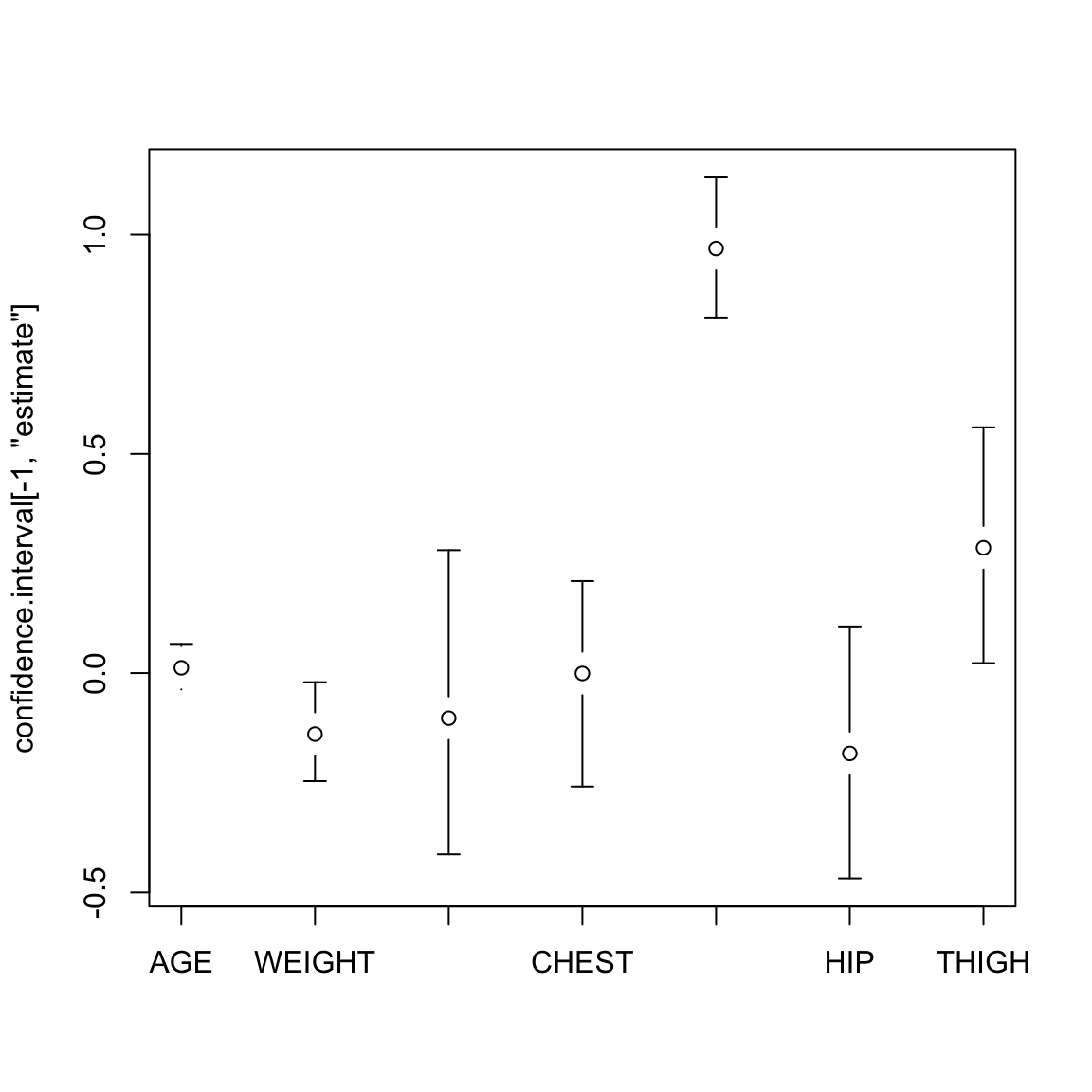

We can also ask about individual variable, \(\beta_j\). This is a problem that we have discussed in the setting of simple regression, where we are interested in inference regarding the parameter \(\beta_j\), either with confidence intervals of \(\beta_j\) or the null hypothesis: \[H_0: \beta_j=0\]

In order to perform inference for \(\beta_j\), we have two possibilities of how to perform inference, like in simple regression: bootstrap CI and the parametric model.

6.6.3.1 Bootstrap for CI of \(\hat{\beta}_j\)

Performing the bootstrap to get CI for \(\hat{\beta}_j\) in multiple regression is the exact same procedure as in simple regression.

Specifically, we still bootstrap pairs \((y_i,x_i)\) and each time recalculate the linear model. For each \(\beta_j\), we will have a distribution of \(\hat{\beta}_j^*\) for which we can perform confidence intervals.

We can even use the same function as we used in the simple regression setting with little changed.

bootstrapLM <- function(y, x, repetitions, confidence.level = 0.95) {

stat.obs <- coef(lm(y ~ ., data = x))

bootFun <- function() {

sampled <- sample(1:length(y), size = length(y),

replace = TRUE)

coef(lm(y[sampled] ~ ., data = x[sampled, ]))

}

stat.boot <- replicate(repetitions, bootFun())

level <- 1 - confidence.level

confidence.interval <- apply(stat.boot, 1, quantile,

probs = c(level/2, 1 - level/2))

return(list(confidence.interval = cbind(lower = confidence.interval[1,

], estimate = stat.obs, upper = confidence.interval[2,

]), bootStats = stat.boot))

}## lower estimate upper

## (Intercept) -75.68776383 -3.747573e+01 -3.84419402

## AGE -0.03722018 1.201695e-02 0.06645578

## WEIGHT -0.24629552 -1.392006e-01 -0.02076377

## HEIGHT -0.41327145 -1.028485e-01 0.28042319

## CHEST -0.25876131 -8.311678e-04 0.20995486

## ABDOMEN 0.81115069 9.684620e-01 1.13081481

## HIP -0.46808557 -1.833599e-01 0.10637834

## THIGH 0.02272414 2.857227e-01 0.56054626require(gplots)

with(bodyBoot, plotCI(confidence.interval[-1, "estimate"],

ui = confidence.interval[-1, "upper"], li = confidence.interval[-1,

"lower"], xaxt = "n"))

axis(side = 1, at = 1:(nrow(bodyBoot$conf) - 1), rownames(bodyBoot$conf)[-1])

Note, that unless I scale the variables, I can’t directly interpret the size of the \(\beta_j\) as its importance (see commentary above under interpretation).

Assumptions of the Bootstrap

Recall that the bootstrap has assumptions, two important ones being that we have independent observations and the other being that we can reasonably estimate \(F\) with \(\hat{F}\). However, the distribution \(F\) we need to estimate is not the distribution of an individual a single variable, but the entire joint distributions of all the variables. This gets to be a harder and harder task for larger numbers of variables (i.e. for larger \(p\)).

In particular, when using the bootstrap in multiple regression, it will not perform well if \(p\) is large relative to \(n\).56 In general you want the ratio \(p/n\) to be small (like less than 0.1); otherwise the bootstrap can give very poor CI.57

## Ratio of p/n in body fat: 0.031746036.6.3.2 Parametric models

Again, our inference on \(\beta_j\) will look very similar to simple regression. Using our parametric assumptions about the distribution of the errors will mean that each \(\hat{\beta}_j\) is normally distributed 58 \[\hat{\beta}_j \sim N(\beta_j, \nu^2_j)\] where \[\nu^2_j=\ell(X)\sigma^2\] (\(\ell(X)\) is a linear combination of all of the observed explanatory variables, given in the matrix \(X\)).59

Using this, we create t-statistics for each \(\beta_j\) by standardizing \(\hat{\beta}_j\)

\[T_j=\frac{\hat{\beta_j}}{\sqrt{\hat{var}(\hat{\beta_j})}}\]

Just like the t-test, \(T_j\) should be normally distributed60

This is exactly what lm gives us:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -3.747573e+01 14.49480190 -2.585460204 1.030609e-02

## AGE 1.201695e-02 0.02933802 0.409603415 6.824562e-01

## WEIGHT -1.392006e-01 0.04508534 -3.087490946 2.251838e-03

## HEIGHT -1.028485e-01 0.09787473 -1.050817489 2.943820e-01

## CHEST -8.311678e-04 0.09988554 -0.008321202 9.933675e-01

## ABDOMEN 9.684620e-01 0.08530838 11.352484708 2.920768e-24

## HIP -1.833599e-01 0.14475772 -1.266667813 2.064819e-01

## THIGH 2.857227e-01 0.13618546 2.098041564 3.693019e-02Correlation of estimates

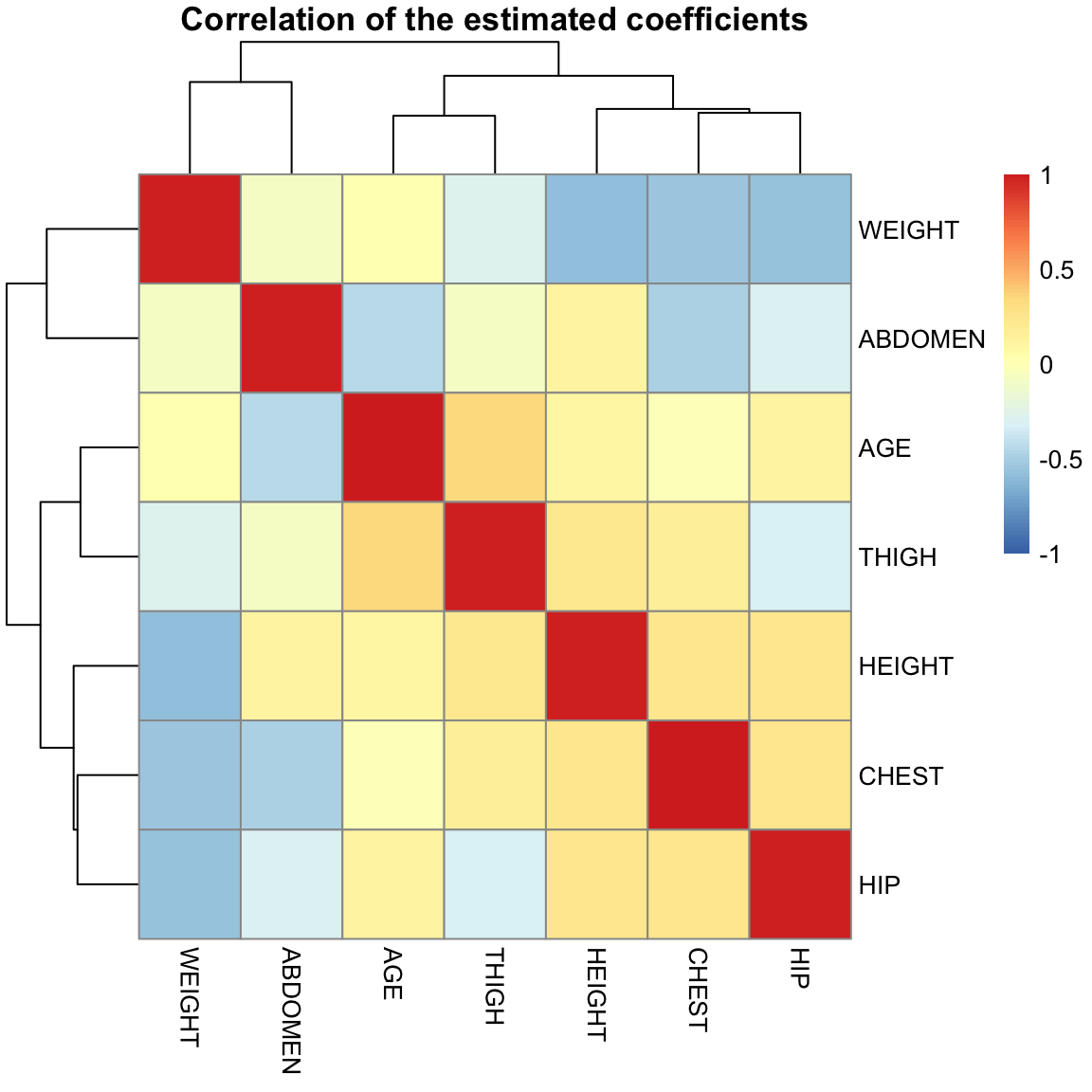

The estimated \(\hat{\beta}_j\) are themselves correlated with each other, unless the \(x^{j}\) and \(x^{k}\) variables are uncorrelated.

library(pheatmap)

pheatmap(summary(ft, correlation = TRUE)$corr[-1, -1],

breaks = seq(-1, 1, length = 100), main = "Correlation of the estimated coefficients")

6.6.4 Inference on \(\hat{y}(x)\)

We can also create confidence intervals on the prediction given by the model, \(\hat{y}(x)\). For example, suppose now that we are asked to predict the bodyfat percentage of an individual who has a particular set of variables \(x_0\). Then the same logic in simple regression follows here.

There are two intervals associated with prediction:

- Confidence intervals for the average response, i.e. bodyfat percentage for all individuals who have the values \(x_0\). The average (or expected values) at \(x_0\) is \[E(y(x_0))=\beta_0+\beta_1 x_0^{(1)}+\ldots+\beta_p x_0^{(p)}.\] and so we estimate it using our estimates of \(\beta_j\), getting \(\hat{y}(x_0)\).

Then our \(1-\alpha\) confidence interval will be61 \[\hat{y}(x_0) \pm t_{\alpha_2} \sqrt{\hat{var}(\hat{y}(x_0) )}\]

- Confidence intervals for a particular individual (prediction interval). If we knew \(\beta\) completely, we still wouldn’t know the value of the particular individual. But if we knew \(\beta\), we know that our parametric model says that all individuals with the same \(x_0\) values are normally distributed as \[N(\beta_0+\beta_1 x_0^{(1)}+\ldots+\beta_p x_0^{(p)}, \sigma^2) \]

So we could give an interval that we would expect 95% confidence that such an individual would be in, how?

We don’t know \(\beta\), so actually we have to estimate both parts of this, \[\hat{y}(x_0)+\pm 1.96 \sqrt{\hat{\sigma}^2+ \hat{var}(\hat{y}(x_0) )}\]

Both of these intervals are obtained in R via the predict function.

x0 = data.frame(AGE = 30, WEIGHT = 180, HEIGHT = 70,

CHEST = 95, ABDOMEN = 90, HIP = 100, THIGH = 60)

predict(ft, x0, interval = "confidence")## fit lwr upr

## 1 16.51927 15.20692 17.83162## fit lwr upr

## 1 16.51927 7.678715 25.35983Note that the prediction interval is much wider compared to the confidence interval for average response.

% % ## Regression Diagnostics

Our next topic in multiple regression is regression diagnostics. The inference procedures that we talked about work under the assumptions of the linear regression model. If these assumptions are violated, then our hypothesis tests, standard errors and confidence intervals will be violated. Regression diagnostics enable us to diagnose if the model assumptions are violated or not.

The key assumptions we can check for in the regression model are:

- Linearity: the mean of the \(y\) is linearly related to the explanatory variables.

- Homoscedasticity: the errors have the same variance.

- Normality: the errors have the normal distribution.

- All the observations obey the same model (i.e., there are no outliers or exceptional observations).

These are particularly problems for the parametric model; the bootstrap will be relatively robust to these assumptions, but violations of these assumptions can cause the inference to be less powerful – i.e. harder to detect interesting signal.

These above assumptions can be checked by essentially looking at the residuals:

- Linearity: The residuals represent what is left in the response variable after the linear effects of the explanatory variables are taken out. So if there is a non-linear relationship between the response and one or more of the explanatory variables, the residuals will be related non-linearly to the explanatory variables. This can be detected by plotting the residuals against the explanatory variables. It is also common to plot the residuals against the fitted values. Note that one can also detect non-linearity by simply plotting the response against each of the explanatory variables.

- Homoscedasticity: Heteroscedasticity can be checked again by plotting the residuals against the explanatory variables and the fitted values. It is common here to plot the absolute values of the residuals or the square root of the absolute values of the residuals.

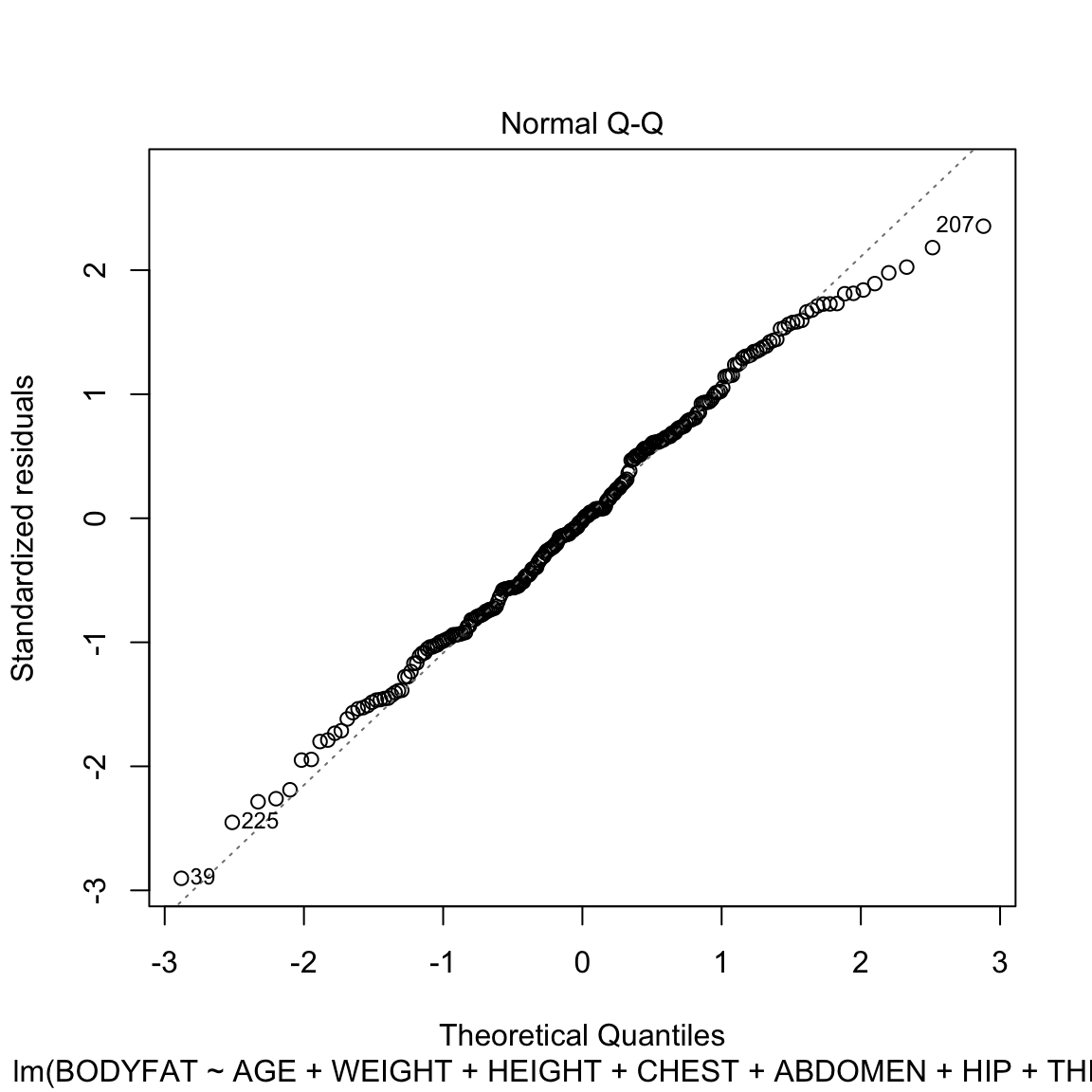

- Normality: Detected by the normal Q-Q plot of the residuals.

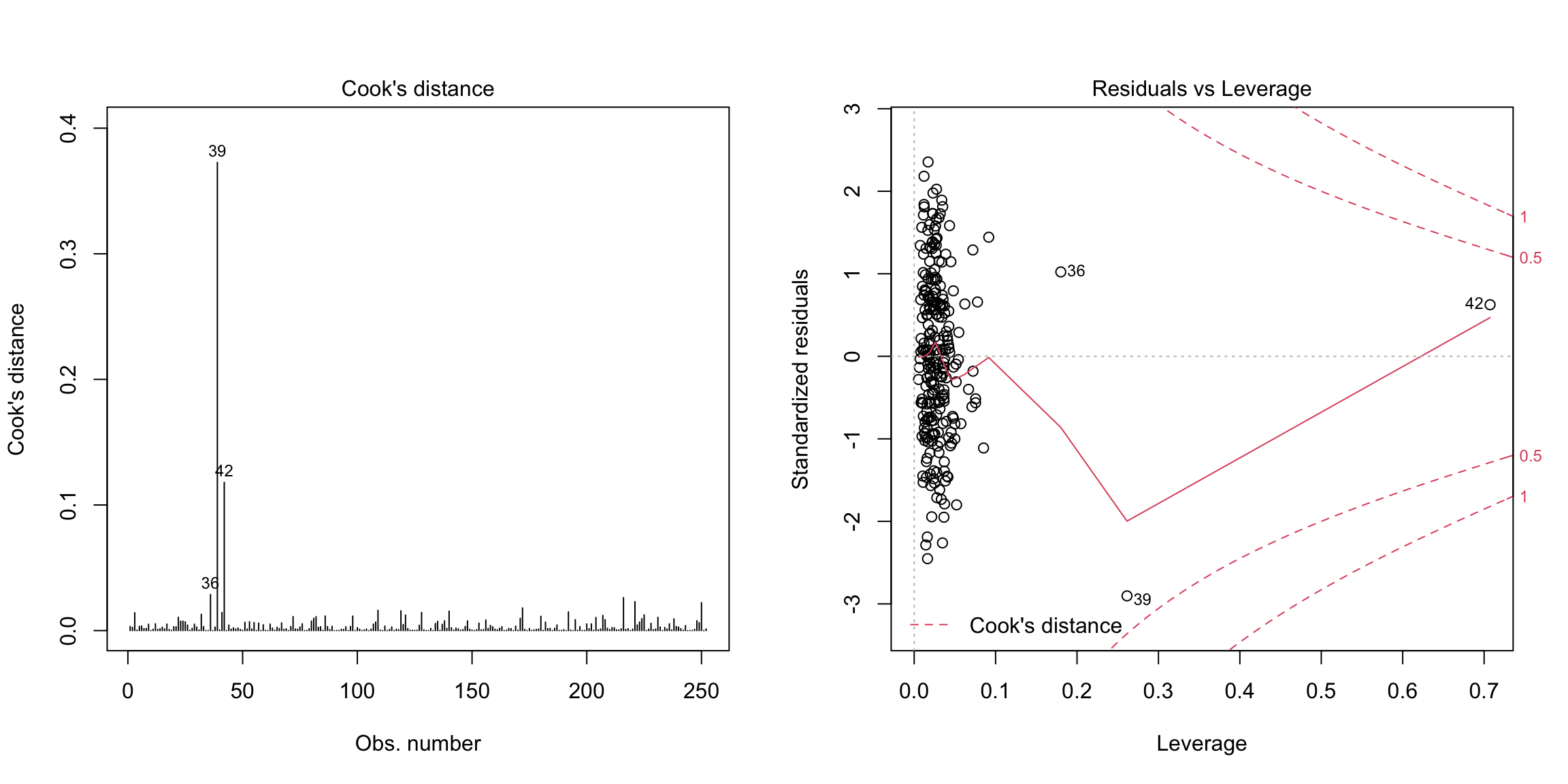

- Outliers: The concern with outliers is that they could be effecting the fit. There are three measurements we could use to consider whether a point is an outlier

- Size of the residuals (\(r_i\)) – diagnostics often use standardized residuals to make them more comparable between different observations62

- Leverage – a measure of how far the vector of explanatory variables of an observation are from the rest, and on average are expected to be about \(p/n\).

- Cook’s Distance – how much the coefficients \(\hat{\beta}\) will change if you leave out observation \(i\), which basically combines the residual and the leverage of a point.

Outliers typically will have either large (in absolute value) residuals and/or large leverage.

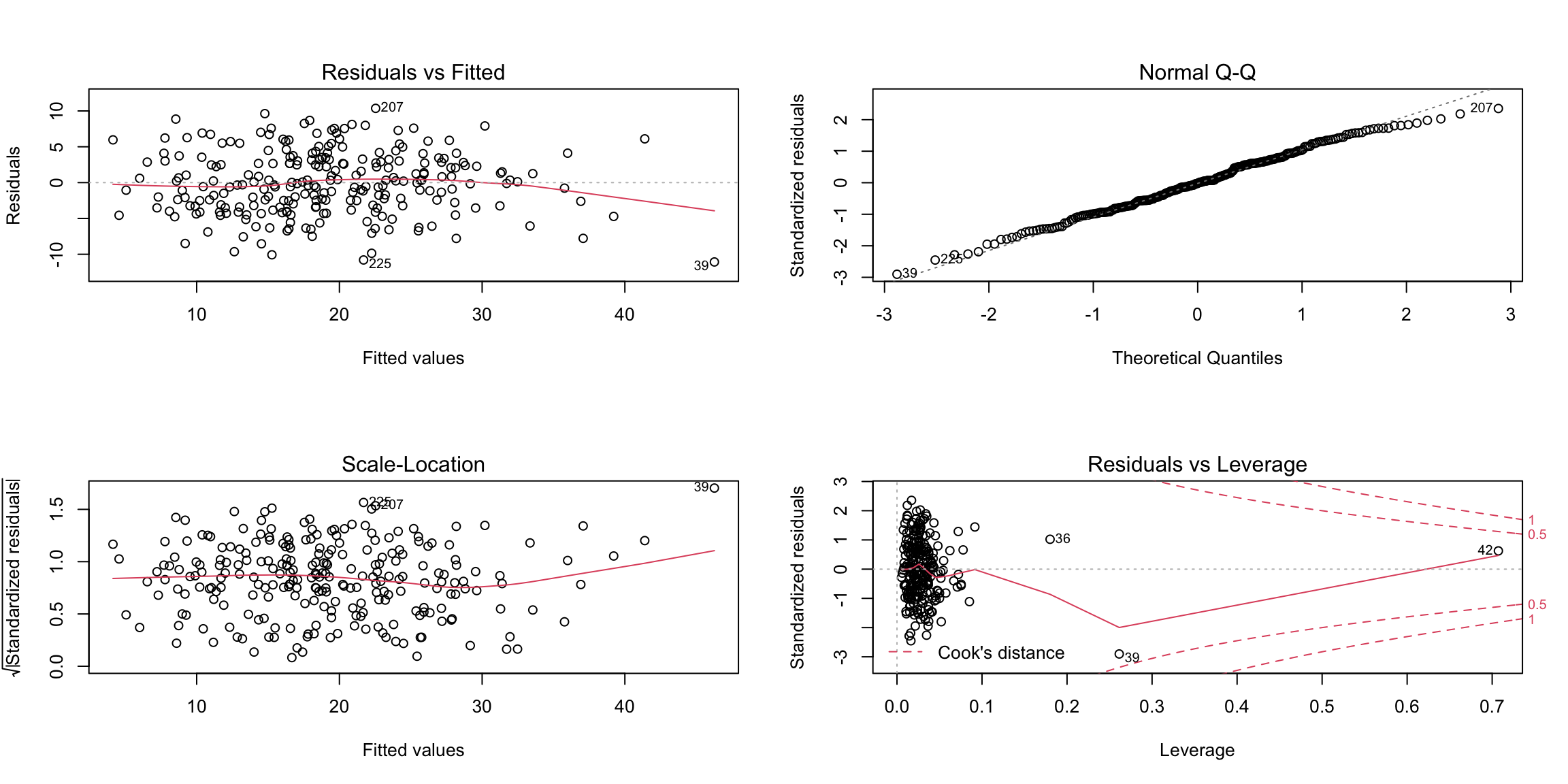

Consider the bodyfat dataset. A simple way for doing some of the standard regression diagnostics is to use the plot

command as applied to the linear model fit:

Let’s go through these plots and what we can look for in these plots. There can sometimes be multiple issues that we can detect in a single plot.

Independence

Note that the most important assumption is independence. Violations of independence will cause problems for every inference procedure we have looked at, including the resampling procedures, and the problems such a violation will cause for your inference will be even worse than the problems listed above. Unfortunately, violations of independence are difficult to check for in a generic problem. If you suspect a certain kind of dependence, e.g. due to time or geographical proximity, there are special tools that can be used to check for that. But if you don’t have a candidate for what might be the source of the dependence, the only way to know there is no dependence is to have close control over how the data was collected.

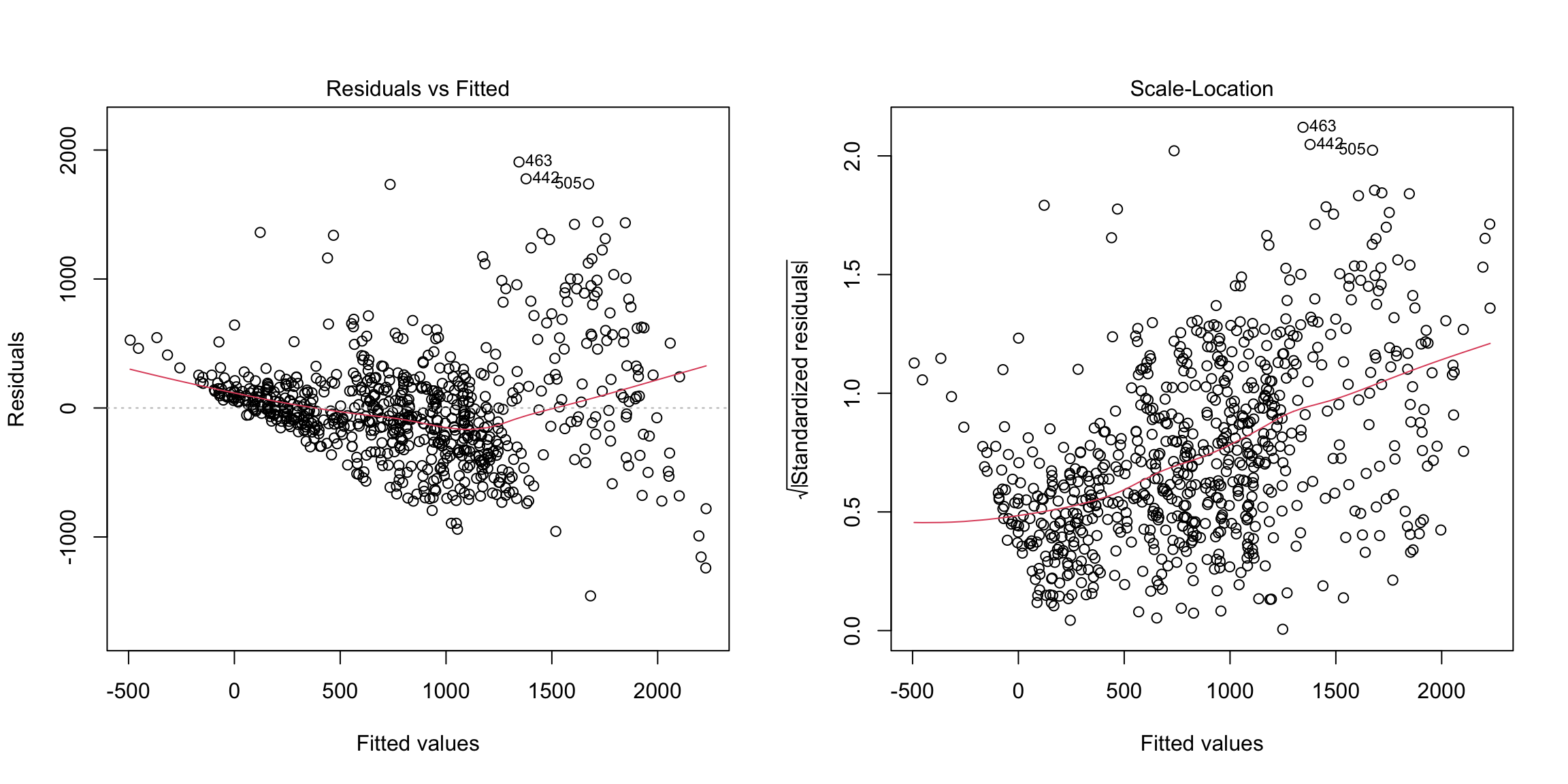

6.6.5 Residuals vs. Fitted Plot

The first plot is the residuals plotted against the fitted values. The points should look like a random scatter with no discernible pattern. We are often looking for two possible violations:

- Non-linear relationship to response, detected by a pattern in the mean of the residuals. Recall that the correlation between \(\hat{y}\) and the residuals must be numerically zero – but that doesn’t mean that there can’t be non-linear relationships.

- Heteroscedasticity – a pattern in the variability of the residuals, for example higher variance in observations with large fitted values.

Let us now look at some simulation examples in the simple setting of a single predictor to demonstrate these phenomena.

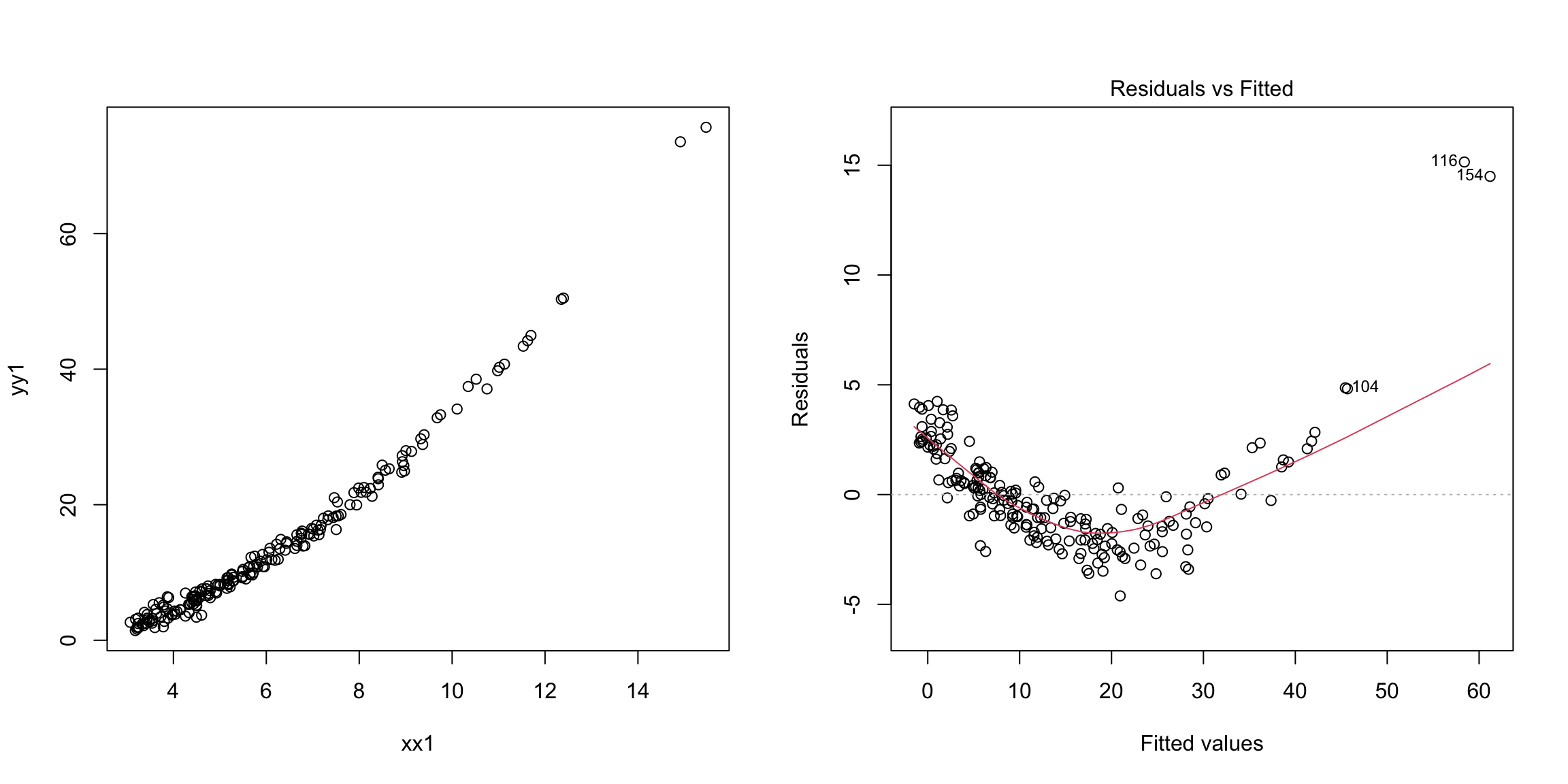

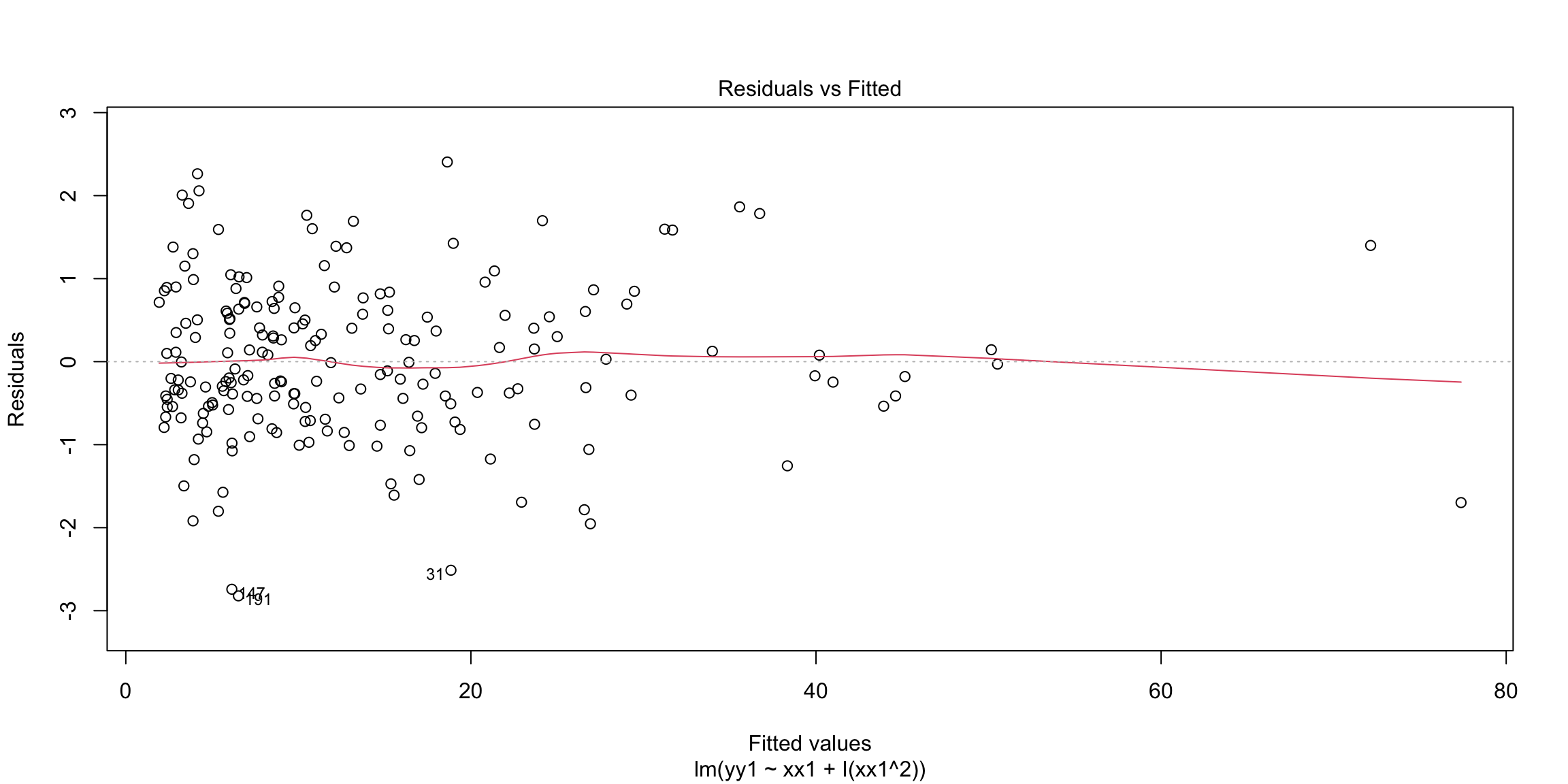

Example: Non-linearity

In the next example, the response is related non-linearly to \(x\).

n = 200

xx1 = 3 + 4 * abs(rnorm(n))

yy1 = -2 + 0.5 * xx1^(1.85) + rnorm(n)

m1 = lm(yy1 ~ xx1)

par(mfrow = c(1, 2))

plot(yy1 ~ xx1)

plot(m1, which = 1) Non-linearity is fixed by adding non-linear functions of explanatory

variables as additional explanatory variables. In this example, for

instance, we can add \(x^2\) as an additional explanatory variable.

Non-linearity is fixed by adding non-linear functions of explanatory

variables as additional explanatory variables. In this example, for

instance, we can add \(x^2\) as an additional explanatory variable.

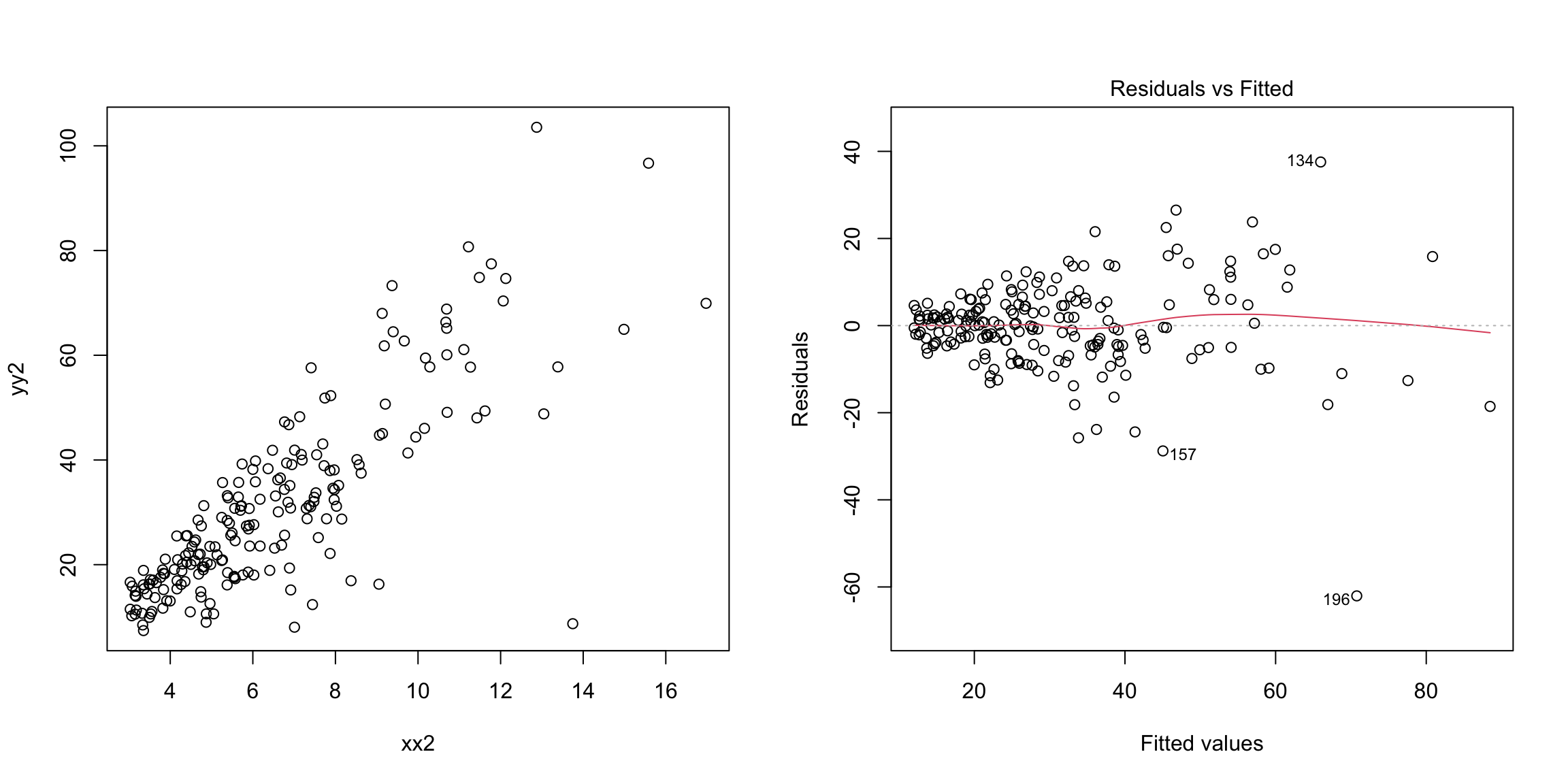

Example: Heteroscedasticity

Next let us consider an example involving heterscedasticity (unequal variance).

set.seed(478912)

n = 200

xx2 = 3 + 4 * abs(rnorm(n))

yy2 = -2 + 5 * xx2 + 0.5 * (xx2^(1.5)) * rnorm(n)

m2 = lm(yy2 ~ xx2)

par(mfrow = c(1, 2))

plot(yy2 ~ xx2)

plot(m2, which = 1) Notice that even with a single variable, it is easier to see the difference in variability with the residuals than in plotting \(y\) versus \(x\) (in the plot of \(y\) versus \(x\), the fact that \(y\) is growing with \(x\) makes it harder to be sure).

Notice that even with a single variable, it is easier to see the difference in variability with the residuals than in plotting \(y\) versus \(x\) (in the plot of \(y\) versus \(x\), the fact that \(y\) is growing with \(x\) makes it harder to be sure).

Heteroscedasticity is a little tricky to handle in general. Heteroscedasiticity can sometimes be fixed by applying a transformation to the response variable (\(y\)) before fitting the regression. For example, if all the response values are positive, taking the logarithm or square root of the response variable is a common solution.

The Scale-Location plot (which is one of the default plots of plot) is also useful for detecting heteroscedasiticity. It plots the square

root of the absolute value of the residuals (actually standardized

residuals but these are similar to the residuals) against the fitted

values. Any increasing or decreasing pattern in this plot indicates

heteroscedasticity. Here is that plot on the simulated data that has increasing variance:

Back to data

We don’t see any obvious pattern in the fitted versus residual plot.

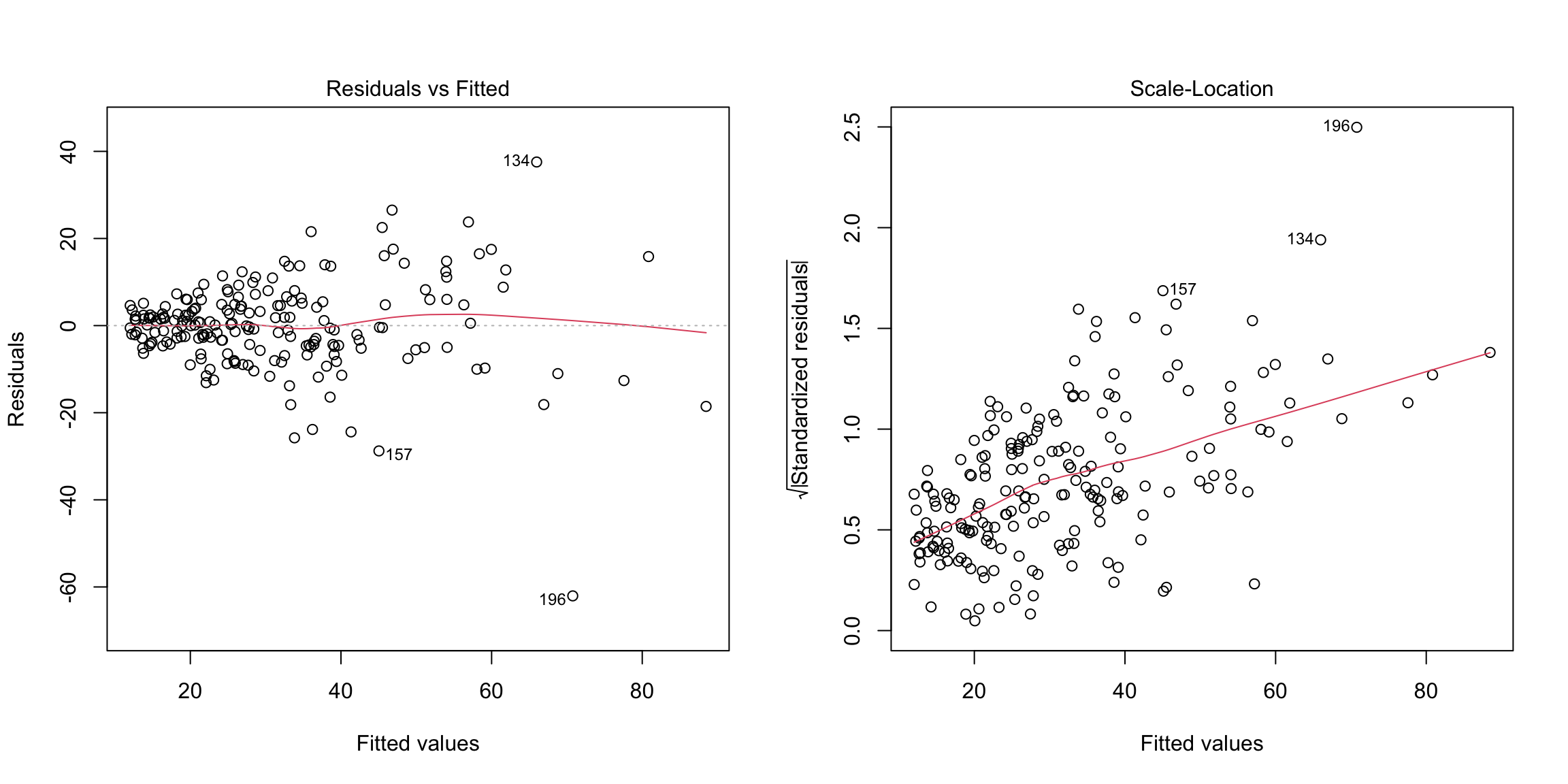

We do the same plot from our bike regression from above:

md1 = lm(casual ~ atemp + workingday + weathersit,

data = bike)

par(mfrow = c(1, 2))

plot(md1, which = c(1, 3))

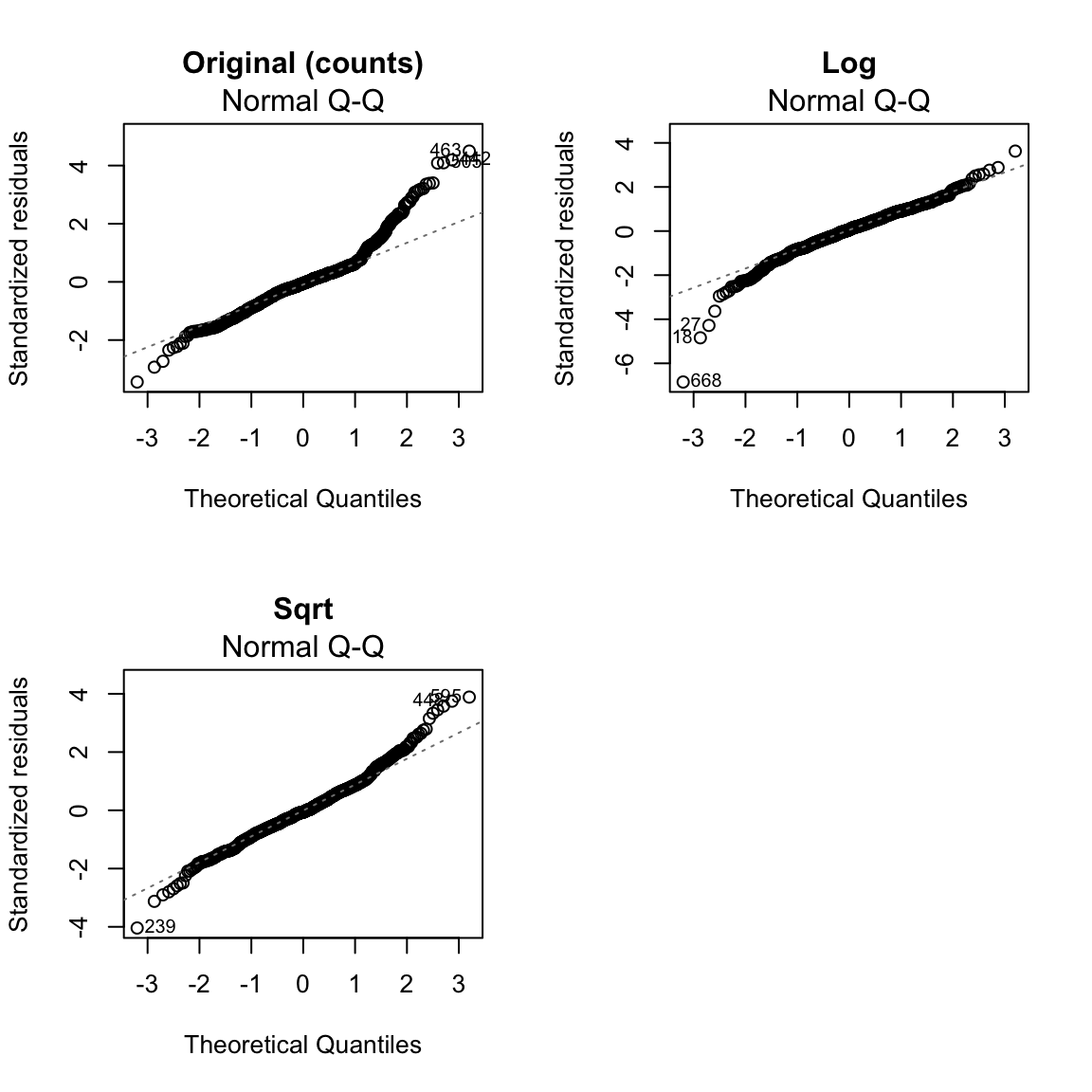

Here we see serious heteroskedasticity, where there is more variability in our residuals for larger fitted values than for smaller ones. There’s also possibly signs that our residuals have a pattern to them (not centered at zero), possibly indicating that our linear fit is not appropriate.

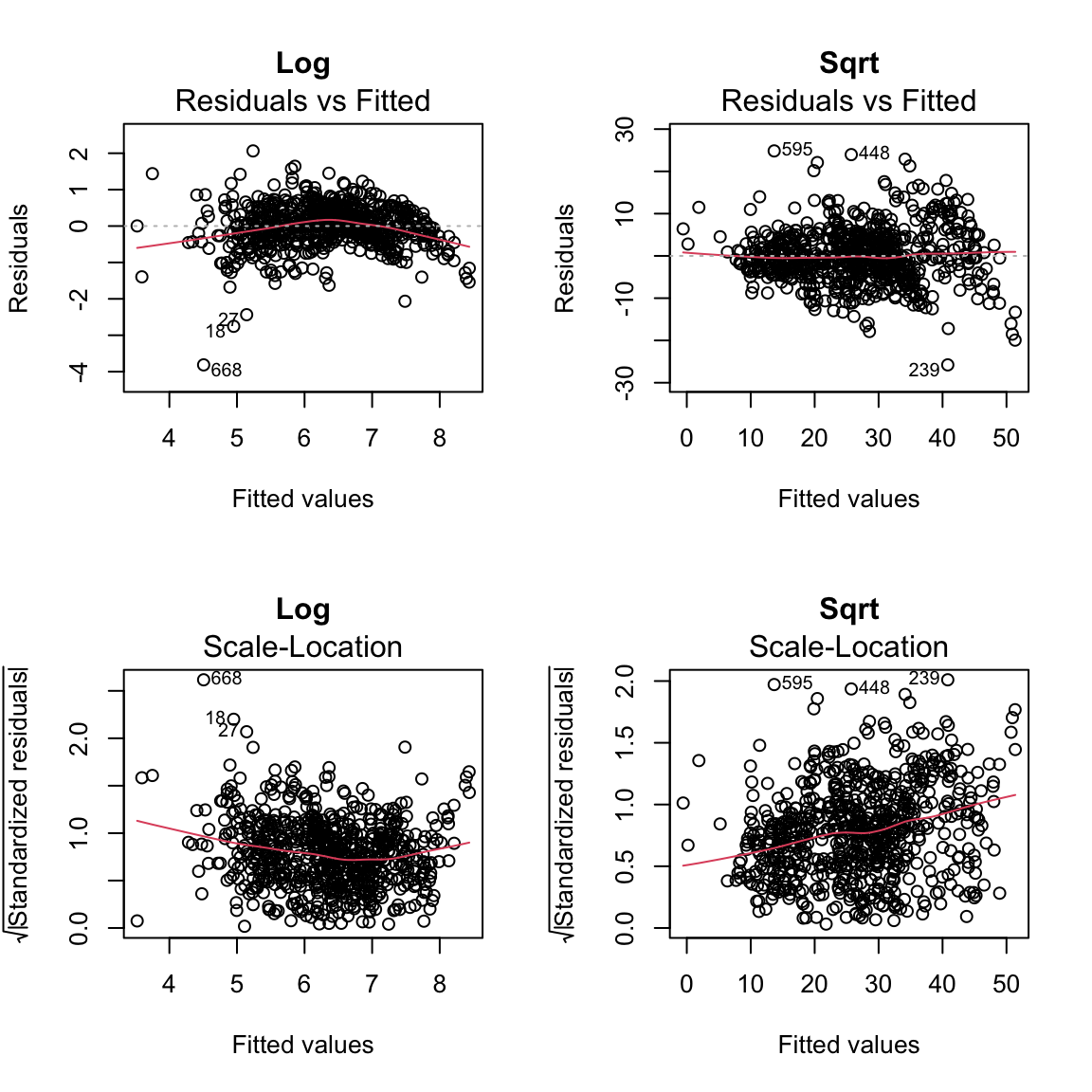

The response here is counts (number of casual users) and it is common to transform such data. Here we show the fitted/residual plot after transforming the response by the log and square-root:

mdLog = lm(log(casual) ~ atemp + workingday + weathersit,

data = bike)

mdSqrt = lm(sqrt(casual) ~ atemp + workingday + weathersit,

data = bike)

par(mfrow = c(2, 2))

plot(mdLog, which = 1, main = "Log")

plot(mdSqrt, which = 1, main = "Sqrt")

plot(mdLog, which = 3, main = "Log")

plot(mdSqrt, which = 3, main = "Sqrt")

Why plot against \(\hat{y}\)?

If we think there is a non linear relationship, shouldn’t we plot against the individual \(x^{(j)}\) variables? We certainly can! Just like with \(\hat{y}\), each \(x^{(j)}\) is uncorrelated with the residuals, but there can be non-linear relationships that show up. Basically any plot we do of the residuals should look like a random cloud of points with no pattern, including against the explanatory variables.

Plotting against the individual \(x^{(j)}\) can help to determine which variables have a non-linear relationship, and can help in determining an alternative model. Of course this is only feasible with a relatively small number of variables.

One reason that \(\hat{y}\) is our default plot is that 1) there are often too many variables to plot against easily; and 2) there are many common examples where the variance changes as a function of the size of the response, e.g. more variance for larger \(y\) values.

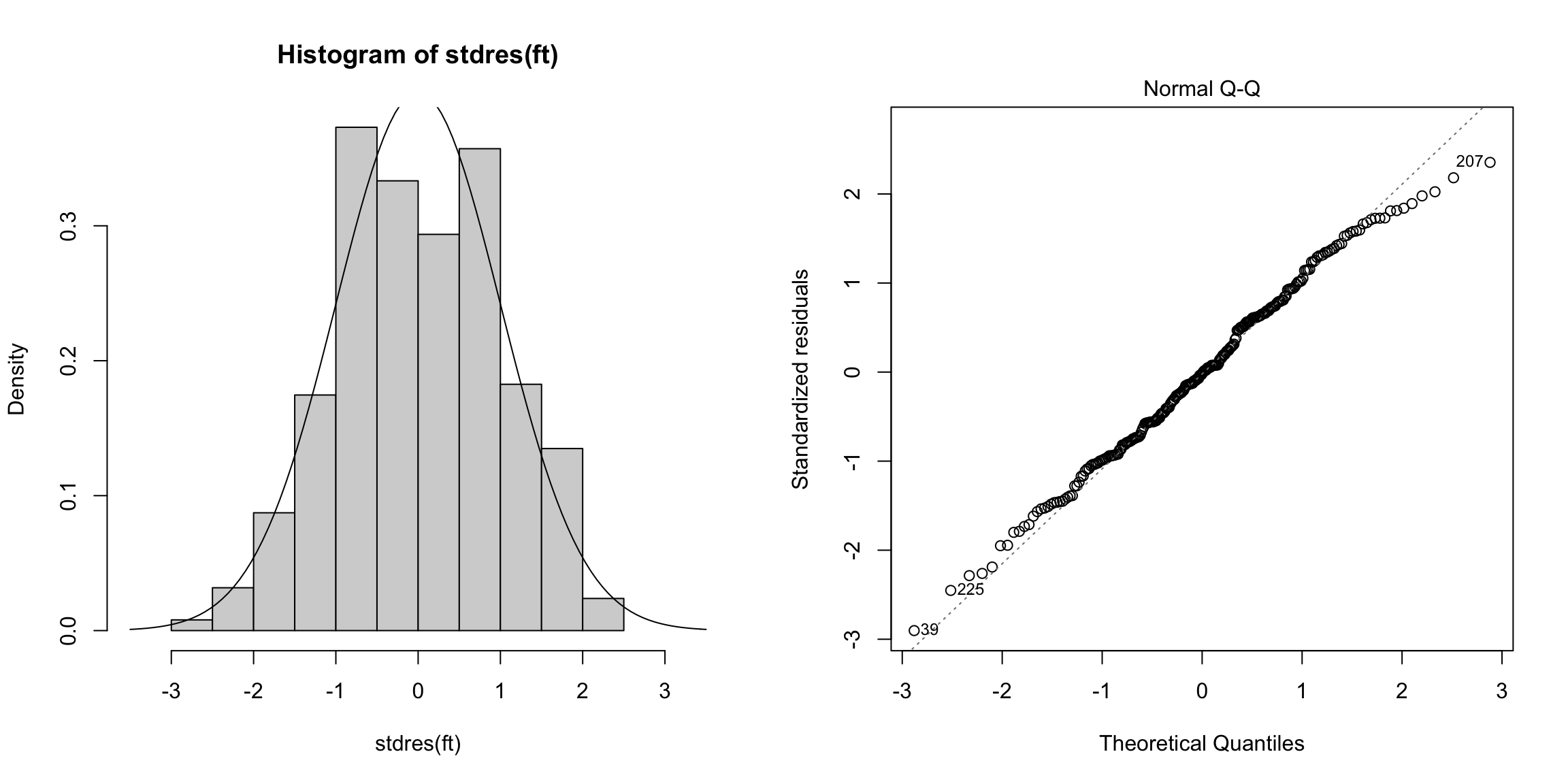

6.6.6 QQ-Plot

The second plot is the normal Q-Q plot of the standardized residuals. If the normal assumption holds, then the points should be along the line here.

library(MASS)

par(mfrow = c(1, 2))

hist(stdres(ft), freq = FALSE, breaks = 10, xlim = c(-3.5,

3.5))

curve(dnorm, add = TRUE)

plot(ft, which = 2)

A QQ-plot is based on the idea that every point in your dataset is a quantile. Specifically, if you have data \(x_1,\ldots,x_n\) and you assume they are all in order, then the probability of finding a data point less than or equal to \(x_1\) is \(1/n\) (assuming there are no ties). So \(x_1\) is the \(1/n\) quantile of the observed data distribution. \(x_2\) is the \(2/n\) quantile, and so forth.63

## 0.3968254%

## -2.453687Under our assumption of normality, then we also know what the \(1/n\) quantile should be based on qnorm (the standardized residuals are such that we expect them to be \(N(0,1)\))

## [1] -2.654759The idea with QQ-plots is that we can do this for all of the data, and compare whether our data has quantiles that match what we would expect for a normal distribution.

Here are some examples of QQ-plots for some simulated data, to give you a sense of how QQ-plots correspond to distributional properties:

par(mfrow = c(4, 2), cex = 2)

n <- 500

qqlim <- c(-4, 4)

set.seed(302)

x <- rnorm(n)

x <- scale(x, scale = TRUE)

hist(x, freq = FALSE, breaks = 10, xlim = c(-4, 4),

main = "Normal Data")

curve(dnorm, add = TRUE)

qqnorm(x, xlim = qqlim, ylim = qqlim)

qqline(x)

x <- rt(n, df = 3)

x <- scale(x, scale = TRUE)

hist(x, freq = FALSE, breaks = 30, xlim = c(-4, 4),

main = "Heavy Tailed")

curve(dnorm, add = TRUE)

qqnorm(x, xlim = qqlim, ylim = qqlim)

qqline(x)

x <- rnorm(n, 0, 1)

x <- scale(sign(x) * abs(x)^{

3/4

}, scale = TRUE)

hist(x, freq = FALSE, breaks = 10, xlim = c(-4, 4),

main = "Light Tailed")

curve(dnorm, add = TRUE)

qqnorm(x, xlim = qqlim, ylim = qqlim)

qqline(x)

x <- rgamma(n, 5, 1)

x <- scale(x, scale = TRUE)

hist(x, freq = FALSE, breaks = 10, xlim = c(-4, 4),

main = "Skewed")

curve(dnorm, add = TRUE)

qqnorm(x, xlim = qqlim, ylim = qqlim)

qqline(x)

Back to body fat data

There are some signs in the right tail that the residuals are a little off normal.

Would you say that they are heavy or light tailed?

Looking at the bike model, we see the QQ plot shows serious problems in the residuals as well. We see that taking a transformation of the response not only helped with the heteroskedasticity, but also makes the residuals look closer to normal. This is not uncommon, that what helps create more constant variance can help the distributional assumptions as well.

par(mfrow = c(2, 2))

plot(md1, which = 2, main = "Original (counts)")

plot(mdLog, which = 2, main = "Log")

plot(mdSqrt, which = 2, main = "Sqrt")

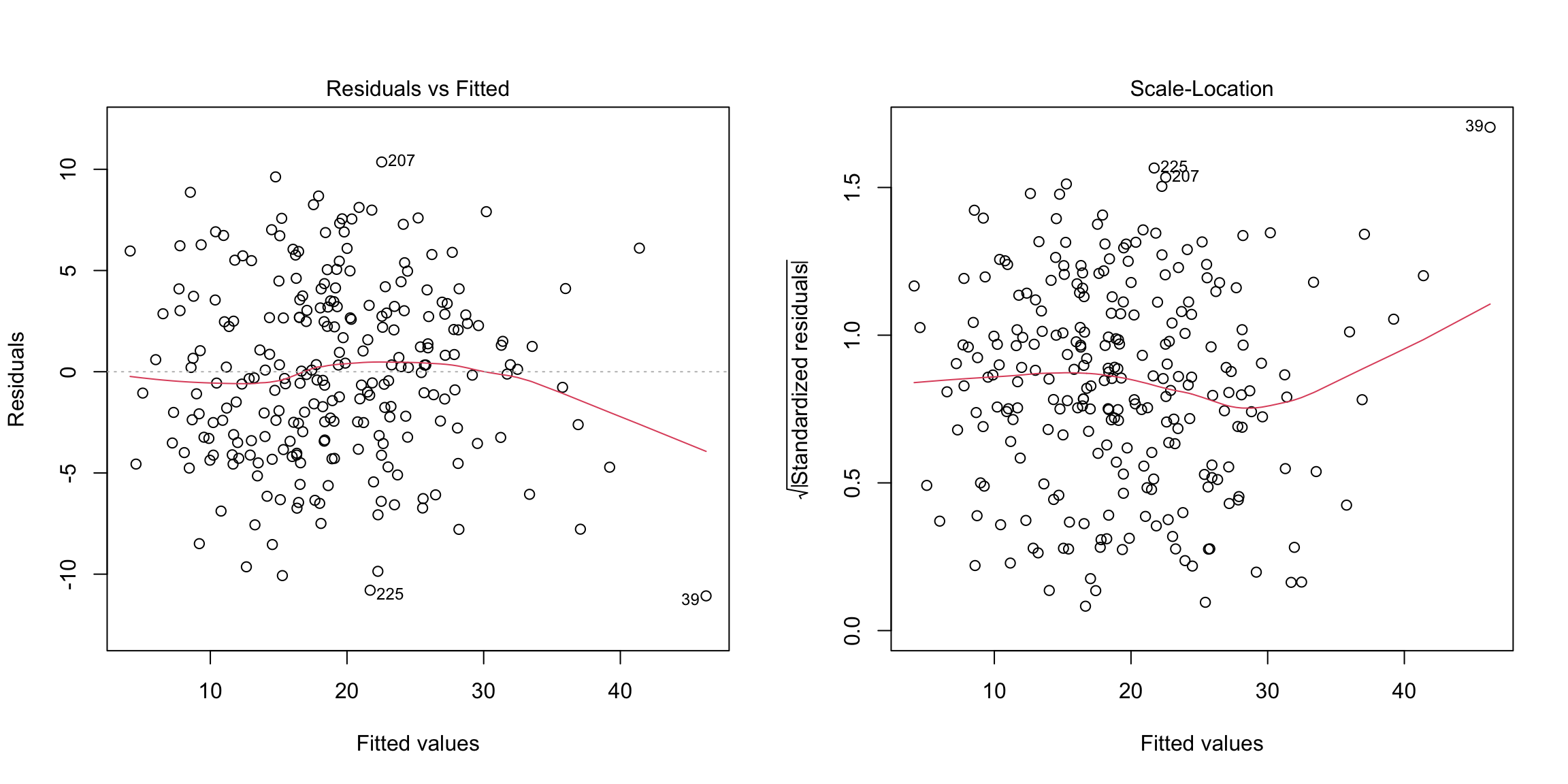

6.6.7 Detecting outliers

The final plots are used for detecting outliers and other exceptional observations. Large leverage or large residuals can indicate potential outliers, as does cooks distance, which is a combination of the two. The default plots give the index of potential outliers to help identify them.

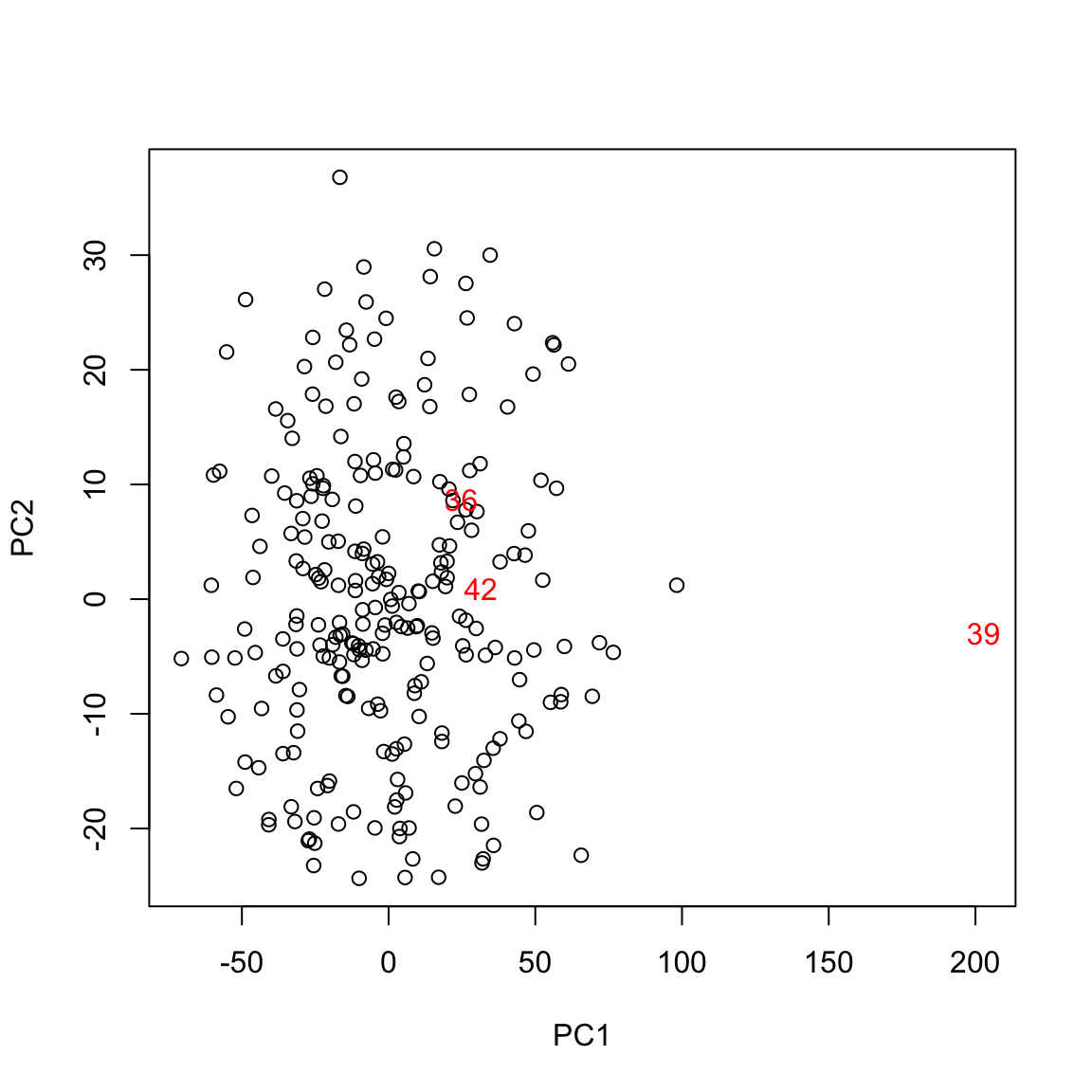

Three points flagged here are observations \(i=39, 42,36\). Let us look at these observations separately, as well as plot some our visualizations highlighting these points:

## High leverage points:## BODYFAT AGE WEIGHT HEIGHT CHEST ABDOMEN HIP THIGH

## 39 35.2 46 363.15 72.25 136.2 148.1 147.7 87.3

## 42 32.9 44 205.00 29.50 106.0 104.3 115.5 70.6

## 36 40.1 49 191.75 65.00 118.5 113.1 113.8 61.9## Mean of each variables:## BODYFAT AGE WEIGHT HEIGHT CHEST ABDOMEN HIP THIGH

## 19.15079 44.88492 178.92440 70.14881 100.82421 92.55595 99.90476 59.40595

pairs(body, panel = function(x, y) {

points(x[-whOut], y[-whOut])

text(x[whOut], y[whOut], labels = whOut)

})

The observation 39 is certainly an outlier in many variables. Observation 42 seems to have an erroneous height recording. Observation 36 seems to have a high value for the response (percent bodyfat).

When outliers are detected, one can perform the regression analysis after dropping the outlying observations and evaluate their impact. After this, one needs to decide whether to report the analysis with the outliers or without them.

## Coefficients without outliers:## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -22.902 20.297 -1.128 0.260

## AGE 0.021 0.029 0.717 0.474

## WEIGHT -0.074 0.059 -1.271 0.205

## HEIGHT -0.241 0.187 -1.288 0.199

## CHEST -0.121 0.113 -1.065 0.288

## ABDOMEN 0.945 0.088 10.709 0.000

## HIP -0.171 0.152 -1.124 0.262

## THIGH 0.223 0.141 1.584 0.114##

## Coefficients in Original Model:## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -37.476 14.495 -2.585 0.010

## AGE 0.012 0.029 0.410 0.682

## WEIGHT -0.139 0.045 -3.087 0.002

## HEIGHT -0.103 0.098 -1.051 0.294

## CHEST -0.001 0.100 -0.008 0.993

## ABDOMEN 0.968 0.085 11.352 0.000

## HIP -0.183 0.145 -1.267 0.206

## THIGH 0.286 0.136 2.098 0.037We can see that WEIGHT and THIGH are no longer significant after removing these outlying points. We should note that removing observations reduces the power of all tests, so you may often see less significance if you remove many points (three is not really many!). But we can compare to removing three random points, and see that we don’t have major changes in our results:

## Coefficients without three random points:## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -36.732 14.620 -2.513 0.013

## AGE 0.008 0.030 0.287 0.774

## WEIGHT -0.139 0.045 -3.070 0.002

## HEIGHT -0.108 0.098 -1.094 0.275

## CHEST 0.002 0.100 0.016 0.987

## ABDOMEN 0.972 0.086 11.351 0.000

## HIP -0.182 0.145 -1.249 0.213

## THIGH 0.266 0.136 1.953 0.0526.7 Variable Selection

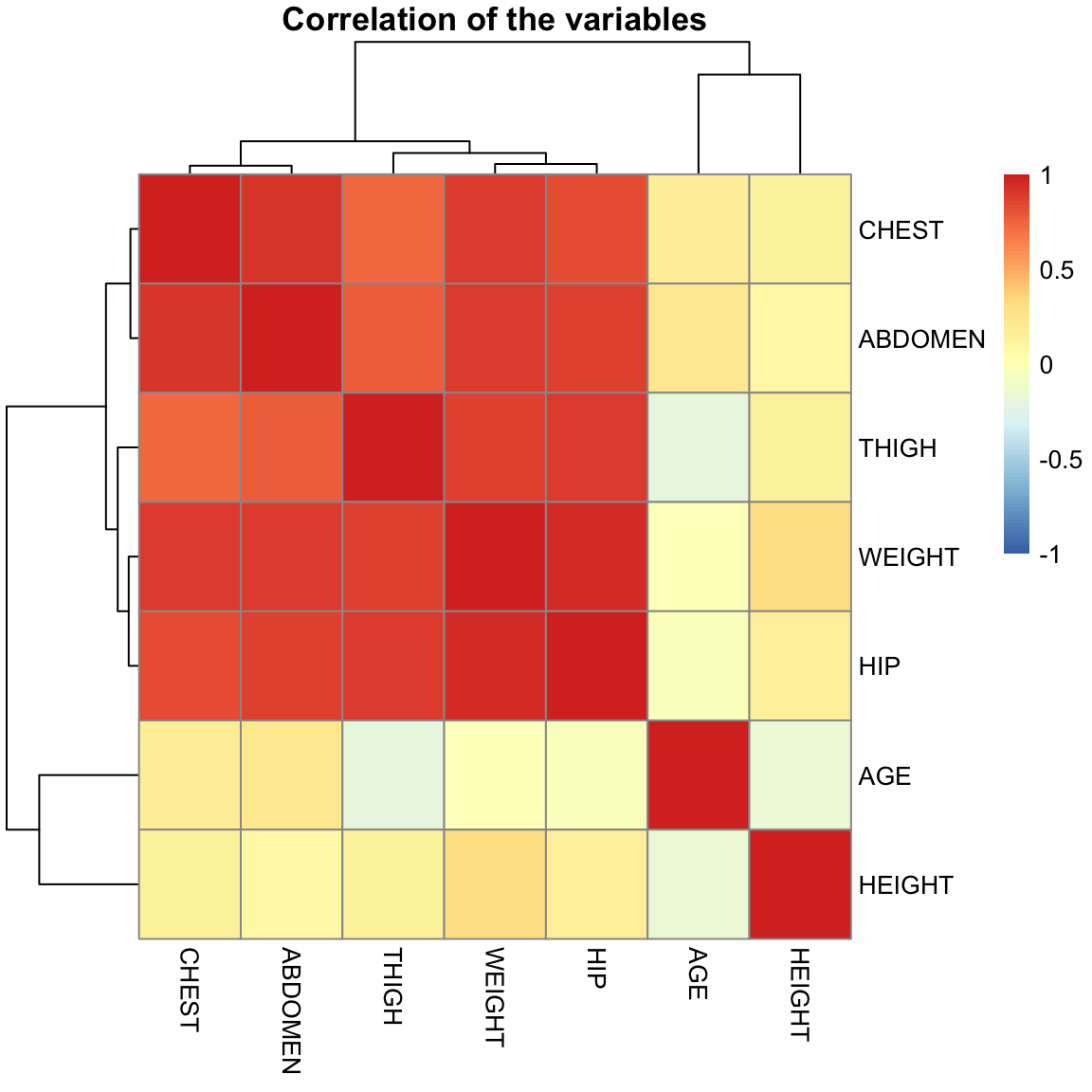

Consider a regression problem with a response variable \(y\) and \(p\) explanatory variables \(x_1, \dots, x_p\). Should we just go ahead and fit a linear model to \(y\) with all the \(p\) explanatory variables or should we throw out some unnecessary explanatory variables and then fit a linear model for \(y\) based on the remaining variables? One often does the latter in practice. The process of selecting important explanatory variables to include in a regression model is called variable selection. The following are reasons for performing variable selection:

- Removing unnecessary variables results in a simpler model. Simpler models are always preferred to complicated models.

- Unnecessary explanatory variables will add noise to the estimation of quantities that we are interested in.

- Collinearity (i.e. strong linear relationships in the variables) is a problem with having too many variables trying to do the same job.

- We can save time and/or money by not measuring redundant explanatory variables.

Several common, interrelated strategies for asking this question

- Hypothesis testing on variables or submodels

- Stepwise regression based on \(p\)-values

- Criteria based Variable Selection

We shall illustrate variable selection procedures using the following

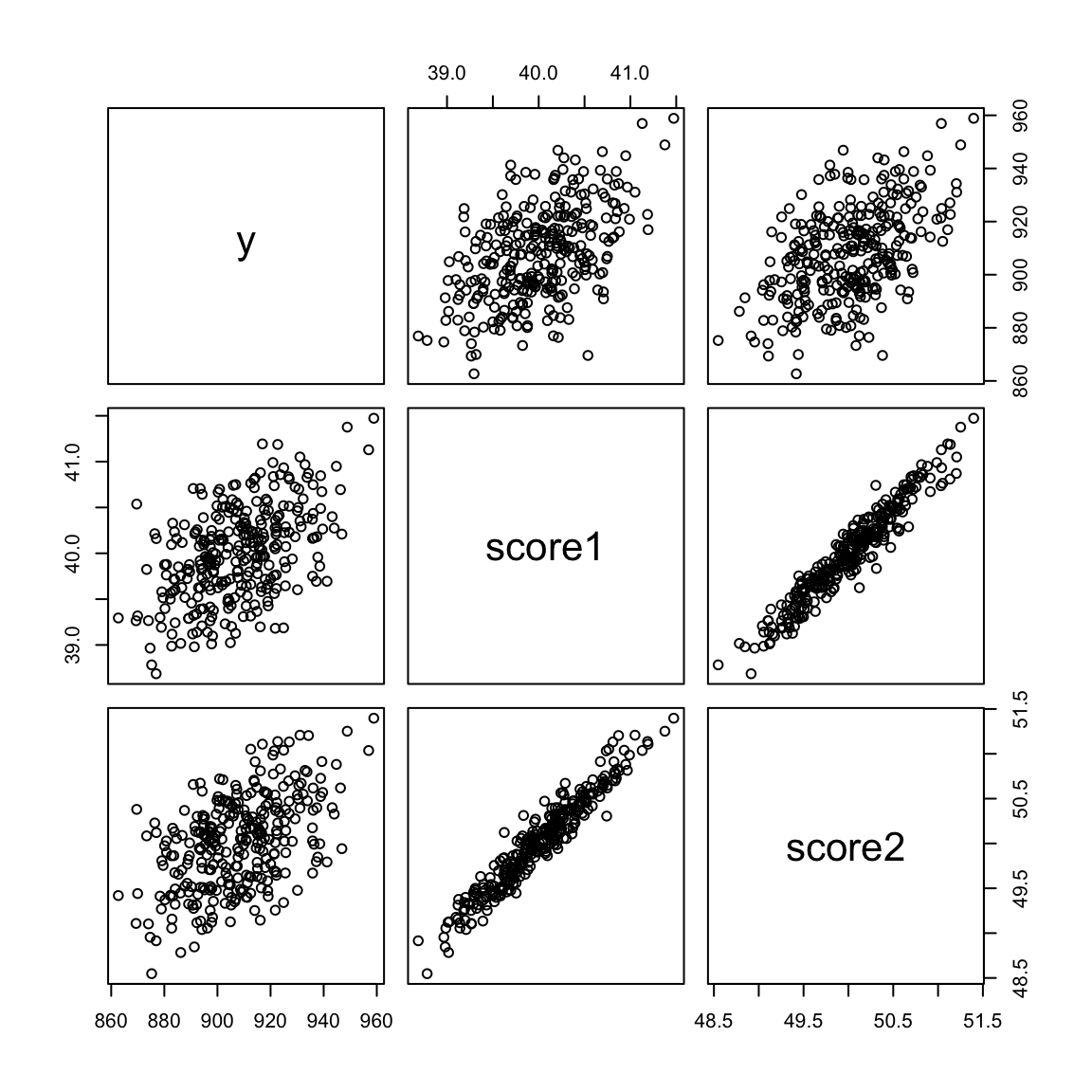

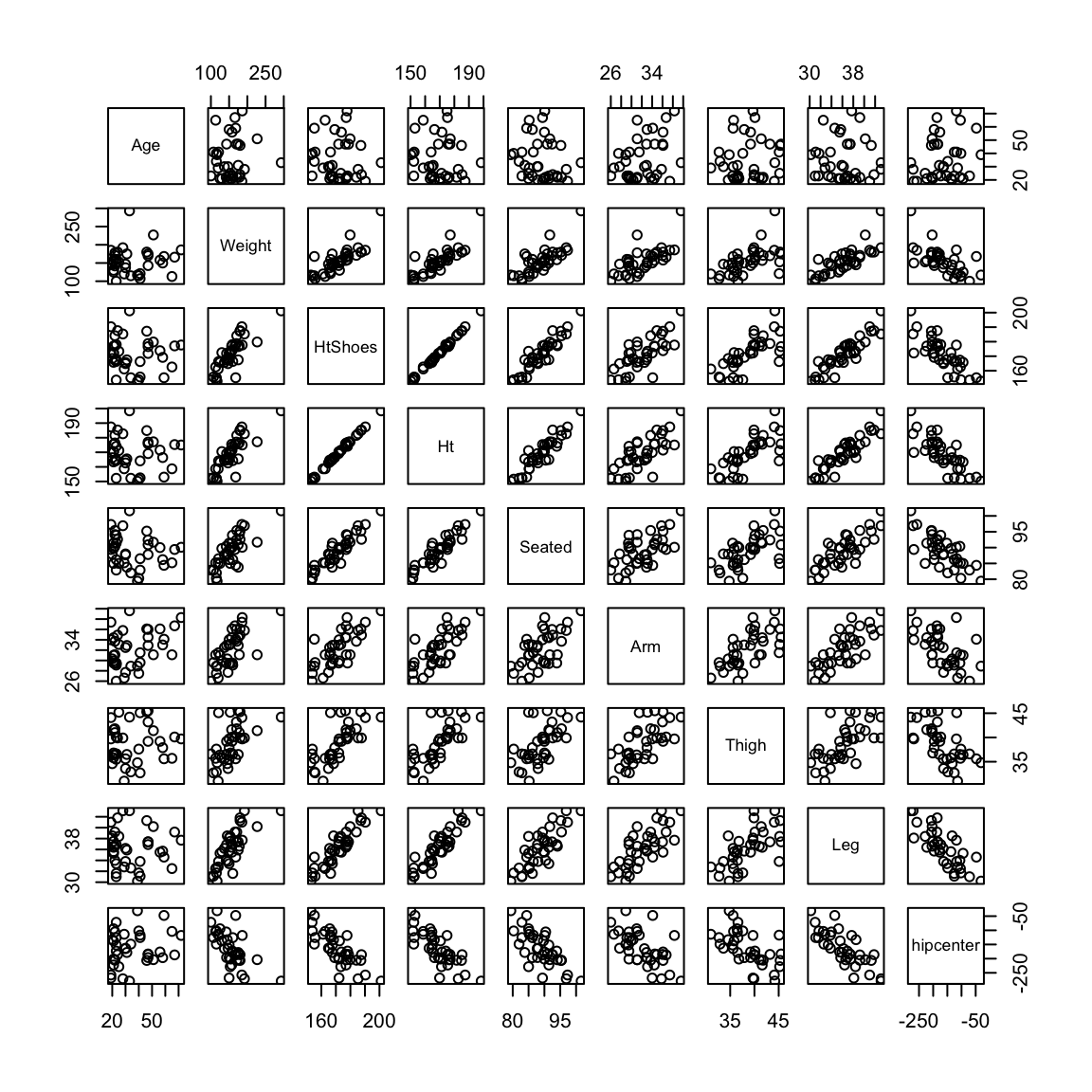

dataset (which is available in R from the faraway package). This small dataset gives information about drivers and the seat position that they choose, with the idea of trying to predict a seat position from information regarding the driver (age, weight, height,…).

We can see that the variables are highly correlated with each other, and no variables are significant. However, the overall \(p\)-value reported for the \(F\)-statistic in the summary is almost zero (this is an example of how you might actually find the \(F\) statistic useful, in that it provides a check that even though no single variable is significant, the variables jointly do fit the data well )

##

## Call:

## lm(formula = hipcenter ~ ., data = seatpos)

##

## Residuals:

## Min 1Q Median 3Q Max

## -73.827 -22.833 -3.678 25.017 62.337

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 436.43213 166.57162 2.620 0.0138 *

## Age 0.77572 0.57033 1.360 0.1843

## Weight 0.02631 0.33097 0.080 0.9372

## HtShoes -2.69241 9.75304 -0.276 0.7845

## Ht 0.60134 10.12987 0.059 0.9531

## Seated 0.53375 3.76189 0.142 0.8882

## Arm -1.32807 3.90020 -0.341 0.7359

## Thigh -1.14312 2.66002 -0.430 0.6706

## Leg -6.43905 4.71386 -1.366 0.1824

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 37.72 on 29 degrees of freedom

## Multiple R-squared: 0.6866, Adjusted R-squared: 0.6001

## F-statistic: 7.94 on 8 and 29 DF, p-value: 1.306e-056.7.1 Submodels and Hypothesis testing

We already saw that we can evaluate if we need any of the variables by setting up two models

Model 0: No variables, just predict \(\bar{y}\) for all observations

Model 1: Our linear model with all the variables